Lessons

Lesson 1 - General Overview of Global Energy Markets

The links below provide an outline of the material for this lesson. Be sure to read carefully through the entire lesson before returning to Canvas to submit your assignments.

Lesson 1 Overview


Overview

This lesson discusses how energy decision makers and stakeholders look at the development of new projects and technologies, and it defines the motivation for the entire EME 801 course. It should also help you take a holistic view of energy markets and place them in a broader context, so that you can understand how various stakeholders will act in various situations. By training ourselves to ask these kinds of questions, we will be able to participate in, and even help drive, the energy transition.

Please note that we will be convening as a team to discuss this course's subject matter as well as any other energy transition matters the cohort would like to cover.

Learning Outcomes

By the end of this lesson, you should be able to:

- Discuss topics related to global energy markets

- Explain the importance of energy markets in an economic context, a geo-political context, and an environmental context

- Explain the placement of the current energy transition in a historic context

- Describe the sources and sinks of energy in the United States energy eco-system

- Develop a global list of stakeholders for energy decisions

- State your preference for the time/day of a recurring class Zoom call

Reading Materials

Each of the reading assignments below is meant to introduce the importance of global energy markets and to give a taste of their structure. While reading, please think about how you would describe the ways energy questions affect the near- and long-term future of your business and our society.

- World Economic Forum (2022). 6 Ways Russia's Invasion of Ukraine Has Reshaped the Energy World [1]

- TheNationalNews.com (2022). BP Signs Long-Term LNG Contract with China's Shenzhen Energy [2]

- U.S. Energy Information Administration (2020). Nonfossil sources accounted for 20% of US Energy Consumption in 2019 [3]

- Bond, K., and Butler-Sloss, S. (2022). Peaking: A Brief History of Select Energy Transitions [4]

- World Economic Forum (2022). The 200-year History of Mankind's Energy Transitions [5]

What is due for Lesson 1?

This lesson will take us one week to complete. Please refer to the Course Calendar for specific due dates. Specific directions for assignments are in the Lesson 1 module in Canvas.

- Complete all of the Lesson 1 readings and viewings, including the lesson content

Questions?

If you have any questions, please post them to our Questions? discussion forum (not email). I will not be monitoring this forum; I encourage you to work as a cohort in that space. If you do require assistance, please reach out to me directly after you have worked with your cohort. I am always happy to get on a one-on-one call or, even better, a call with a group of you.

The Importance of Energy to the Economy, the Environment, and the World


The Economy

As we go about our daily lives, it is easy to take for granted our almost complete dependence, as humans in a post-industrial economy, on an incredible supply of inexpensive and convertible energy. If we live in anything other than a tropical climate, we require heating so that we don’t freeze. We use energy to till our fields and harvest our food. We also use energy to provide light during non-daylight hours and to power the machines that produce inexpensive manufactured goods such as clothing and other household items. Except when walking or bicycling, we move about by converting some form of potential energy into the kinetic energy of a plane, train, automobile, or boat. Finally, we use energy to communicate and to store and manipulate data. This vast network of comfort, nourishment, transportation, and information would be utterly impossible without inexpensive and readily dispatchable energy. Energy is, quite literally, the lifeblood of the entire economic system.

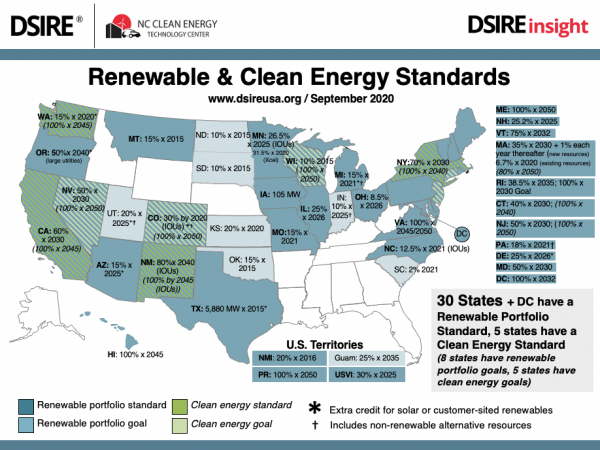

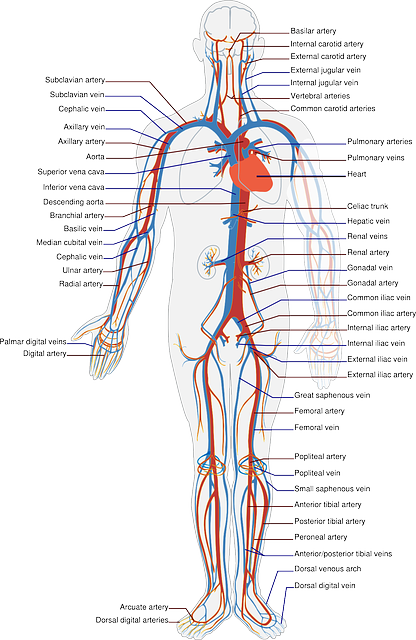

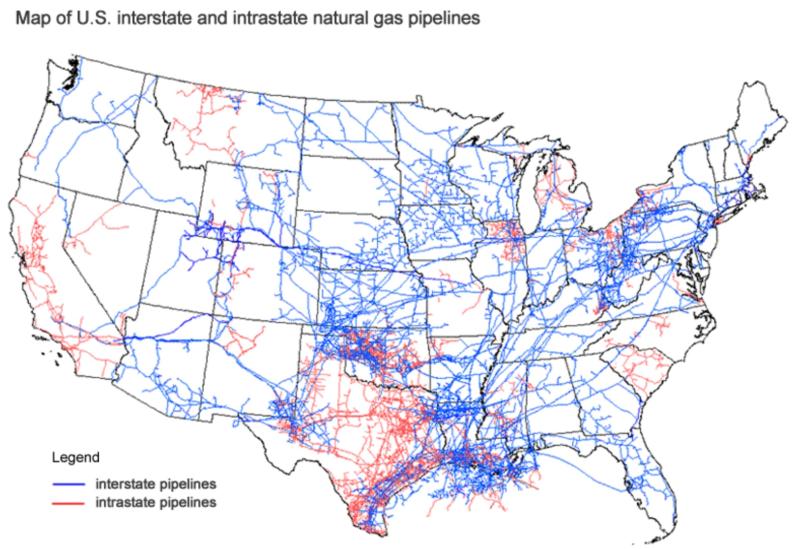

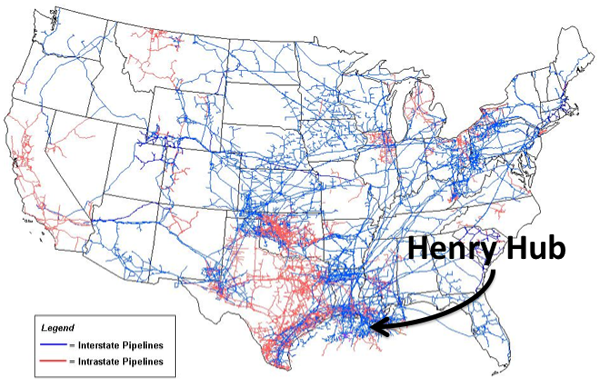

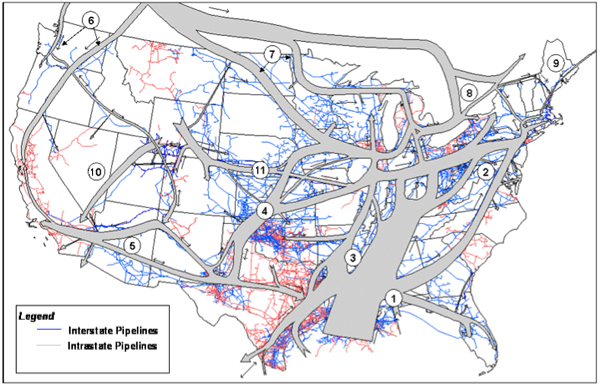

The natural gas grid (or the electric transmission and distribution grid) closely resembles the human circulatory system, and the energy delivery system is almost as crucial to the economy as the circulatory system is to the human body.

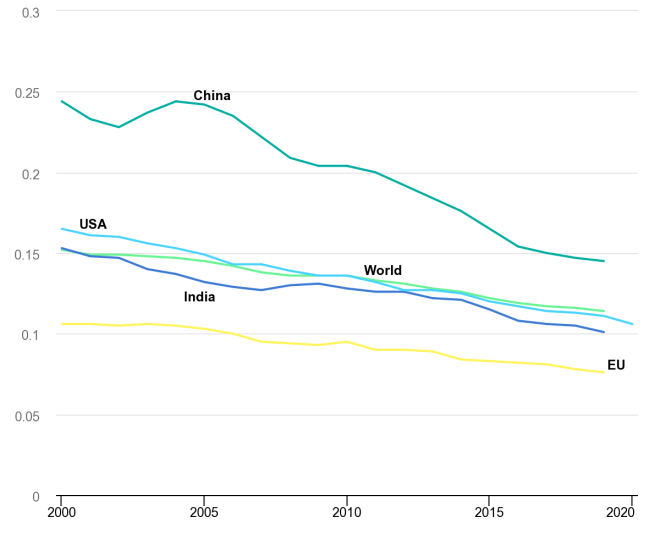

This chart shows the energy dependence of various economies around the world, expressed as the tonnes of oil equivalent (toe) needed to produce $1,000 of GDP. One tonne of oil equivalent is equal to 7.44 barrels of oil or 11,630 kWh. The implications are massive: every $1,000 of GDP in the US has at least 1 MWh, or almost a barrel of oil, embedded in it. Moreover, these figures do not quantify the risk to the economy should this energy supply be disrupted. In that case, energy intensity would increase massively, and whole supply chains and communication networks would be disrupted.

| Year | USA | India | World | China | EU |
|---|---|---|---|---|---|
| 2000 | 0.165 | 0.153 | 0.152 | 0.244 | 0.106 |
| 2001 | 0.161 | 0.148 | 0.149 | 0.233 | 0.106 |
| 2002 | 0.16 | 0.147 | 0.149 | 0.228 | 0.105 |
| 2003 | 0.156 | 0.14 | 0.148 | 0.237 | 0.106 |
| 2004 | 0.153 | 0.137 | 0.147 | 0.244 | 0.105 |
| 2005 | 0.149 | 0.132 | 0.145 | 0.242 | 0.103 |
| 2006 | 0.143 | 0.129 | 0.142 | 0.235 | 0.1 |
| 2007 | 0.143 | 0.127 | 0.138 | 0.222 | 0.095 |
| 2008 | 0.139 | 0.13 | 0.136 | 0.209 | 0.094 |
| 2009 | 0.136 | 0.131 | 0.136 | 0.204 | 0.093 |
| 2010 | 0.136 | 0.128 | 0.136 | 0.204 | 0.095 |
| 2011 | 0.132 | 0.126 | 0.133 | 0.2 | 0.09 |
| 2012 | 0.127 | 0.126 | 0.131 | 0.192 | 0.09 |
| 2013 | 0.127 | 0.122 | 0.128 | 0.184 | 0.089 |
| 2014 | 0.125 | 0.121 | 0.126 | 0.176 | 0.084 |
| 2015 | 0.12 | 0.115 | 0.122 | 0.165 | 0.083 |
| 2016 | 0.117 | 0.108 | 0.119 | 0.154 | 0.082 |
| 2017 | 0.114 | 0.106 | 0.117 | 0.15 | 0.081 |
| 2018 | 0.113 | 0.105 | 0.116 | 0.147 | 0.078 |
| 2019 | 0.111 | 0.101 | 0.114 | 0.145 | 0.076 |
| 2020 | 0.106 | -- | -- | -- | -- |
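As a quick check on the arithmetic in this section, the conversion from energy intensity to embedded energy can be sketched in Python. This is a minimal illustration that uses only the conversion factors stated in the text (7.44 barrels and 11,630 kWh per tonne of oil equivalent) and three US values from the table. The function names are hypothetical.

```python
# Conversion factors quoted in the lesson text, per tonne of oil equivalent (toe)
BARRELS_PER_TOE = 7.44
KWH_PER_TOE = 11_630

# US energy intensity from the table, in toe per $1,000 of GDP
US_INTENSITY = {2000: 0.165, 2010: 0.136, 2020: 0.106}


def embedded_energy(toe_per_1000_usd: float) -> tuple[float, float]:
    """Return (kWh, barrels of oil) embedded in $1,000 of GDP."""
    return toe_per_1000_usd * KWH_PER_TOE, toe_per_1000_usd * BARRELS_PER_TOE


def percent_change(start: float, end: float) -> float:
    """Percent change from start to end (negative values mean a decline)."""
    return (end - start) / start * 100


for year, intensity in US_INTENSITY.items():
    kwh, barrels = embedded_energy(intensity)
    print(f"{year}: {kwh:,.0f} kWh or {barrels:.2f} bbl per $1,000 of GDP")

change = percent_change(US_INTENSITY[2000], US_INTENSITY[2020])
print(f"Change in US energy intensity, 2000-2020: {change:.1f}%")
```

For 2020 (0.106 toe per $1,000), this works out to roughly 1,233 kWh, or about 0.79 barrels. That matches the "at least 1 MWh or almost a barrel" figure in the text. US energy intensity fell by roughly 36% between 2000 and 2020.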

The Environment


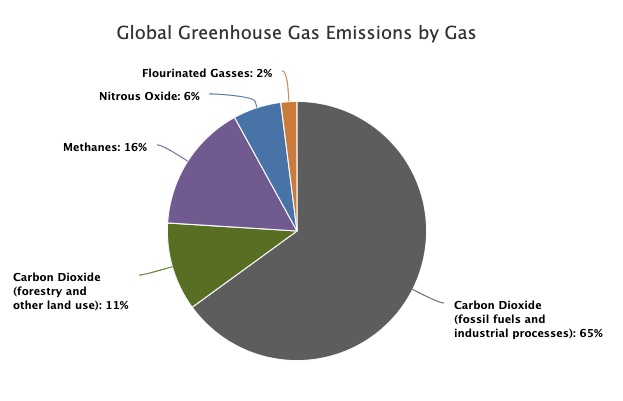

The constituents of global greenhouse gas emissions (the gases that contribute to global warming) are shown below:

| Source | Share of 2010 Emissions |
|---|---|
| Carbon Dioxide (fossil fuels and industrial processes) | 65% |
| Carbon Dioxide (forestry and other land use) | 11% |
| Methane | 16% |
| Nitrous Oxide | 6% |
| Fluorinated Gases | 2% |

To see just how much the energy sector contributes to these emissions, see the breakdown below.


| Sector | Category | Sub-sector | Emissions (%) |
|---|---|---|---|
| Energy (73.2%) | Used in Industry (24.2%) | Other | 10.6 |
| | | Iron and Steel | 7.2 |
| | | Chemical and Petrochemical | 3.6 |
| | | Food and Tobacco | 1.0 |
| | | Non Ferrous | 0.7 |
| | | Paper Pulp | 0.6 |
| | | Machinery | 0.5 |
| | Used in Buildings (17.5%) | Residential | 10.9 |
| | | Commercial | 6.6 |
| | Transportation (16.2%) | Road | 11.9 |
| | | Aviation | 1.9 |
| | | Shipping | 1.7 |
| | | Rail | 0.4 |
| | | Pipeline | 0.3 |
| | Unallocated Fuel Combustion | -- | 7.8 |
| | Fugitive Emissions | -- | 5.8 |
| | Agriculture & Fishing | -- | 1.7 |
| Agriculture (18.4%) | -- | Livestock and Manure | 5.8 |
| | | Agricultural Soils | 4.1 |
| | | Crop Burning | 3.5 |
| | | Deforestation | 2.2 |
| | | Cropland | 1.4 |
| | | Rice Cultivation | 1.3 |
| | | Grassland | 0.1 |
| Industry (5.2%) | -- | Cement | 3.0 |
| | | Chemicals | 2.2 |
| Waste (3.2%) | -- | Landfills | 1.9 |
| | | Wastewater | 1.3 |
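The breakdown above is hierarchical. A short sketch can roll the sub-sector shares up into category and sector totals, which confirms that energy accounts for roughly three quarters (73.2%) of emissions. This is an illustrative Python sketch. The nested-dictionary layout is an assumption; the numbers are transcribed from the table.

```python
# Shares (%) transcribed from the emissions table: sector -> category -> sub-sector.
# A category without a sub-sector breakdown maps directly to its share.
EMISSIONS = {
    "Energy": {
        "Used in Industry": {
            "Other": 10.6, "Iron and Steel": 7.2, "Chemical and Petrochemical": 3.6,
            "Food and Tobacco": 1.0, "Non Ferrous": 0.7, "Paper Pulp": 0.6, "Machinery": 0.5,
        },
        "Used in Buildings": {"Residential": 10.9, "Commercial": 6.6},
        "Transportation": {
            "Road": 11.9, "Aviation": 1.9, "Shipping": 1.7, "Rail": 0.4, "Pipeline": 0.3,
        },
        "Unallocated Fuel Combustion": 7.8,
        "Fugitive Emissions": 5.8,
        "Agriculture & Fishing": 1.7,
    },
    "Agriculture": {
        "Livestock and Manure": 5.8, "Agricultural Soils": 4.1, "Crop Burning": 3.5,
        "Deforestation": 2.2, "Cropland": 1.4, "Rice Cultivation": 1.3, "Grassland": 0.1,
    },
    "Industry": {"Cement": 3.0, "Chemicals": 2.2},
    "Waste": {"Landfills": 1.9, "Wastewater": 1.3},
}


def total(node) -> float:
    """Sum a single share, or recursively sum every share in a nested dict."""
    if isinstance(node, dict):
        return sum(total(child) for child in node.values())
    return node


for sector, node in EMISSIONS.items():
    print(f"{sector}: {total(node):.1f}%")
print(f"All sectors: {total(EMISSIONS):.1f}%")
```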

Hopefully, it becomes apparent that to address the threat of global warming, addressing the greenhouse gas intensity of the energy sector is paramount. In order to address this intensity, we must understand how the markets for energy work. This understanding helps us to make better decisions as we are confronted with choices to make in our professions.

The World


Energy dependence drives geopolitics in a significant way. Many (if not most) of the world's conflicts since the early 1980s have either been caused directly by a scramble for energy or had significant energy undertones. Flashpoints for global conflict include Iraq, Iran, Libya, Syria, Ukraine, Russia, Nigeria, and Venezuela, each of which has significant energy wealth. The recent and deadly conflict in Ukraine has significant energy implications. The Donbas region is a part of Ukraine with large oil and gas reserves. This potential supply, combined with greater integration of Ukraine’s economy with Europe's, represents a threat to Russia’s position as the EU’s energy supplier. Please read the article "6 Ways Russia's Invasion of Ukraine Has Reshaped the Energy World" for a view into the conflict and its effect on energy throughout the world.

There are many other examples of how energy, and the need for its uninterrupted supply, influences geopolitics and national security strategy. These include the large and continuous US Navy presence in the Persian Gulf and the Mediterranean Sea, as well as China's long-term contracting for petroleum products. Please read the article "BP Signs Long-Term LNG Contract with China's Shenzhen Energy."

Energy Transition in Historical Context


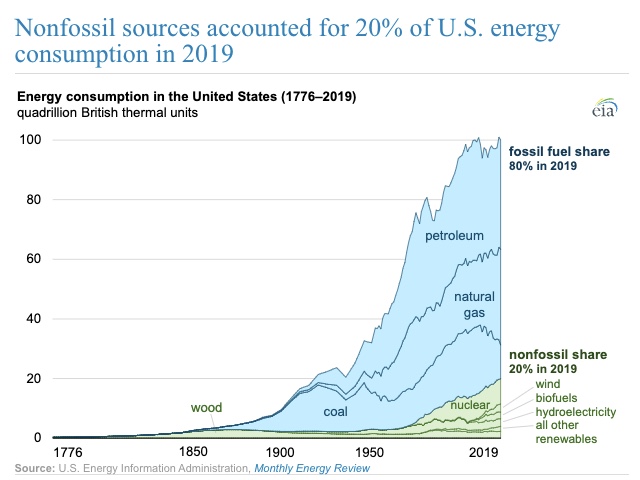

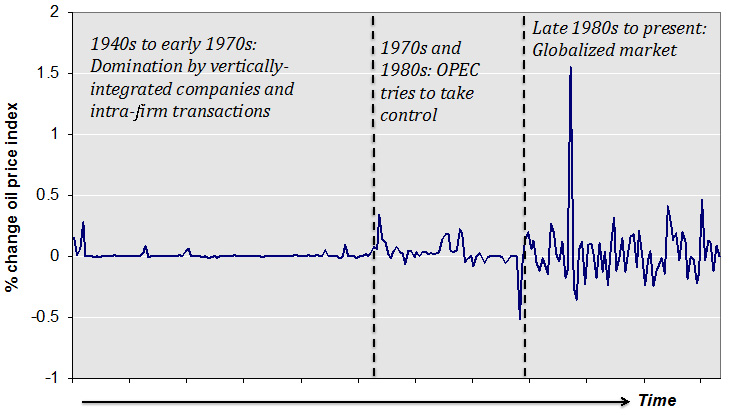

The current energy transition away from fossil fuels seems dramatic until it is placed in a historical context. Let’s look at the graphs in this section for a great visualization.

Make sure to read the following articles, which are all listed on the Lesson 1 Overview page:

- "Nonfossil Sources Accounted for 20% of US Energy Consumption in 2019"

- "Peaking: A Brief History of Select Energy Transitions"

- "The 200-year History of Mankind's Energy Transitions"

Sources and Sinks (Or Supply and Demand)


Definitions:

- Source - a place, person, or thing from which something comes or can be obtained.

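- Sink - a place or process into which something flows and where it is used up or absorbed; in an energy system, the end use (or loss) to which energy is ultimately delivered.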

- Supply - a stock of a resource from which a person or place can be provided with the necessary amount of that resource.

- Demand - the desire of purchasers, consumers, clients, employers, etc., for a particular commodity, service, or other item.

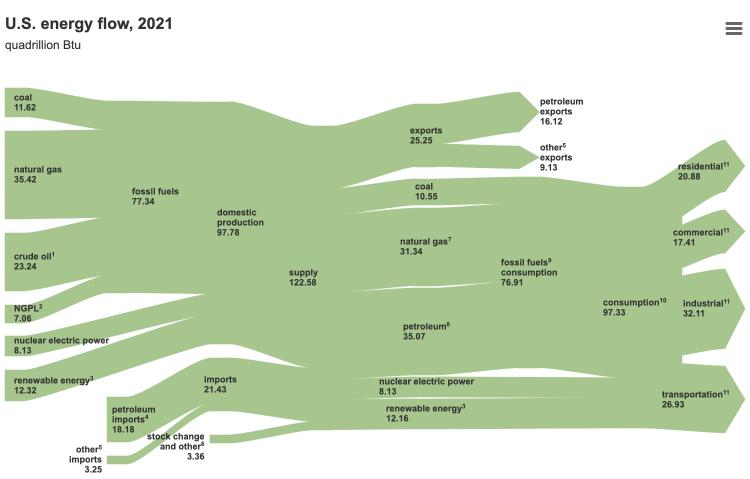

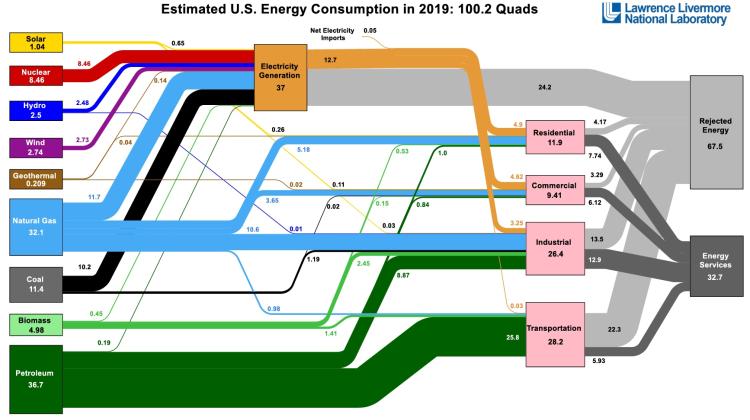

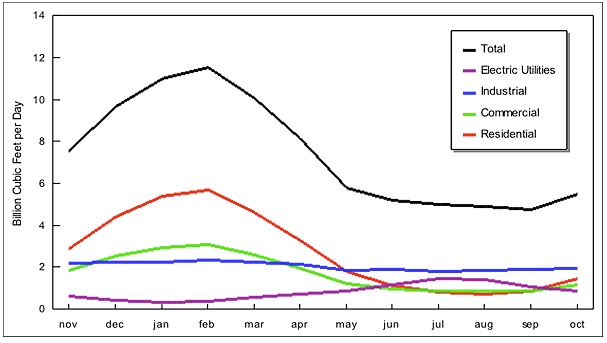

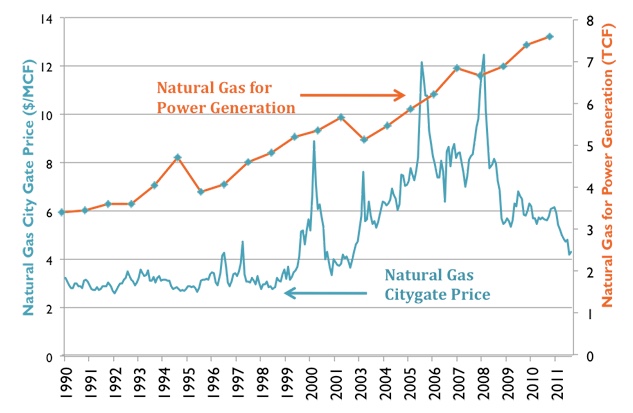

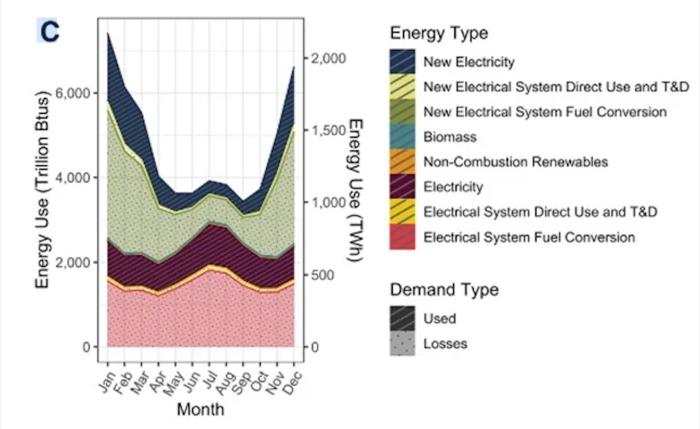

The figures in this section visualize the various sources and sinks of energy in the US economy. They show the original source of the energy, whether direct radiation from the sun, combustion of natural gas, nuclear fission, or some other source. From there, we see how that energy "moves" through the various conversion systems to provide energy services to sectors such as transportation or residences (the sinks).

One of the most interesting sinks in the US economy is rejected energy. Rejected energy is that which does not provide useful work. Rejection is usually in the form of heat, but also in the form of transportation losses. What does this imply for the potential to decarbonize?

We discuss sources and sinks here to illustrate the level of energy consumption across the US and also how complex the overall market is.

We will discuss supply and demand in greater detail toward the end of the course. Understanding how these forces interact in the present as well as how they will interact in the future will help inform all of the decisions we make in our professions.

Global Stakeholder Identification


What is the definition of a stakeholder?

A stakeholder is defined as “a person or entity with an interest or concern in something.” A fuller definition might read: “Individuals and organizations who are actively involved in the enterprise, or whose interests may be positively or negatively affected as a result of the enterprise.” This definition can be extended to include those who believe their interests may be affected by the enterprise. Such a broad definition is very useful because it lets us cast a very wide net, making sure that all the people and institutions affected by our decisions are considered.

On a global scale, this is clearly a very “wide net.” It includes the entire earth and many different people and entities, and many entities will fall into more than one stakeholder category. Below are some obvious energy stakeholders in the global market:

- Producers

- Consumers

- Local, State, and National Government

- Transporters of Energy

- Marketers

- Citizens

Please see if you can double or even triple the number of stakeholders globally as you consider the energy market.

Lesson 1 Summary and Final Tasks


Lesson Summary

Hopefully, in this lesson you learned how energy decision makers and stakeholders look at the development of new projects and technologies. We defined the motivation for the entire EME 801 course. We also hopefully helped you take a holistic view of energy markets and place them in a broader context, so that you can understand how various players will act in various situations. By learning to ask these kinds of questions, you are becoming able to participate in, and even help drive, the energy transition.

Reminder - Complete all of the Lesson 1 tasks!

Please double check the What is Due for Lesson 1? list on the first page of this lesson to make sure you have completed all of the activities listed there before you begin Lesson 2.

Lesson 2 - Project Pre-Research

The links below provide an outline of the material for this lesson. Be sure to carefully read through the entire lesson before returning to Canvas to submit your assignments.

Lesson 2 Overview


Overview

This module introduces the Final Project in full detail. You will learn what is required to complete the project and how to place it in the context of the global energy market. The learning objectives of the course include not just exposure to the structure and performance of energy markets but, most importantly, the determination of what that structure and performance mean for the business in which you might be engaged. To create this “laboratory” where you can test your hypothesis of viability, we need to create a “project” against which we can test certain assumptions. For this purpose, we will spend a week understanding just what is expected for the project and identifying some of its major parameters.

Learning Outcomes

By the end of this lesson, you should be able to:

- Discuss elements of the semester project: clients, locales, project charters, stakeholders, and costs and benefits

- Investigate ideas for the client, locale, and major stakeholders for your project

- Examine the roles of the client, locale, and major stakeholders for the project

- Explain the importance of a project charter

- Explain the importance of a stakeholder register

- Consider the costs and benefits of the project beyond the purely financial ones

Reading Materials

- Brown, A. S. (2005). The charter: Selling your project [20]. Paper presented at PMI Global Congress 2005

- Murali, L. (2023). What is Stakeholder Analysis? [21]

- Project Management Academy (2021). What is a Stakeholder Register? [22]

What is due for Lesson 2?

This lesson will take us one week to complete. Please refer to the Course Calendar for specific due dates. Specific directions for the assignment below are in the Lesson modules in Canvas.

- Complete the assigned readings and viewings for Lesson 2

- Participate in the Zoom discussion

- Complete Quiz 2

- Project work: preliminary Project Charter (due at the end of Lesson 3)

- Project work: preliminary Stakeholder Register (due at the end of Lesson 3)

Questions?

If you have any questions, please post them to our Questions? discussion forum (not email). I will not be monitoring this forum; I encourage you to work as a cohort in that space. If you do require assistance, please reach out to me directly after you have worked with your cohort. I am always happy to get on a one-on-one call or, even better, a call with a group of you.

The Project, Specifically (Why so early?)


This course offers students a broad introduction to project decision-making in the energy industries, and the impacts that markets, institutions and regulations have on investments and decisions made by firms in the energy industries. Topics to be covered include a discussion of market structures for natural gas, crude-oil, transportation fuels and electric power; basic project evaluation and decision-making for energy projects; and the impacts of major environmental regulations at the state and federal level on the functioning of energy markets and energy project decision-making. (Directly from the course catalog.)

As this course is part of a Master of Professional Studies degree, real-world application of its concepts is paramount to its design. Consequently, we introduce the project in the first few weeks of the course so that the student can immediately begin to apply the content presented in the following modules to a real-world problem. Ideally, the student finds that problem applicable to their current or future profession, or simply finds it particularly interesting. Either way (or potentially both), the student will be learning the content with an eye to the effect that market structure and financial principles may have on the chosen project.

The intent of the project is to allow you to define an interesting or important problem and learn how the dynamic regulatory and market landscape will affect outcomes. You will not be penalized for taking on too grand or too small a project. As you work through the project, however, you may learn that you have chosen too grand or too small a project... but that is important learning, too. The design of the course and the intent of the instructor are to allow for corrections should this become a problem.

Please understand that you are being granted some serious agency here, and that should help you take control of your own learning and success. But also understand that the cohort and your instructor are available to help should the lack of "guardrails" be more of a hindrance than a help. The energy transition is very dynamic at this point in time. Therefore, the opportunity to generate a dynamic project without overwhelming constraints most closely reflects the industry that we are studying.

The project will be defined as such:

Investigate an energy project that you, as a developer or corporate decision maker, would like to construct or replace. This investigation will include the development of a full financial model to inform investment decisions and to test various scenarios for the project’s development. We will also develop a full stakeholder register and a review of how governmental policy would affect your project. The project will be worth 700 points of your grade, divided as shown below:

| Task | Description | Points |
|---|---|---|
| 1 | Project Charter and Stakeholder Register | 75 |
| 2 | Development of Revenue Time Series | 50 |
| 3 | Development of Operating Expense Time Series | 50 |
| 4 | Development of Capital Costs | 50 |
| 5 | Development of Financial Metrics | 50 |
| 6 | Program Excel Financial Model | 50 |
| 7 | Development of Risk Management Measures | 50 |
| 8 | Development of Policy Sensitivities | 50 |
| 9 | Development of Electricity Market Sensitivities | 50 |
| 10 | Development of Fossil Fuel Market Sensitivities | 50 |
| 11 | Non-Financial Costs and Benefits | 75 |
| 12 | Final Presentation of Results | 100 |
| Total | -- | 700 |

Each of the tasks will require some quantification to ultimately assess the financial value of your project, but there will also be a required write-up of the derivation of your values. Instructions will be provided in each module on what is required.

The Client and Locale


Client: Someone who pays for goods or services.

Locale: A place where something happens.

For your project, you will be required to identify exactly who is going to pay for the energy that your project is going to produce. This “who” can be many different people or entities, but determining it up front will be very important. For example, the client could be:

- a residential customer installing a solar rooftop
- a large commercial building upgrading its HVAC system
- a big box retail customer wanting to “green up” its energy purchases
- a large manufacturer that wants to install a combined heat and power system
- a government agency that wants to take advantage of latent energy in its wastewater system to provide heat to a district energy system

The possibilities for defining your client are really quite broad.

Client definition is important because the client will be the entity paying for the energy that is generated (or saved) by your project. The client may be different from the actual end user of the energy. For the greatest success of the project, it is important to keep the client’s requirements foremost in mind.

The course is designed so that the student can be very creative with respect to client selection. There will be no specific prohibitions on client selection. However, the evaluator’s knowledge base is limited. The further the student strays from conventional technologies and from familiar political and economic regimes, the more difficult it will be to provide accurate feedback and evaluation. Innovation is encouraged, but please be ready to defend and justify your assumptions and parameters.

The locale is the “where.” But the “where” has many characteristics. For example:

- The geography

- The demography

- The local economy

- The local, state and federal policy, government and attitude toward energy projects

- The general supply chain, including labor, transportation, and availability of energy

- The local and regional energy market and infrastructure

This is not an exhaustive list, but it gives a general idea of what will need to be researched to develop your project.

What is a Project Charter?


A project charter is a formal, typically short document that describes your project in a general fashion, including its objectives, how it will be carried out, and who the stakeholders are. It is a crucial ingredient in planning the project. The charter is used throughout the project lifecycle and is a living document that may change from start to finish. It provides a simple guideline that the project manager and team members can refer to for clear guidance when reaching (or not reaching) milestones. It can be modified as the project evolves, but it will always provide the direction needed to ensure that the project does not drift. Typical elements of a project charter include:

- Reasons for the project

- Objectives and constraints of the project

- The main stakeholders

- Risks identified

- Benefits of the project

- General overview of the budget

Because the course will go into a great deal of detail on many of these elements, the only elements that must be included in the Project Charter for now are:

- Reasons for the project

- The potential benefits of the project

- The locale

- The client

- The stakeholders

- The technology

In Canvas, please take a look at the example project charter and stakeholder register submitted by your peers in a previous semester. They provide great examples for how to think about this.

Stakeholders


Stakeholder: A person with an interest or concern in something, especially a business.

So this is the rest of the “who” after we define the client.

According to the Project Management Institute, project stakeholders are defined as: “Individuals and organizations who are actively involved in the project, or whose interests may be positively or negatively affected as a result of project execution or successful project completion.” An addition to this definition would also include those who believe their interest may be affected by the project. This is a very useful definition as it allows us to cast a very wide net to make sure that all the people and institutions that will be affected by our project are considered.

Stakeholder Identification Process:

The stakeholder identification process begins, and is ideally close to complete, at the start of project development. It is nonetheless an ongoing process: some stakeholders may leave and others may appear as the project evolves. It is therefore very important to stay flexible and alert to the disappearance and emergence of stakeholders as the project develops. The specific steps we can take in the identification process are:

- Review the project documents

- Brainstorm with your project team

- Communicate

- Develop and maintain a stakeholder register

Let’s take a look at what each of these steps involves:

- Review the Project Documents – Certain project documents can be reviewed to help identify stakeholders. The project charter and previous stakeholder registers will be of particular assistance. Your project charter will have identified the technology, locale, and size of the project, which should help you think about who the stakeholders will be. Previous stakeholder registers will allow the project manager to avoid “re-inventing the wheel.” Other important documents include the various government regulations governing solar development, utility and ISO tariffs, labor standards, impact assessment studies from non-governmental organizations, and solar industry standards.

- Brainstorm with your project team - The project team in this case will be your cohort in this course, but if you were working for a solar development company, there would be a project team which would provide you with assistance here.

- Communicate – As stakeholders are identified and brought into the project appropriately, they may also help to identify others who will be affected or need to be consulted.

- Develop and Maintain a Stakeholder Register - This document records the Stakeholder Management process. The Stakeholder Register typically includes the following information about each stakeholder:

  - Name

  - Contact information

  - Title

  - Role on Project

  - Interests

  - Expectations

  - Influence

  - Impact

  - Internal/External
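To make the register fields concrete, here is a minimal sketch of a stakeholder register as a Python data structure. It assumes one record per stakeholder, with the fields listed above. The class names and example entries are hypothetical; in practice the register would more likely live in a spreadsheet.

```python
from dataclasses import dataclass, field


@dataclass
class Stakeholder:
    """One row of a stakeholder register, using the fields listed in the lesson."""
    name: str
    contact_information: str
    title: str
    role_on_project: str
    interests: str
    expectations: str
    influence: str  # free text here, e.g. "high"/"low" (the scale is an assumption)
    impact: str     # free text here, e.g. "high"/"low" (the scale is an assumption)
    internal: bool  # True for internal stakeholders, False for external ones


@dataclass
class StakeholderRegister:
    """A living register: stakeholders can be added or removed as the project evolves."""
    stakeholders: list[Stakeholder] = field(default_factory=list)

    def add(self, stakeholder: Stakeholder) -> None:
        self.stakeholders.append(stakeholder)

    def remove(self, name: str) -> None:
        """Drop a stakeholder who is no longer relevant to the project."""
        self.stakeholders = [s for s in self.stakeholders if s.name != name]

    def external(self) -> list[Stakeholder]:
        return [s for s in self.stakeholders if not s.internal]


# Hypothetical entries for illustration only
register = StakeholderRegister()
register.add(Stakeholder(
    name="County Planning Office", contact_information="planning@example.gov",
    title="Zoning Officer", role_on_project="Permitting authority",
    interests="Land-use compliance", expectations="Complete, timely application",
    influence="high", impact="high", internal=False,
))
register.add(Stakeholder(
    name="Development Team", contact_information="team@example.com",
    title="Project Manager", role_on_project="Developer",
    interests="Project returns", expectations="On-time delivery",
    influence="high", impact="high", internal=True,
))
print([s.name for s in register.external()])
```

The `add` and `remove` methods reflect the point that stakeholder identification is ongoing: stakeholders can leave or appear as the project evolves.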

A full development of the stakeholder register along with the actual information for your locale, technology and client for your project will be required as a completion requirement for the final Project write-up.

Let us now take a look at who the most likely stakeholders for a solar project might be:

- The Client

- The Developer (you)

- The Lender(s)

- The local authorities (we'll get further into this in the policy section)

- The State

- The Federal Government

- Labor

- Local non-governmental groups

- State and national non-governmental groups

- Local Politicians

- Local Businesses

- Elements in the non-labor supply chain

See if you can expand this list. Remember: to appropriately de-risk the project, identifying and communicating with the important stakeholders from the very beginning is paramount.

Certain Stakeholder Roles May Include:

The Developer (you)

The developer is responsible for devising and managing the whole process, from ideation to delivery of the project and, in many cases, through portions of the project's active life.

The Lender

The lender provides capital to the project. The lender will require statements of satisfactory financial viability (a pro forma, among other documents) as well as proof of other approvals (environmental, utility, zoning, and so on).

Authorities at Various Levels

Each of these may have to weigh in or grant certain approvals.

Labor

There are several facets to the labor question. Making sure that appropriate human resources are available to complete the specific project is important. There may also be backlash in certain areas, because other workers could be displaced by solar energy. There is a significant push to retrain energy workers so that the renewable energy workforce is fit for purpose.

Local Non-governmental Groups

There may be local interest groups that are very strong supporters of sustainable development, for instance. There may also be local interest groups that oppose your development for reasons such as its clash with the natural landscape.

State and National Non-Governmental Groups, Local Politicians, and Local Businesses

As with local groups, both support for and opposition to solar development can be found among these stakeholders, and either could affect your project.

Elements in the Non-Labor Supply Chain

Supply chain issues are much more prevalent at the current level of solar penetration than they have ever been. Taking special care to treat these very important stakeholders well will mitigate significant risks in delivering the project.

See if you can develop these roles further in your own thinking and identify more stakeholders as we work through the course. Please take a look at the sample stakeholder register below, submitted by a student in AE 878. It is a great example of how to think about this:

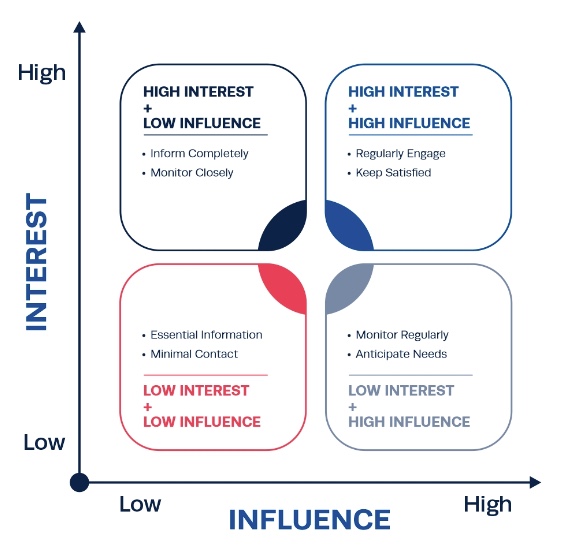

A good way to think about stakeholder management in particular is to consider the following grid of interest and influence:

| | Low Influence | High Influence |
|---|---|---|
| High Interest | Inform completely; monitor closely | Regularly engage; keep satisfied |
| Low Interest | Essential information; minimal contact | Monitor regularly; anticipate needs |

Here we see the intersection of interest and influence. As we work through the course, see if you can think of the stakeholders that will be introduced and how you might manage them. While this is not a course in project management, we are learning about energy markets through the lens of developing a project.
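The grid can be read as a simple lookup from a stakeholder's interest and influence to an engagement strategy. The strategy text in the sketch below comes from the grid. The 1-5 scoring scale, the cutoff of 3 for "high," and the example stakeholders are hypothetical assumptions for illustration.

```python
# Engagement strategies from the interest/influence grid, keyed by (interest, influence)
STRATEGY = {
    ("high", "low"): "Inform completely; monitor closely",
    ("high", "high"): "Regularly engage; keep satisfied",
    ("low", "low"): "Essential information; minimal contact",
    ("low", "high"): "Monitor regularly; anticipate needs",
}


def level(score: int, threshold: int = 3) -> str:
    """Map a 1-5 score to 'high' or 'low' (scale and threshold are hypothetical)."""
    if not 1 <= score <= 5:
        raise ValueError("score must be between 1 and 5")
    return "high" if score >= threshold else "low"


def engagement_strategy(interest: int, influence: int) -> str:
    """Look up the grid quadrant for a stakeholder's interest and influence scores."""
    return STRATEGY[(level(interest), level(influence))]


# Hypothetical stakeholders and scores, for illustration only
for name, interest, influence in [
    ("Local group opposed to the project", 5, 2),
    ("Lender", 4, 5),
    ("Regional grid operator", 2, 4),
]:
    print(f"{name}: {engagement_strategy(interest, influence)}")
```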

Other Costs and Benefits


As we talk about energy markets, we want to be sure to recognize that energy development may have benefits outside the strict, localized financial analysis. There may be incremental jobs as a result of the development. There could be a reduction in carbon footprint as a result of the project. There could be many others. There may also be costs associated with the project that are not captured in the strict, localized financial analysis. Examples include increased carbon emissions, shadow flicker from a wind turbine, or the spoiling of a view by a solar development.

Lesson 2 Summary and Final Tasks


This module introduced the Final Project in full detail. We hope you learned what is required to complete the project and how to place it in the context of the global energy market. The learning objectives of the course include not just exposure to the structure and performance of energy markets but, most importantly, the determination of what that structure and performance mean for the business in which you might be engaged. To create this “laboratory” where you can test your hypothesis of viability, we are creating a “project” against which we can test your assumptions. For this purpose, we spent this week understanding just what is expected for the project and identifying some of its major parameters.

Reminder - Complete all of the Lesson 2 tasks!

You have reached the end of Lesson 2! Double-check the What is Due for Lesson 2? list on the first page of this lesson to make sure you have completed all of the activities listed there before you begin Lesson 3.

Lesson 3 - Basic Accounting and Corporate Finance

The links below provide an outline of the material for this lesson. Be sure to carefully read through the entire lesson before returning to Canvas to submit your assignments.

Lesson 3 Overview

Overview

Steve Jobs and Bill Gates started their respective companies (Apple and Microsoft) in their garages. That makes for a nice story, but how exactly did these companies go from garage-band material to global behemoths? They had to raise money, or "capital," somehow; there was only so far that Steve Jobs' own bank account (or his parents' bank account) was going to take him. Eventually, both Jobs and Gates needed to seek additional capital from various sorts of investors to help their companies grow. There is, after all, an old saying that it "takes money to make money." True enough. In this lesson, we will take a closer look at the process of raising capital, from venture capital to stocks to bonds. The world of corporate finance can get very murky very fast, and in some ways it's more of a legal practice than a business practice. Our focus is going to be on understanding the various mechanisms that are used to finance energy projects and the implications of those funding mechanisms for overall project costs.

Where we will ultimately wind up is at this mysterious quantity called the "discount rate." Where does that come from? When we are looking at social decisions that involve common costs and benefits, the discount rate is usually more of a matter of debate than anything else. But when a business decision is involved (and that business is a for-profit entity), then there is a rhyme and reason behind the determination of the discount rate as the "opportunity cost" of its investors. There are many different types of investors in a typical firm or project, all of whom face different opportunity costs, so we will encapsulate these in a single number called the "weighted average cost of capital" (WACC). The WACC turns out to be the correct discount rate for a company or a project.
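As a rough illustration of where this is headed, the sketch below computes a WACC from a project's mix of debt and equity and then uses it as the discount rate for a stream of cash flows. It assumes the common textbook after-tax form, WACC = (E/V) r_E + (D/V) r_D (1 - T), where E and D are the market values of equity and debt, V = E + D, and the (1 - T) term reflects the tax deductibility of interest. That tax treatment, the function names, and every number below are illustrative assumptions, not figures from this lesson.

```python
def wacc(equity_value, debt_value, cost_of_equity, cost_of_debt, tax_rate):
    """Weighted average cost of capital, using the after-tax textbook form (an assumption)."""
    total = equity_value + debt_value
    equity_weight = equity_value / total
    debt_weight = debt_value / total
    # Interest is assumed tax-deductible, so debt enters at its after-tax cost.
    return equity_weight * cost_of_equity + debt_weight * cost_of_debt * (1 - tax_rate)


def npv(rate, cash_flows):
    """Net present value; cash_flows[0] occurs today (year 0), cash_flows[t] in year t."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


if __name__ == "__main__":
    # Hypothetical capital structure: $60M equity at 12%, $40M debt at 6%, 25% tax rate.
    r = wacc(60e6, 40e6, 0.12, 0.06, 0.25)
    print(f"WACC = {r:.4f}")
    # Hypothetical project: $100M up front, then $15M per year for 10 years.
    flows = [-100e6] + [15e6] * 10
    print(f"NPV at WACC = ${npv(r, flows):,.0f}")
```

With these made-up inputs the WACC works out to 9%, and the project's NPV at that rate is slightly negative. An investor whose opportunity cost is 9% would therefore pass on it, even though its undiscounted cash flows ($150M) exceed its up-front cost ($100M).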

Finally, most of the material that we will develop in this lesson is targeted toward for-profit companies making investments that are expected to earn some sort of positive return over a relevant time horizon. We won't talk much about the non-profit sector except at the very end, when we discuss how the deregulation of commodity markets has changed the investment game for many for-profit firms, but not necessarily for publicly-owned or cooperative firms. If you are interested specifically in project finance concepts for non-profit firms, the Non-profit Finance Fund [24] has some good resources available.

Learning Outcomes

By the end of this lesson, you should be able to:

- Discuss topics related to Basic Accounting and Corporate Finance

- Explain the fundamental difference between debt and equity financing

- Identify different types of equity investors

- Calculate the cost of debt and equity financing for a single company or project

- Using the cost of debt and equity financing, calculate the weighted average cost of capital

- Determine the client, locale, and major stakeholders for your project

- Develop a preliminary Project Charter and Stakeholder Register for your project

Reading Materials

We will draw on sections from several readings. In particular, there are a number of good online tutorials on the weighted average cost of capital. If you want to get deeper into this subject, there is no substitute for a good textbook on corporate finance. The all-time classic is Principles of Corporate Finance by Brealey, Myers, and Allen (672 pp., McGraw Hill). This book has gone through a number of editions, so earlier editions are probably available online for relatively little cost.

Please watch the following video interview with Elise Zoli (43:45). If this video is slow to load here on this page, you can always access it and all course videos in the Media Gallery in Canvas.

ELISE: Sure. But first, I'm going to start by saying it's an honor and a pleasure to be here, and there's no one I'd rather talk about this stuff with than you. Thank you. My background: I'm a lawyer, I'm middle-aged, and I have worked in law exclusively in the sustainability space for more than two decades. I went back to school, which is a family thing, and I also have my MBA.

MARK: Great. So how did you end up choosing law as a profession?

ELISE: For me, when I started practicing law, there were a number of new laws that were transformational in how we approach our economy and our collective prosperity. I thought these new laws were going to alter the way we decide to move money in the world. And I really believed that if we were going to design a better economic structure worldwide, we all had to participate, show up, and figure out how to do that right. So I was a big fan of these new ways of thinking: integrating sustainability, integrating carbon and new currencies, and integrating diversity and equity. I'm a small, middle-aged Italian woman. I could see that if we were going to have a more prosperous, fairer economy, we had to do things differently, and I was excited to be part of the team thinking about that.

MARK: Great. You're at Wilson Sonsini now. What are your current duties there?

ELISE: Yeah. Wilson Sonsini is somewhat of a unique law firm. It was created for the new economy. We help entrepreneurs, founders, and innovators make the great leap forward. It was a natural place for me to be, because we work shoulder to shoulder with people who are thinking about how to design the new world. That's the form of service and support I like to provide.

MARK: Tell us about some of the kinds of projects you work on for WS.

ELISE: Yeah, I'm a little bit of a different critter. Like I said, I'm granola, crunchy. I've only worked in the sustainability space, but I have worked in forms of clean energy that are maybe broader than many people think of. I've worked in fission. I've worked in fusion. I've worked in regenerative farming. I've worked in advanced forms of fuels, particularly hydrogen. And I've worked on how we structure waste and water more than the average bear. So I think I view energy in the real physics sense of the term, right? Any caloric flux is really important to me. I'm going to encourage you and your students in this conversation to think broadly about what we mean by energy. I think that's what's good for their careers.

MARK: Right. Well, it's interesting, because one of the things we show at the very beginning of the course is a graph that shows 67% of the energy being rejected. That's something near and dear to both of us, so I really appreciate you bringing it up. "Caloric flux" is a great way to talk about it.

ELISE: Exactly, right. I'm going to talk in very particular terms about what I do, which is principally to move money. But I think it's really important that we start to get people to think differently about energy and how it's used. My daughter is 12 and my son is 19, and one of the things you can see in their eyes, in the way they behave and the way they think about the world, is that my daughter grew up with plug-ins, hybrids, and electric vehicles as the norm, while my son grew up with internal combustion engines. My daughter really doesn't understand why people would want to leave their house to fuel their cars. She doesn't understand why public infrastructure is necessary. That all seems illogical to her, because she's used to the intrinsic convenience of home charging: why would you leave your home to go fuel your vehicle and then come back home? She doesn't understand that way of thinking. One of the things I really encourage people to do, as they're thinking about the future, is to try not to see it so much through the constraints we currently have, but to see it as a chance to be part of the innovation team that's designing for something different. That means, I think, seeing things the way Bill Gates and Steve Jobs and all of those extraordinary people did when they looked at the way we shared information and said, oh, we can do this better and faster. We have to do that with energy. If we do that with energy, I think we're all going to be exactly where we want to be.

MARK: All right. That's great stuff. Tell us a little bit about the work you do for the Stimson Center.

ELISE: At the Stimson Center, I'm on the board of directors. I'm always going to encourage people to think about participating in the not-for-profit side of the world as well as the for-profit side as we build this new economy. You're going to see a blending of those. Here's a perfect example: under the new EPA program to allocate $27 billion to national not-for-profits to drive the financing of the new economy, there's this belief structure that not-for-profits should be the recipients of these funds. That's not the way we thought about things a decade ago. No one stood up and said, yeah, let's give our money to not-for-profits or NGOs or to states. But what we've learned, based on great data, is that the allocation of federal and state capital makes a huge difference. Getting a DOE grant makes a huge difference to your future. And that non-dilutive capital, capital that doesn't have to be repaid the way a traditional loan or traditional equity investment would, is transformational for how companies and entrepreneurs advance and succeed. The Stimson Center is a not-for-profit, nonpartisan think tank that focuses on advancing a safer, more peaceful, more prosperous future. I'm part of the Stimson Center and a co-founder of the Alliance for a Climate Resilient Earth, which is designed to allow some of the leading institutions on earth, the national labs, universities, and corporations, to come together and talk about strategies for bringing a sustainable supply chain, better technology, and ESG thinking into their systems and structures. We do that because we believe convening makes a difference. We believe that if you don't give people a place to talk about how they're working together, you don't spread the ideas as fast as you otherwise could. That's my reason for being at the Stimson Center.
The underpinnings of that, and my decision to participate at the Stimson Center, came from work being done at Brookings. Some of my favorite science out there, if you haven't looked at it, is the geography of prosperity work from the Hamilton Project at Brookings. I'm going to encourage you to go there and take a look at how we build prosperous communities: places where you'd want to live, and where your students would want to live and raise their children. I think it's really important to see what the fundamental underpinnings of prosperity are. They're education, innovation, and diversity. That's an oversimplification, but only slightly, and that's where we need to get to.

MARK: Right. Great, thank you. So let's take it down a level, to the blood and guts of this. Can you give students a glimpse into how a simple structured finance transaction might work?

ELISE: Yeah, so let's talk about capital stacks, right? Just money: how does money help you advance? Ten or 20 years ago, if you were a new company, you went out and got equity. You built your first things, and then your second things and your third things, and hopefully they worked. Then maybe you went to a different bank and got some working capital or a line of credit to fund your business. And then you went out to a third bank to get project finance, which was mostly blended equity and debt, but much larger than what you started with in venture capital. Today, many of those lines are blurred. They just are, particularly for clean energy, climate solutions, climate tech, and clean tech companies. It's very rare that they build a company and then go out and have a project. Usually, the company's success depends on having a project that works, and that's particularly true for fusion and fission. If you don't have a reactor, if you don't have a tokamak, you don't exist. What we've started to do, and some of this is as recent as a year ago, some of it as recent as seven years ago, is redesign the capital allocation to better suit the companies, as opposed to forcing the companies to go out and do different things. And now there are absolutely great groups of investors out there. I'm going to give some examples. They are not meant to be talismanic; they're not meant to say you must look to these entities. But they are examples of investors that are providing the full capital stack in a different way. Today we would tend to have blended finance, where you get from the same investor some equity for your corporation and some finance for your first project, all at once. In Boston, you know, that looks like Creo Syndicate or Spring Lane Capital.
There are any number of great investors out there that are really focused on climate solutions and understand you've got to provide both. It often looks like great family offices that are really focused both on allocating the capital and on making sure that the sustainability metrics, the carbon reductions, the emissions reductions, the waste reductions, all of those alternative currencies, are being met as well. So today, if I were an entrepreneur, the thing I'd want to know most and best is that you get to design your own capital stack. You may fall within the traditional norms of the last 25 or 50 years, but you can also cross those norms as an early-stage company. I'm also going to suggest that every single early-stage company in our space is looking at the capital afforded by the federal government in the United States under the IRA and BIL, by state governments like California, New York, and Illinois, and by the green banks that are out there. There are 21 green banks in 16 states and DC. These companies are trying to think about new forms of relationships to move forward with aligned capital. Does that help?

MARK: Immensely. That's just great stuff for the students. When Elise says "capital stack," we're talking about the capital structure for your project. That's important: students do a project, that's the whole course, and the capital structure is a very important piece of how it all fits together. So feel free not to stay strictly in the debt/equity realm; you can fuse them, as Elise is saying, and if you're so inclined, you can even treat the investment tax credit as a form of capital.

ELISE: I absolutely agree with you. I think the LCFS, the California systems for supporting innovative technologies and projects, and the federal systems are critically important, and they are forms of capital. Let's just call capital what it is. We're just talking about money here, right? What we're really talking about is: when you build your first project, who is willing to come in and give you money to be able to do it? If you think about the different spaces, in the nuclear space that almost can't be done without the Department of Energy. In the solar space, if you're doing it on farms, it really shouldn't be done without the USDA. If you're doing it in a traditional industrial context, the Department of Energy or the tax incentive systems. If you're doing it in California, the LCFS system. If you start to think about those forms of capital, of money and/or incentives, which are surrogates for capital, then all of a sudden you can get to a capital stack, or a money stack, that is more than 50 to 70% of what you need to do your first project. If you can do that with government money, which is customarily given to you without an expectation of a return on that investment, then you can build a project with a lower risk of failure and a higher return to your investors who are seeking a return on investment. So projects should go more easily, better, and faster, and in fact, in my experience, they do. The term we use is leverage, straight and simple. That allows us to lever things up and get the returns on the equity higher. But I would also say that in this world, it is now reasonable to expect private capital to be more reasonable in its expectations about a return on investment. So the wealth transfer from the federal government should go in part to the company and in part to its investors.
I would not ordinarily want to see 100% of the non-dilutive capital that the US government or states are providing go to investors, because that's too much. Part of the reason we have project finance is that it should carry a lower IRR, a lower return on investment, than traditional equity at the high-risk venture stage. Does that make sense? Or is that too much detail?

MARK: No, no, that's perfect. This is all great stuff. You mentioned project finance. Why is project finance such an important concept when it comes to funding energy projects?

ELISE: Yeah. Essentially, it's the whole ball of wax. It is the center of energy projects. In order to finance projects, we have to come up with a structure, a committed line of capital, and a repayment system that allow people to believe their money is not being thrown away. It's that simple. Project finance does that by providing a security interest, a legal right with respect to some of the equipment that's being constructed. If you think about solar, for instance, 70% of the typical cost of a solar installation is in equipment that can be repurposed, so there's downside protection for the investors. That's why many of the solar agreements you'll see talk about rights in case the project does not go forward. Part of the complexity of project finance, and part of the fun of it from our perspective, is that a lot of what you think about is how you get the electrons out, get the electrons paid for at the right price, and then return value to your investors while reducing risk. That's the whole ball of wax.
For all of your students who are going to structure these transactions: if you can reduce them to their simplest formula, it becomes much easier to design them, get people aligned, and strip out some of the complexity that I think sometimes makes these agreements less efficient than they could be.
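Elise's point that non-dilutive government money and debt let you "lever things up" can be made concrete with a little arithmetic. The sketch below is a hypothetical example, not a model from the interview or the readings: it assumes a $100M project earning $10M a year, debt at 6% paid as interest only, grants that are never repaid, and it ignores taxes, depreciation, and principal repayment. It computes a simple one-year return on whatever equity is left in the stack for three made-up capital structures.

```python
def return_on_equity(project_cost, annual_cash_flow, grant, debt, debt_rate):
    """Simple one-year return on equity for a hypothetical capital stack.

    Illustrative assumptions: grants are non-dilutive and never repaid, debt is
    interest-only, and taxes, depreciation, and principal repayment are ignored.
    """
    equity = project_cost - grant - debt
    if equity <= 0:
        raise ValueError("grant plus debt must leave some equity in the stack")
    interest = debt * debt_rate
    # Equity holders receive whatever cash flow is left after paying interest.
    return (annual_cash_flow - interest) / equity


if __name__ == "__main__":
    cost, cash_flow = 100.0, 10.0  # hypothetical $M project earning $10M per year
    stacks = {
        "all equity": (0.0, 0.0),
        "60% debt": (0.0, 60.0),
        "30% grant + 40% debt": (30.0, 40.0),
    }
    for label, (grant, debt) in stacks.items():
        roe = return_on_equity(cost, cash_flow, grant, debt, debt_rate=0.06)
        print(f"{label:>22}: return on equity = {roe:.1%}")
```

Under these assumptions the all-equity case returns 10.0%, 60% debt lifts the return on equity to 16.0%, and a 30% grant plus 40% debt lifts it to about 25.3%. The effect works only because the project earns more (10%) than the debt costs (6%); if the cash flow fell below the interest bill, the same leverage would magnify losses instead.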

MARK: Right, right. Exactly. I really appreciate that; very enlightening. Are there any policy levers you'd like to see pulled to move the transition along, at either the state or the federal level?

ELISE: You know, I don't litigate very often, but I have litigated, including in the United States Supreme Court, in favor of a new economy and carbon, or, the way I'd really say it, accurate calculation of adverse impacts and ways of reducing those impacts. Because if innovation means anything, it means doing more with less, not doing less with more. We have hit our ecological limits, and less with more is no longer an option. So the question is, how do we have a consensus discussion about what doing more with less looks like? I'm open to many different ways of doing that, but while we have the dollar as our fundamental currency in the United States, it seems to me that we should put a price on impacts. So I like all forms of carbon tax; I think they work fairly reasonably. There is something called an internal carbon price, and most large corporations actually already do this internally. So there are surrogates in place, because we don't have a national mandatory carbon market, a carbon tax, or another system for assessing and valuing projects, particularly clean energy projects that outperform their peers by having lower impacts. So, from my perspective, I would set up three forms of value. First, I would have a capacity factor, or installed capacity value, nationwide. Are you there? Are you reliable? Can you provide power when needed? I would put a price on that. Second, I would put a price on the reduced impacts associated with your project. Third, and no one talks about this, and it's probably slightly out there, I would put a security framework around the energy resource and what it's providing. To the extent that it's likely to experience much lower supply chain disruption, to the extent that it can work in isolation and readily integrate its electrons within the grid, or sell its fuels into an existing system for transporting fuels, I would put a high premium on that, because I think we as a globe have begun to experience significant disruptions that we didn't expect: extreme weather, pandemics, and armed conflicts. I think we have to prepare better for all of those, and that means we have to start thinking in terms of security.

MARK: Yes. And resiliency of the supply chain, obviously; I think that's what you're getting at there.

ELISE: Resiliency of the supply chains, and the ability to be deployed in isolation. Distributed power has an enormously high value. Redundancy, which we don't value at all, has an enormously high value to me. And I think what Europe has learned, thankfully with a very warm winter, is that you can't have overdependence on a single source of fuel.

MARK: Very true.

ELISE: Or a single source of electricity. I think it's time we got our hands around that. I'm a mathematical being; we've got to get our hands around that in a more mathematical way.

MARK: Right. Right, exactly. To take that conversation a step further, what about the notion of economic justice? A just transition for labor and capital, that kind of thing. Do you think there's maybe a place for a price tag on that as well? I'm bringing that into the conversation because you got me thinking about it.

ELISE: I'm a huge believer in equity and in valuing equity. Some years ago, my team and I designed what was, to my knowledge, the first human health metric that valued clean energy production by showing the avoided morbidity, mortality, lost work days, and healthcare costs associated with some forms of electricity. The idea was that if we're going to have a functional, prosperous, integrated society, we can't have a disproportionate burden of certain forms of disease. Increasingly, that discussion is being rephrased, and better, as equity. So I absolutely believe that a society that is healthier, on balance, will achieve more. For my MBA, I did a significant amount of work looking at the multigenerational effects of conflict. In particular, I looked at epigenetics, which is where, even though you and I fought in wars and our children did not, our children nonetheless experience increased rates of depression and related conditions because of what you and I experienced in battle. If you start to think about epigenetic effects and start to calculate them (this is a very early field, I don't want to suggest otherwise, and the science may prove out differently), right now what we believe is that conflict has a long tail. What we mean by that is that living under extreme circumstances affects not only the generation that experiences it but follow-on generations, in material ways. So I believe we have to think about diversity, equity, and inclusion as building a better society, one that is able to participate in a meritocracy. If you adversely affect some parts of the population disproportionately and significantly, that has a serious cost directly, but also in a multigenerational way. So I believe it should affect whether we wage war. I feel it should affect whether we have disproportionate negative impacts on certain portions of our population, and I believe we should do everything we can to remedy that.

MARK: Excellent, I appreciate that. Are there any other markets or conditions that are affecting project finance in a manner we might not have anticipated three to five years ago? Inflation?

ELISE: Absolutely. Absolutely. I'm going to say some of this is actually closer to home. I think the shift to electric vehicles is having a significant multiplier effect, not only on the way we drive and how we perceive that experience, but also on how electrified our homes are, where we allocate electrons, and what the future looks like. I think that we as a group, and I include myself in this, did not fully appreciate how quickly we would accelerate electrification of light vehicles. I think we have not fully appreciated how quickly we're going to need hydrogen for long-haul trucking and heavy vehicles, and what a transformation that's going to be in the way we fuel things and how we produce fuel. If you're using electrolyzers, that's not 3 miles below the earth's surface, right? All of a sudden you're going to start to see fuel production around you. That's going to be a very different structure.

MARK: Right. Right.

ELISE: Lastly, I think we haven't begun to think hard about food production and, in particular, you know, carbon capture, utilization, and sequestration. I think we're in what people refer to as hype cycle one, version 1.0, and you have many CCUS (that's the acronym) technologies, right? I think they are going to be nothing short of extraordinary in the near term in terms of how we manage for climate, for carbon, and for other air emissions. So it would not surprise me if three years from now we find that our ability to produce cementitious materials from CO2 has transformed the way we think of cement production, and therefore what we think about CO2. I would not be surprised to find that we go from thinking of CO2 as a waste to be avoided or sequestered to thinking of it as a feedstock to be repurposed and used efficiently. That's what I'm hopeful for.

MARK: Right. So what are the biggest challenges facing your business in today's energy markets?

ELISE: I think, you know, we're gladiators. Mark, you know this better than anybody else. The difficulty of being in a new, emerging space is that you often have to explain yourself; you have to educate; you have to surmount the lack of awareness, the lack of understanding, and the fear people have about new things. I always remind myself that there must have been a moment, I know there was a moment, when the CEO and the board of Smith Corona, the leading typewriter manufacturer of the '70s, were in a room with a consultant, an accountant, a law firm, and the CFO, and someone said, "There are these two little companies. One's called Apple, or some fruit. The other does the stuff that's used to make the Apple thing work, and it's called Microsoft. Should we buy them?" And the price on those guys was what? Maybe five or ten million dollars? And the board of Smith Corona, which had a typewriter in every office and many homes, decided on balance that they didn't need to. That means somebody sat down in a room, thought about it, and said, "We've got auto-correct." I'm not criticizing in any way, shape, or form. I think every single one of us makes those decisions every day, and we have to make fewer of them. We have to be open to and aware of the possibilities for change. I think the hardest thing is to get up every morning and think, how do we best advance something really novel and maybe a little scary? Because I work so much in nuclear, in fission and fusion, I think it's easy to be more afraid than excited. I feel for the Wright brothers, and I feel for folks redesigning hydrogen pipelines. And the question is, with a nod to the extraordinary Amos Tversky and Danny Kahneman, how do we get better at behavioral economics? How do we get better at communicating probabilities to human beings? And how do we make more rational and informed decisions sooner, together?

MARK: Right. All right, great stuff. So what do you think the major roadblocks in the transition are at this point?

ELISE: I think it's easy for people to talk about the companies. I don't really see that. I really think it's us. It's so clear, when we vote with our dollars and with our preferences, how quickly we can transform things. Going from Ma Bell to an iPhone is a nontrivial change in human development, and in human preferences. I think we have to get better at working together and be less fearful about the new world. And I don't think we have 30 years to do it. That means we have to get excited about solar on our roofs, and we have to get excited about better use of water in our homes. If we could change one thing, I would eliminate planned obsolescence. I think that would be most transformational: a government that says, to hell with crap, when you buy something that you plug in, it has to work for a generation. We can no longer afford white goods waste, tire waste, all of those things that are readily fungible; fast fashion, all of that stuff, should have a significant tax associated with it. Then, can you imagine? You would start to focus on the companies that actually do more with less and make better products. When people join my team, I make them spend at least an hour with En-ROADS. If you haven't done MIT's En-ROADS, if you haven't taken the time to simulate and solve climate change for yourself, what you really see when you work in En-ROADS is that it takes a contribution from the entire economy. It's not just about electrons, or just about fuels, or just about food, or just about carbon capture, utilization, and sequestration. If you start to see that, then you start to look around yourself and say, okay, I'm not going to use plastics anymore; okay, I am going to work to reduce my footprint; okay, I'm going to make sure I demand better products that are more efficient. And as you start to make those decisions, I think you really start to see amplification around change.

MARK: Right. Right. So what do you think are the most important things our students should take away from this discussion?

ELISE: I think they are at an extraordinary moment. I can liken it only to the 1920s and the beginnings of the automotive age and the age of flight, or to the late '60s and '70s, with the publicity around space travel and the beginnings of the computing age. We are in a transformational age around our impacts, and reducing our impacts in a way that allows us to have more equitable, more functional, longer-lived prosperity. And I believe that every person's thinking needs to come into play. If you have a great idea, I don't care whether it's B to B or B to C, business to business or business to consumer. I don't care whether it's in food, fuel, or electrons; I don't care whether it's on the policy side, the money side, or the technology side. We need every great brain thinking about how to do this better. And I believe that in this economy there is a place for everybody to advance reasonably on the basis of excellence, and that there's an open possibility to really change the world around you. And Mark, you know, I don't know where you're from, but again, I'm a small, middle-aged Italian woman. To some degree, I was the first of my generation in my family to go to private school, to be able to be a lawyer, to get my MBA, to work in a highly technical field. I know there's a tendency to focus on barriers, and I recognize that economics matter, experience matters, and role models matter. They all matter. I'm not trivializing how high the burdens are. But if every morning you can sort of ignore all of the barriers and get up with the belief that the world is your oyster, as they say (I'm vegan, so I don't particularly like that metaphor), if you can say, I'm going to look at the world and ignore the barriers, then I think being a little hard-headed, a little resilient, and a little immune to criticism and limits is the best advice I can give anybody. And forge ahead.

MARK: Really appreciate that. Anything else you'd like to add, Elise?

ELISE: No, I always say the same thing, which is that you and I are always happy to talk with anybody who wants to make their way forward in this space. They can reach out to you, they can Google and find me. Our mission and obligation is to be able to enable the next generation. And when I say obligation, I really mean obligation. Folks should treat us as ready, willing, and able to spend the time to talk. We welcome that.

MARK: Yes, exactly. Thank you for that, Elise, and really, really appreciate your time today. Thank you.

ELISE: It's an honor and a pleasure. Be well. Thank you.

- M.G. Morgan, et al., "The U.S. Electric Power Sector and Climate Change Mitigation," Section II (pp. 20-26) [26]

- Business Week, "Debt and Equity Financing" [27] (This is an article that is geared more towards small businesses but has good concise definitions of "debt" and "equity.")

- G. Krellenstien, "Transmission Financing" [28] (This presentation presents a clear and frank look at the world of financing energy projects.)

- A primer on "Project Finance" [29] written by HSBC.

- In Canvas, registered students can access the following: Groobey, C, et al. (2010). Project Finance Primer for Renewable Energy and Clean Tech Projects. Wilson Sonsini Goodrich & Rosati Professional Corp.

What is due for Lesson 3?

This lesson will take us one week to complete. Please refer to the Course Calendar for specific due dates. Specific directions for the assignments below can be found within this lesson.

- Complete all assigned readings and viewings for Lesson 3

- Project work: Preliminary Project Charter

- Project work: Preliminary Stakeholder Register

Questions?

If you have any questions, please post them to our Questions? discussion forum (not email). I will not be reviewing posts there; instead, I encourage you to work through your questions as a cohort in that space. If you still need assistance after working with your cohort, please reach out to me directly: I am always happy to get on a one-on-one call or, even better, a call with a group of you.

Financing Investment Projects: An Introduction

A lot of what we will be studying in this lesson falls under the umbrella of "corporate finance," even though our focus is actually individual energy projects, not necessarily the companies that undertake those projects. Still, there are a number of parallels, and many concepts about how companies should finance their activities are directly relevant to the analysis of individual projects. After all, like companies as a whole, individual projects have capital, staffing, and other costs that need to be met somehow. A company can sometimes be viewed as simply a portfolio of project activities; similarly, an individual project can be viewed as equivalent to a company with one single activity. (Following deregulation in the 1990s, a number of major energy projects, such as power plants, were actually set up as individual corporate entities under a larger "holding company.") Much of the emphasis in the corporate finance field is on how companies should finance their various activities. (For example, in the readings and external references, you will see many mentions of "target" financial structures.) That isn't really our focus; we are more concerned with understanding the various options that might be available to finance project activities. The "right" financing portfolio is ultimately up to the individuals or companies making those project investment decisions.

Project financing options are numerous and sometimes labyrinthine. You may not be surprised that lawyers play an active and necessary role (sometimes the most active role) in structuring financial portfolios for a project or even an entire company. While individual finance instruments span the range of complexities, the basics are not that difficult. For an overview, let's go back to the fundamental accounting identity:

$$\text{Assets} = \text{Liabilities} + \text{Owner Equity}$$

The balance sheet for any company or individual project must obey this simple equation. So, if an individual or company wants to undertake an investment project (i.e., to increase the assets in its portfolio), then it needs some way to pay for these assets. Remembering the fundamental accounting identity, if Assets increase, then some combination of Liabilities and Owner Equity must increase by the same dollar amount. Herein lies the fundamental tenet of all corporate and project finance: financing activities that increase Assets must be undertaken either by taking on more debt (which increases total Liabilities) or by bringing in project partners with an ownership stake (which increases total Owner Equity).

Hence, all projects must be financed through some combination of "debt" (basically long-term loans by parties with no direct stake in the project other than the desire to be paid back) and "equity" (infusions of capital in exchange for an ownership stake or share in the project's revenues).
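To make the accounting identity concrete, here is a minimal Python sketch, assuming a hypothetical firm and project with made-up dollar figures. The `BalanceSheet` class and `finance_new_asset` function are illustrative names, not part of any library: they add a new asset, split its cost between new debt and new equity, and confirm that Assets still equal Liabilities plus Owner Equity.

```python
from dataclasses import dataclass


@dataclass
class BalanceSheet:
    """Toy balance sheet for a company or a single project, in dollars."""
    assets: float
    liabilities: float
    owner_equity: float

    def is_balanced(self, tol: float = 1e-6) -> bool:
        # Fundamental accounting identity: Assets = Liabilities + Owner Equity
        return abs(self.assets - (self.liabilities + self.owner_equity)) <= tol


def finance_new_asset(bs: BalanceSheet, cost: float, debt_share: float) -> BalanceSheet:
    """Return a new balance sheet after acquiring an asset worth `cost`.

    `debt_share` is the fraction of the cost that is borrowed (raising Liabilities);
    the rest comes from equity partners (raising Owner Equity).
    """
    if not 0.0 <= debt_share <= 1.0:
        raise ValueError("debt_share must be between 0 and 1")
    new_debt = cost * debt_share
    new_equity = cost - new_debt
    return BalanceSheet(
        assets=bs.assets + cost,
        liabilities=bs.liabilities + new_debt,
        owner_equity=bs.owner_equity + new_equity,
    )


# Hypothetical numbers: a firm with $50M of assets takes on a $10M project,
# borrowing 75% of the cost and raising the other 25% from equity partners.
before = BalanceSheet(assets=50e6, liabilities=30e6, owner_equity=20e6)
after = finance_new_asset(before, cost=10e6, debt_share=0.75)
print(after.is_balanced())  # True
```

Whatever value of `debt_share` is chosen, the identity still holds; only the split of the new financing between Liabilities and Owner Equity changes.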

The following video introduces debt and equity in a little more detail. The article from Business Week [27], while it goes more into the specifics for small businesses than our purposes require, also has a nice overview of debt and equity concepts.

Video: Debt and Equity Financing (4:51)

MATT ALANIS: Welcome to Alanis Business Academy. I'm Matt Alanis. And this is an introduction to debt and equity financing.

Finance is the function responsible for identifying the firm's best sources of funding, as well as how to best use those funds. These funds allow firms to meet payroll obligations, repay long-term loans, pay taxes, and purchase equipment, among other things. Although many different methods of financing exist, we classify them under two categories, debt financing and equity financing.

To address why firms have two main sources of funding, we have to take a look at the accounting equation. The accounting equation states that assets equal liabilities plus owner's equity. This equation remains constant, because firms look to debt, also known as liabilities, or investor money, also known as owner's equity, to run operations.

Now let's discuss some of the characteristics of debt financing. Debt financing is long-term borrowing provided by non-owners, meaning individuals or other firms that do not have an ownership stake in the company. Debt financing commonly takes the form of taking out loans and selling corporate bonds. For more information on bonds, select the link above to access the video "How Bonds Work." Using debt financing provides several benefits to firms. First, interest payments are tax deductible. Just like the interest on a mortgage loan is tax-deductible for homeowners, firms can reduce their taxable income if they pay interest on loans. Although the deduction doesn't entirely offset the interest payments, it at least lessens the financial impact of raising money through debt financing. Another benefit to debt financing is that firms utilizing this form of financing are not required to publicly disclose their plans as a condition of funding. This allows firms to maintain some degree of secrecy so that competitors are not made aware of their future plans. The last benefit of debt financing that we'll discuss is that it avoids what is referred to as the dilution of ownership. We'll talk more about the dilution of ownership when we discuss equity financing.

Although debt financing certainly has its advantages, like all things, there are some negative sides to raising money through debt financing. The first disadvantage is that a firm that uses debt financing is committing to make fixed payments, which include interest. This decreases a firm's cash flow. Firms that rely heavily on debt financing can run into cash flow problems that can jeopardize their financial stability. The next disadvantage to debt financing is that loans may come with certain restrictions. These restrictions can include things like collateral, which requires a firm to pledge an asset against the loan. If the firm defaults on payments, then the issuer can seize the asset and sell it to recover their investment. Another restriction is what's known as a covenant. Covenants are stipulations, or terms, placed on the loan that the firm must adhere to as a condition of the loan. Covenants can include restrictions on additional funding, as well as restrictions on paying dividends.

Now that we've reviewed the different characteristics of debt financing, let's discuss equity financing. Equity financing involves acquiring funds from owners, who are also known as shareholders. Equity financing commonly involves the issuance of common stock in public and secondary offerings or the use of retained earnings. For information on common stock, select the link above to access the video "Common and Preferred Stock." A benefit of using equity financing is the flexibility that it provides over debt finance. Equity financing does not come with the same collateral and covenants that can be imposed with debt financing. Another benefit to equity financing is that it does not increase a firm's risk of default like debt financing does. A firm that utilizes equity financing does not pay interest. And although many firms pay dividends to their investors, they are under no obligation to do so.

The downside to equity financing is that it produces no tax benefits and dilutes the ownership of existing shareholders. Dilution of ownership means that existing shareholders' percentage of ownership decreases as the firm decides to issue additional shares. For example, let's say that you own 50 shares of ABC Company. And there are 200 shares outstanding. This means that you hold a 25% stake in ABC Company. With such a large percentage of ownership, you certainly have the power to affect decision making. In order to raise additional funding, ABC Company decides to issue 200 additional shares. You still hold the same 50 shares in the company. But now there are 400 shares outstanding, which means you now hold a 12 and 1/2% stake in the company. Thus, your ownership has been diluted due to the issuance of additional shares. A prime example of the dilution of ownership occurred in the mid-2000s when Facebook co-founder Eduardo Saverin had his ownership stake reduced by the issuance of additional shares.

This has been an introduction to debt and equity financing. For access to additional videos on finance, be sure to subscribe to Alanis Business Academy. And also remember to Like and Share this video with your friends. Thanks for watching.
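The dilution arithmetic from the ABC Company example in the video can be checked with a short Python sketch. The `ownership_stake` helper is a hypothetical name used only for illustration; the share counts are the ones quoted in the video.

```python
def ownership_stake(shares_held: int, shares_outstanding: int) -> float:
    """Fraction of the company owned by a holder of `shares_held` shares."""
    if shares_outstanding <= 0:
        raise ValueError("shares_outstanding must be positive")
    return shares_held / shares_outstanding


# Share counts from the ABC Company example in the video.
held = 50
outstanding_before = 200
new_shares_issued = 200

stake_before = ownership_stake(held, outstanding_before)
stake_after = ownership_stake(held, outstanding_before + new_shares_issued)
print(f"Stake before issuance: {stake_before:.1%}")  # 25.0%
print(f"Stake after issuance:  {stake_after:.1%}")   # 12.5%
```

The investor still holds 50 shares; only the denominator grows, which is exactly what "dilution of ownership" means.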

Debt and equity each have costs. The cost of debt is pretty explicit: lenders typically charge interest. The cost of equity is a little more complex since it represents an "opportunity cost." If an equity investor (like a potential holder of stock) buys into Acme PowerGen Amalgamated, that investor is forgoing the returns that it could have earned from some other investment vehicle. The attitude of most investors, in the immortal words of Frank Zappa, is "we're only in it for the money." Those forgone returns represent the opportunity cost of investing in Acme PowerGen Amalgamated. If we weight each of these costs by the share of a project that is financed through debt and through equity, respectively, we get a number known as the "weighted average cost of capital," or WACC. The general equation for WACC is:

$$\text{WACC} = \frac{E}{D+E}\, r_E + \frac{D}{D+E}\, r_D\,(1 - T)$$

where $D$ and $E$ are the amounts of debt and equity financing, $r_D$ is the cost of debt, $r_E$ is the cost of equity, and $T$ is the income tax rate.

Here, the "costs" are generally in terms of interest rates or rates of return. So, a company facing a 5% annual interest rate would have a "cost of debt" equal to 5% or 0.05. We'll get into these pieces in more depth, and will explain the strange tax term in the WACC equation after we gain more of an understanding of debt and equity, and how the costs of debt and equity might be determined.
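As a preview, the sketch below computes WACC in Python using the commonly cited textbook form, in which the costs of debt and equity are weighted by their shares of total financing and the cost of debt is multiplied by (1 - tax rate) because interest is tax-deductible. All of the figures (the debt and equity amounts, the 12% expected equity return, and the 21% tax rate) are hypothetical assumptions chosen for illustration, and `wacc` is an illustrative helper, not a standard library function.

```python
def wacc(debt_value: float, equity_value: float,
         cost_of_debt: float, cost_of_equity: float, tax_rate: float) -> float:
    """Weighted average cost of capital as a decimal rate (0.07 means 7%).

    Assumes the standard form E/V * r_E + D/V * r_D * (1 - T), where V = D + E.
    """
    total = debt_value + equity_value
    if total <= 0:
        raise ValueError("total financing must be positive")
    debt_weight = debt_value / total
    equity_weight = equity_value / total
    # Interest is tax-deductible, so the effective cost of debt shrinks by the tax rate.
    after_tax_cost_of_debt = cost_of_debt * (1 - tax_rate)
    return equity_weight * cost_of_equity + debt_weight * after_tax_cost_of_debt


# Hypothetical project: $60M of debt at 5% interest, $40M of equity whose
# investors expect a 12% return, and a 21% income tax rate.
rate = wacc(debt_value=60e6, equity_value=40e6,
            cost_of_debt=0.05, cost_of_equity=0.12, tax_rate=0.21)
print(f"WACC: {rate:.2%}")  # WACC: 7.17%
```

With these inputs the 5% interest rate becomes an after-tax cost of debt of 3.95%, which is one way to see why the tax deductibility of interest matters when comparing debt and equity.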

Equity Financing

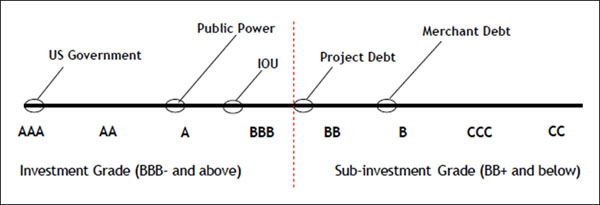

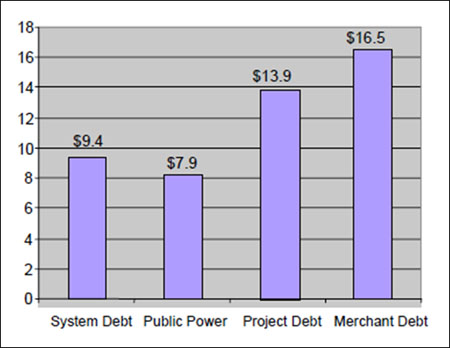

The term "equity" in corporate or project finance jargon indicates some share of ownership in a company or project, i.e., some level of entitlement to a slice of the revenues brought in by the company or project. There are different priority levels of this entitlement: typically, operating costs (including, in some cases, borrowing costs) must be paid before equity investors can get their slice of the net revenues. There are also multiple priority levels of equity investors, which determine who gets paid first if profits are scarce.
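The idea of priority levels can be sketched as a simple payment "waterfall" in Python. This is a simplified illustration under stated assumptions: the claimant classes and dollar amounts (in millions) are hypothetical, each class is paid in full before the next receives anything, and common equity is treated as the residual claimant that keeps whatever is left. Real financing agreements can define priorities in more complicated ways.

```python
from typing import Dict, List, Tuple


def distribute(net_revenue: float, claims: List[Tuple[str, float]]) -> Dict[str, float]:
    """Pay claims strictly in priority order until the money runs out.

    `claims` lists (claimant, amount owed) pairs, highest priority first.
    Use float("inf") as the amount owed for a residual claimant.
    """
    if net_revenue < 0:
        raise ValueError("net_revenue must be non-negative")
    remaining = net_revenue
    payouts: Dict[str, float] = {}
    for claimant, owed in claims:
        paid = min(owed, remaining)  # pay in full, or whatever is left
        payouts[claimant] = paid
        remaining -= paid
    return payouts


# Hypothetical annual claims, in millions of dollars, highest priority first.
claims = [
    ("operating costs", 6.0),
    ("debt service", 2.5),
    ("preferred equity", 1.0),
    ("common equity", float("inf")),  # residual claimant: keeps whatever remains
]

good_year = distribute(12.0, claims)
lean_year = distribute(8.0, claims)
print(good_year)
print(lean_year)
```

In the lean year, debt service is only partly covered ($2.0 million of $2.5 million) and neither class of equity investor receives anything; in the good year, common equity collects the $2.5 million left after every higher-priority claim is paid.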