Learner’s Guide to Geospatial Analysis


Preface

Why this publication?

The WMD Commission concluded that “the Intelligence Community was dead wrong in almost all of its pre-war judgments about Iraq's weapons of mass destruction” (Iraq Survey Group Final Report: Weapons of Mass Destruction (WMD), September 30, 2004). This failure, together with the failure to appreciate the human-geographic implications of operations in Afghanistan and to understand the impact of New Orleans's human geography on Katrina rescue efforts, raises serious questions about our preparation of those who carry out geospatial analysis or, at least, of those who inform our leaders of that analysis. As educators, we share in these failures, since we educated the analysts who failed.

The ultimate goal of this publication is to help the geospatial analyst produce accurate intelligence which saves lives, improves government, serves law enforcement, and helps business. Good geospatial intelligence separates the important from the unimportant and conceptualizes a spatial order out of apparent disorder. Such analysis is not innate, and it is subject to many pitfalls: uniquely spatial fallacies, biases, confusion between cause and effect, technical necessities, group-think, and analyst failings. Even the most experienced geospatial analyst will sometimes fall into one of these pitfalls. The truly good geospatial analyst knows what the pitfalls are and works toward objective and accurate analysis. Geospatial analysts should be aware of their spatial reasoning processes. Quoting Richards Heuer [1] (p. 31), "they should think about how they make judgments and reach conclusions, not just about the judgments and conclusions themselves."

Academia almost exclusively teaches the scientific method as a method to create knowledge. But, it seems the scientific method is seldom used in geospatial intelligence work. What method or approach is used? The intuitive method seems to be the primary method for producing geospatial intelligence which:

- has the well-known tendency to permit biases to influence the analytic result;

- is difficult for other analysts to reproduce; and

- is difficult to teach since the results are based on intuition which comes with experience.

Using the scientific method has its limitations, since the scientific method starts with a single hypothesis. As such, some suggest it is not appropriate for developing intelligence (Heuer, 2009). As Don L. Jewett (2007) points out, the problem with starting with a single hypothesis is the emotional attachment to the hypothesis and the temptation to use the results that contradict a less desired hypothesis. Other methodologies exist that provide analytic means to arrive at an accurate analytic result. This is not an attempt to diminish the importance of intuition and experience. Rather, it suggests an appropriate mixture of science and intuition as a means to produce good geospatial intelligence.

Chapter 1 - Introduction

Chapter 1 Overview

The Geospatial Intelligence Professional

The Geospatial Intelligence professional is a “knowledge worker” or “symbol analyst” (a term used by the U.S. Department of Labor) who carries out multi-step operations, manipulates abstract symbols, addresses abstract and complex ideas, acquires new information, and must remain mindful enough to recognize change. Successful knowledge work, like all work, requires study and practice. Professionalism in the area calls for broad experience and understanding of the entire Intelligence Community. The individual who is only interested in geospatial technology, as central as it is to the discipline, is not fully a Geospatial Intelligence professional. Nor is the technical expert in GIS or remote sensing ipso facto a Geospatial Intelligence professional.

What is Intelligence and What is Geospatial Intelligence?

What is Intelligence?

In his 2006 book, Intelligence: From Secrets to Policy, Lowenthal (p 8) defines intelligence in three ways:

- Intelligence as a process

A means by which certain types of information are required and requested, collected, analyzed, and disseminated, and as the way in which certain types of covert action are conceived and conducted.

- Intelligence as a product

A knowledge product resulting from analyses and intelligence operations themselves.

- Intelligence as an organization

Entities that carry out various functions for Intelligence.

Lowenthal points out that to the average person, intelligence is about secrets and spying. However, according to Lowenthal, this view of intelligence as primarily secrets misses the important point that intelligence is ultimately information about anything that can be known, regardless of how it is discovered. More specifically, intelligence is information that meets the needs of a decision maker and has been collected, processed, narrowed, and offered to meet those needs. That is to say, intelligence can be considered a specific subset of the broader category of information: all intelligence is information, but not all information is intelligence. A key point is that intelligence and the entire intelligence process respond to the needs of the decision makers. Lowenthal also points out that many think of intelligence in terms of government and/or military information. This is certainly a major use of intelligence, but political, business, social, environmental, health, espionage, terrorism, and cultural intelligence are also intelligence. Lowenthal states as a fundamental point that intelligence is not about truth (Lowenthal, p 6) and that it is more accurate to think of intelligence as "proximate reality." Intelligence analysts do their best to arrive at an accurate approximation of what is going on, but they can rarely be assured that their best analytic results are true. Therefore, the goal is "intelligence products that are reliable, unbiased, and free from politicization. In other words, to develop a product that is as close to the truth as it can be humanly possible to discern." (Lowenthal, p 6).

De Jure Definition of Geospatial Intelligence

De jure is a Latin term meaning "by law." It is commonly contrasted with de facto, which means "concerning the fact," that is, in practice but not necessarily ordained by law. The NIMA Act of 1996 establishing the National Imagery and Mapping Agency and the subsequent amended language in the 2003 Defense Authorization Act, as codified in the U.S. Code, govern the mission of the National Geospatial-Intelligence Agency (NGA). The de jure definition of Geospatial Intelligence is found in U.S. Code Title 10, §467:

The term "geospatial intelligence" means the exploitation and analysis of imagery and geospatial information to describe, assess, and visually depict physical features and geographically referenced activities on the earth. Geospatial intelligence consists of imagery, imagery intelligence, and geospatial information.

The moniker GEOINT has become associated with Geospatial Intelligence with a specific meaning and context. It has often been said that the 2003 renaming of NIMA to NGA recognized the emergence of geospatial information as an intelligence source in its own right, which is termed GEOINT. The term GEOINT connotes a source of intelligence like HUMINT, MASINT, COMINT, ELINT, SIGINT, and IMINT. GEOINT is uniquely multi-source in that it integrates and enriches information collected by the other INTs into a spatiotemporal context.

The de jure definition drives us to focus on Geographic Information Systems and digital remote sensing, since these technologies, as a substantial component of workflows such as TPED (Tasking, Processing, Exploitation, and Dissemination), heavily leverage spatial data handling and image processing technologies to transform geospatial data. However, there is a growing recognition that GEOINT “must move from an emphasis on data and analysis to an emphasis on knowledge” (Priorities for GEOINT Research at the National Geospatial-Intelligence Agency, The National Academies Press, 2006, P. 9). Here, the use of the term knowledge means the confident understanding of a subject with the ability to use it for a specific purpose appropriately. This is to say, geospatial knowledge creation involves much more than automated data handling and is a complex cognitive process involving perception, learning, communication, association and reasoning.

De Facto Definition of Geospatial Intelligence

We would like to suggest the following as an emerging definition of Geospatial Intelligence, which might carry the moniker of GeoIntel, as a means to guide the preparation of the geospatial professional:

Geospatial Intelligence is actionable knowledge, a process, and a profession. It is the ability to describe, understand, and interpret so as to anticipate the human impact of an event or action within a spatiotemporal environment. It is also the ability to identify, collect, store, and manipulate data to create geospatial knowledge through critical thinking, geospatial reasoning, and analytical techniques. Finally, it is the ability to ethically collect, develop, and present knowledge in a way that is appropriate to the decision-making environment.

In this definition, Geospatial Intelligence doesn’t just provide the means by which to answer the questions of what?, when?, and where?, but also how?, why?, and what is the significance? Central to this proposed definition is the notion that the best geospatial intelligence resource is an educated analyst and that intelligence is about nothing if not about “out-thinking” your opponent. For all the appropriate emphasis on technologies, methodologies, tools, and infrastructure, people still are the most precious resource.

Intelligence Cycle and Process

Intelligence Analysis in a Cycle

Analysis resides within the larger intelligence cycle. The intelligence cycle determines the daily activities of the Intelligence Community. It starts with the needs of the intelligence "consumers," such as policymakers, military officials, and other decision makers who need intelligence for their activities. These requirements are sorted and prioritized within the Intelligence Community and are used to drive the collection activities of the Intelligence Community. The cycle, as depicted in the figure below, is repeated until an intelligence requirement has been satisfied.

The Intelligence Cycle is a concept that describes the general intelligence process in a civilian or military intelligence agency or in law enforcement. The cycle is typically represented as a closed path of activities. Problems with a closed loop include an overall process that is no better than its weakest component and stove piping. In the traditional intelligence use of the term, stove piping keeps the output of different collection systems separated from one another. Stove piping prevents one discipline from cross-checking another, and it can bypass the regular analysis of raw intelligence by sending only raw intelligence that supports a particular position to the leadership.

Analysis is using information about the context of a situation, characterizing the known observables, and applying the appropriate statements of probability to anticipate future situations. Descriptions of the anticipated situations are developed from what may be inaccurate or deliberately deceptive information; therefore, the analyst must correlate the similarities among observations and develop a common "truth." A set of repeatable and useful problem-solving approaches is essential for analysts. Because of the nature of the intelligence problem and work, an analytic approach must be far more tolerant of deceptive information than the approach of a scientist performing an experiment. According to Richards Heuer [1], intelligence analysis involves incremental, iterative refinement.

Intelligence Process

The term "intelligence process" refers to the steps of the cycle. Intelligence, as practiced in the United States, is commonly thought of as having five steps. Lowenthal (2006, p 55) added two phases, giving seven phases of the intelligence process: (1) requirements, (2) collection, (3) processing and exploitation, (4) analysis and production, (5) dissemination, (6) consumption, and (7) feedback. The following paraphrases Lowenthal (p 55):

- Requirements.

Identifying requirements means defining those questions to which intelligence is expected to make a contribution. Requirements also means specifying the collection of certain types of intelligence. The impulse is to say that all policy areas have intelligence requirements, which they do. However, since intelligence capabilities are limited, priorities must be set.

- Collection.

Once requirements and priorities have been established, the intelligence is collected. Some requirements have specific types of collection; some may require several types of collection. Making these decisions is a key issue, as is the question of how much can or should be collected to meet each requirement.

- Processing and Exploitation.

Collection produces information which must undergo processing and exploitation before it can be regarded as intelligence and given to analysts. Conversion includes translation, decryption, and interpretation.

- Analysis and Production.

Analysis and production includes the integration, evaluation, and analysis of all available data, and the preparation of intelligence products, including quickly developed single-source, event-oriented reports and longer-term, all-source intelligence studies. "All-source" intelligence analysis is done exclusively by the CIA, DIA, and the State Department's Bureau of Intelligence and Research. All-source analysts complete a more thorough evaluation and assessment of the collected information by integrating the information from other classified and unclassified sources.

Significantly, according to Lowenthal, most discussions of the intelligence process end with the dissemination and the intelligence having reached the policy makers. However, Lowenthal bundles dissemination with consumption and adds feedback:

- Dissemination and Consumption.

Dissemination and Consumption are taken together by Lowenthal. The process of dissemination, or the process of moving intelligence from producers to consumers, is largely standardized, with consumption being assumed in the 5-step process. However, Lowenthal points out that policy makers are not pressed into action by the receipt of intelligence, and if and how they consume intelligence is key (Lowenthal p. 62).

- Feedback.

A dialog between intelligence consumers and producers should occur before and continue after the intelligence has been received. The analyst should have some sense of how well their intelligence requirements are being met and address any adjustments that need to be made. Feedback assesses the degree to which the finished intelligence addresses the needs of the intelligence consumer and will determine if further collection and analysis is required.

What is Intelligence Analysis?

Puzzles and Mysteries

The following discussion is a paraphrase of the RAND report Assessing the Tradecraft of Intelligence Analysis [3] (Chapter 2, pages 3-12). The intelligence cycle can be contrasted with the intelligence analytic cycle which, according to the RAND report, typically includes three forms of analysis:

- technical processing as a form of analysis

- single discipline analysis such as GEOINT

- all-source analysis

The distinction between the first two types and all-source analysis is being blurred because of the use of tools, such as GIS, to integrate multiple intelligence sources. As such, some suggest a continuum of analysis from the collection system at one end to analysis at the other. Along this continuum, there is a transition where the data is used to support analysis. According to RAND, past this transitional area, analysis splits into:

- puzzle-solving

- mystery-framing

According to RAND:

- A puzzle tests the ingenuity of the solver to use information. Here one pieces together the information in a logical way in order to come up with the solution, somewhat like the overlay process in GIS. Puzzle-solving in GIS involves pulling together many sources of data and information and, using that evidence, identifying new spatial patterns or trends and developing new knowledge.

- Mystery-framing includes political and societal questions related to humans. Anticipating human actions, e.g., where a terrorist will strike next, always involves subjective judgment, which is less certain and more prone to biases. The analytic logic is also significantly different for mysteries because there is no definitive solution. Mysteries can only be generally framed and made sense of, which suggests that the argument is as important as the evidence. In the geospatial realm, information is always lacking because of accuracy, age, detail, or relevance. Therefore, many geospatial intelligence questions are mysteries. Mysteries involving human perceptions benefit from experience.

Is Geospatial Intelligence Art or Science?

It should be no surprise that there are competing views of geospatial analysis. One school is that intuition, experience, and subjective judgment are key. Analysis here is an art, and non-quantitative methods predominate. Another school is that quantitative data and analysis using such tools as GIS are most relevant. Intelligence analysis here is science-like, and quantitative methods as applied in spatial analysis predominate. This controversy somewhat mirrors a long-standing debate in the intelligence community: whether good analysis depends largely on subjective, intuitive judgment (an art) or on systematic analytic methods (a science). Understanding this question is important when developing an effective approach to geospatial intelligence creation. To help understand these points of view, I will define the terms using the Merriam-Webster Collegiate Dictionary, tenth edition, as:

- Art - the conscious use of skill and creative imagination in the production of aesthetic objects.

- Science - knowledge or a system of knowledge covering general truths or the operation of general laws, especially as obtained and tested through the scientific method.

Interestingly, there are those who consider integrative geospatial data tools, such as those found in GIS, as primarily aids to intuition and experience-based analysis and not the application of quantitative analytic methods. This seems contrary to the technical capabilities GIS brings to geospatial intelligence. It is correct to say that there is no certain dividing line between art and science. Some contend there is no dividing line at all and a pure scientific approach to geospatial analysis is impossible. Some have seen the push toward a science-only perspective in GIS as a step backward. In this thinking, GIS’s models and analysis methods are not rich enough in geographical concepts and understanding to accurately reflect reality.

Geospatial intelligence is geospatial analysis, and geospatial analysis, at its core, is geography. Geography is both the conscious use of creative imagination in the representations of the earth and the science of developing general truths about the earth. For something to be automatable, it must be modeled and the facts (inputs) quantified. Since a model is a simplified abstract view of the complex reality, the model represents a limited set of rules which allows analysts to work out an answer if they have certain information. Quantifiability of the information is important because unquantifiable inputs cannot be tested, and thus unquantifiable results can neither be duplicated nor contradicted. However, we know that reliable models and data are not available for all analysis.

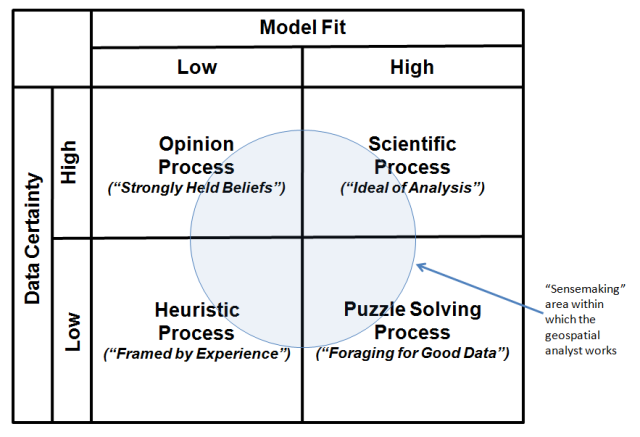

Pulling all of these thoughts together, the table in the image below categorizes the broad types of geospatial analysis. The upper right quadrant of the matrix identifies the ideal of GIS analysis as a Scientific Process in which there is good knowledge of the data and models surrounding an output. In the model, analysts understand the problem that confronts them and can take into account the key factors that bear on the problem. The notion of fixed-in-advance standard procedures typically plays an important role in such geospatial analysis.

However, many of the analytic tasks in geospatial intelligence fall outside of the scientific quadrant. Consider the Puzzle Solving Process (lower right) quadrant in which there is agreement on models, but disagreement on data. The notion of "foraging" for the data to solve the problem plays an important role in such analysis.

Analysis as an Opinion Process (upper left quadrant) is the opposite. In this analytic environment, there is agreement on data, but disagreement on model. Analysis is characterized by analysts involved in a struggle for influence, and decisions emerge from that struggle. This kind of analysis necessitates bargaining, accommodation, and consensus, as well as controversy. The bottom line is that conclusions are most often the result of bargaining between diverse and strongly held beliefs.

Intelligence analysis as a Heuristic Process (lower left quadrant) is the most contentious, with disagreement on data and models. Under these conditions, science and technology tools have significantly less direct relevance. Here, conclusions depend on parameters that change over the period the analysis is being made. As a consequence, the analytic process is experience-based. In the end, this is the framing of questions. They can only be framed, not solved, and thus the logic of argument and analysis is as important as the evidence.


The "sensemaking" area within which the geospatial analyst works is the Puzzle Solving Process area.

| Model Fit - Low | Model Fit - High |
|---|---|
| Opinion Process ("strongly held beliefs") Data Certainty: high | Scientific Process ("Ideal of Analysis") Data Certainty: high |
| Heuristic Process ("Framed by Experience") Data Certainty: low | Puzzle Solving Process ("Foraging for Good Data") Data Certainty: low |

Is geospatial intelligence an art or science? Analytic problems can fall into any of the four quadrants; you, the analyst, need to understand the problem solving environment and the nature of the problem solving process. The term “sensemaking” is used to describe the analysis process and incorporates traits associated with the classical definitions of both “art” and “science.” Sensemaking is more formally defined as the deliberate effort to understand events using an explanatory structure that defines entities by describing their relationship to other entities. Data elicit and help to construct the frame; the frame defines, connects, and filters the data.
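The four quadrants described above can be expressed as a small lookup. The following Python sketch is only an illustration: the names `AnalyticProcess` and `classify_problem` are hypothetical, and it assumes that agreement on models and agreement on data can each be reduced to a yes/no judgment, which real problems rarely allow.

```python
from enum import Enum


class AnalyticProcess(Enum):
    """The four quadrants of the analysis matrix (names are illustrative)."""
    SCIENTIFIC = "Scientific Process"
    PUZZLE_SOLVING = "Puzzle Solving Process"
    OPINION = "Opinion Process"
    HEURISTIC = "Heuristic Process"


def classify_problem(model_agreement: bool, data_agreement: bool) -> AnalyticProcess:
    """Map agreement on models and on data to a quadrant.

    Assumption: each dimension is treated as a simple yes/no judgment.
    """
    if model_agreement and data_agreement:
        return AnalyticProcess.SCIENTIFIC
    if model_agreement:
        # Agreement on models, disagreement on data: forage for good data.
        return AnalyticProcess.PUZZLE_SOLVING
    if data_agreement:
        # Agreement on data, disagreement on models: strongly held beliefs.
        return AnalyticProcess.OPINION
    # Disagreement on both: framed by experience.
    return AnalyticProcess.HEURISTIC
```

For example, `classify_problem(model_agreement=True, data_agreement=False)` returns `AnalyticProcess.PUZZLE_SOLVING`, the quadrant in which the analyst must forage for data.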

Chapter 2 - Structured Geospatial Analytic Method (SGAM)

Chapter 2 Overview

Analysts learn by doing, and the best analysts learn from their mistakes. However, mistakes in intelligence work are dreaded, and one never wants to hear the words "intelligence failure." Intelligence failures are often disastrous, and lives may be lost. It is important, therefore, to constantly work at improving the mind and never accept old habits of thinking. Methods of thought have evolved with respect to intelligence analysis, but they appear to have largely excluded geospatial analytics.

Dr. Rob Johnston, in his work Analytic Culture in the US Intelligence Community: An Ethnographic Study (2005), finds no baseline standard analytic method for the Intelligence Community. He also finds the validation of data is questionable, and there is much more emphasis on avoiding error than on in-depth analysis. Overall, his research suggests the need for serious study of analytic methods in the communities of practice.

It has also been my experience that there is no baseline standard analytic method for geospatial analysis. The most common practice is to develop a workflow. If the results are reviewed, the review is usually conducted as a limited peer review on the basis of previous workflows. This likely produces a bias toward confirming earlier views.

While we discuss critical thinking, the validation of input geospatial data is questionable. Dr. Rob Johnston also points out that none of the analytic agencies knows much about the analytic techniques of others, and there tends to be an overemphasis on writing and communication skills rather than on analytic methods.

Base Theory of SGAM

The Structured Geospatial Analytic Method (SGAM) is offered to solve the wicked problem of teaching the cognitive skills needed to approach geospatial analysis. The SGAM’s model is a first step utilizing a sequential process where progress is seen as flowing steadily through the steps. There are known disadvantages of such sequential process models. Foremost, the flow most probably does not represent the natural cognitive problem solving process. Further, the problems in one step are never solved completely, and many problems regarding a particular step arise after the step is completed. Acknowledging these limitations, it is our argument that the SGAM’s simple approach is necessarily more understandable for the novice and, therefore, more effective in teaching inexperienced analysts. Rather than what the master analyst sees as a creative problem solving chaos (which by definition is unrepeatable and therefore unteachable), the SGAM model provides a teachable structured approach for the inexperienced analyst: a model that progresses linearly through discrete, easily understandable and explainable steps.

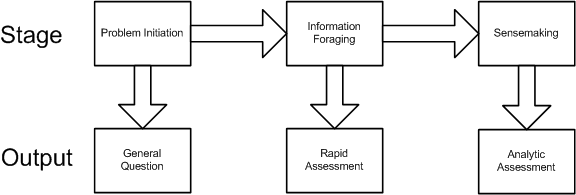

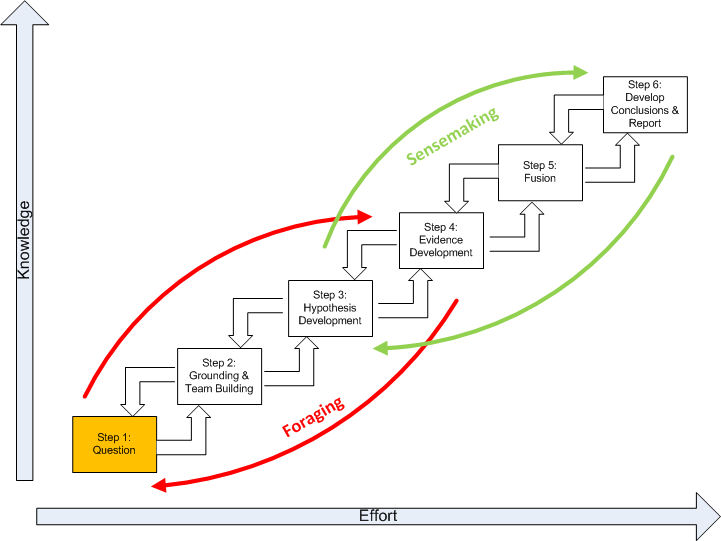

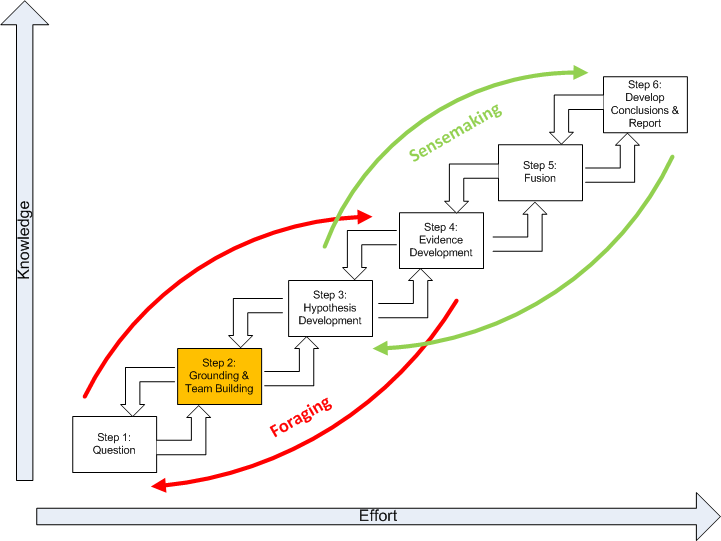

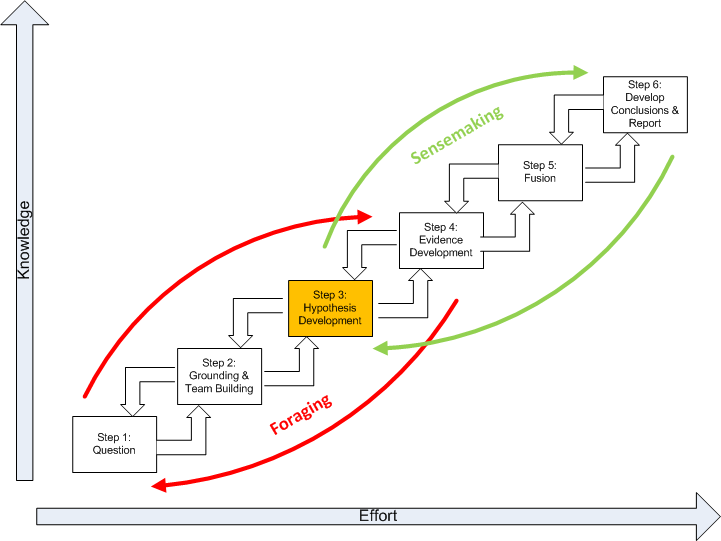

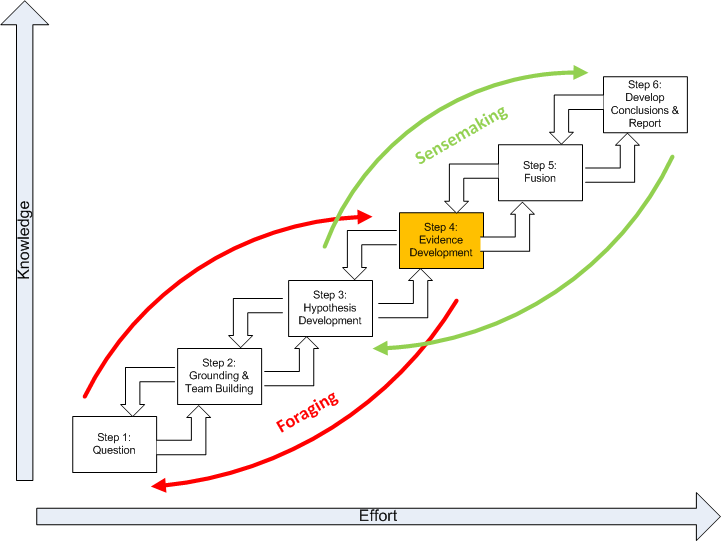

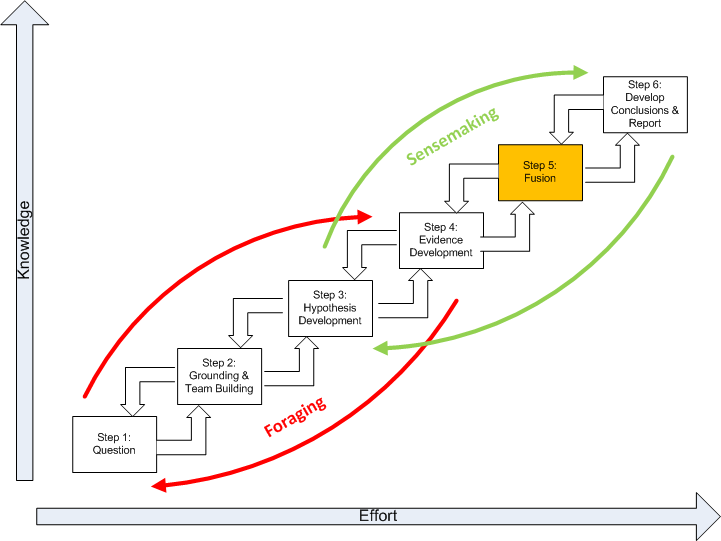

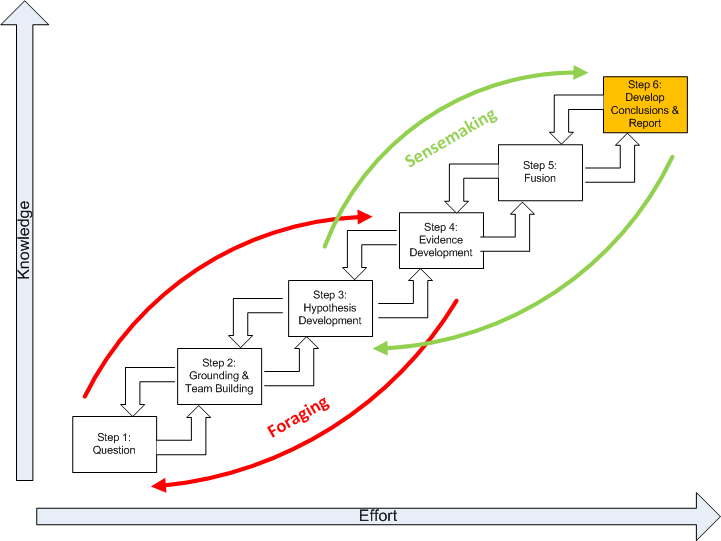

The method is based upon three stages and organized into two major loops. The three stages and associated outputs are:

Analytic Stage 1: Problem Initiation. This stage broadly outlines the general analytic question.

Analytic Stage 2: Information foraging. This stage refines the general question to understand the geospatial aspects and results in the development of a rapid assessment based on informal methods or experience, and employing a form of trial and error iteration.

Analytic Stage 3: Sensemaking. This stage results in the development of a detailed analytic assessment utilizing an established methodology.

The two major loops are:

- A foraging loop aimed at seeking information, searching and filtering it, and reading and extracting information.

- A sensemaking loop that involves iterative development of a mental model from the schema that best fits the evidence.

The foraging loop recognizes that analysts tend to forage for data by beginning with a broad set of data and then narrowing that set down into successively smaller, higher-precision sets of data before analyzing the information (Pirolli, 1999). The three foraging actions of exploring for new information, narrowing the set of items that have been collected, and exploiting items in the narrowed set trade off against one another under deadline or data overload constraints. It is important to note that much geospatial intelligence work never departs the foraging loop; it simply consists of extracting information and repackaging it without much actual analysis and is, in practice, a rapid assessment of the question.

Sensemaking is the ability to make sense of an ambiguous situation; it is creating situational awareness and understanding in situations of high complexity or uncertainty in order to make decisions. It is "a motivated, continuous effort to understand connections (which can be among people, places, and events) in order to anticipate their trajectories and act effectively" (Klein, G., Moon, B. and Hoffman, R.F. 2006. Making sense of sensemaking. IEEE Intelligent Systems, 21(4), 70-73). When geospatial intelligence analysis departs the foraging loop and completes the sensemaking process, this yields an analytic assessment.
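The explore, narrow, and exploit actions of the foraging loop can be sketched as successive filters over a collection of items. The Python below is a minimal illustration under stated assumptions, not a description of any particular tool: the `Item` record, the bounding-box and date filters used for narrowing, and the analyst-assigned `relevance` score used for exploiting are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Tuple


@dataclass(frozen=True)
class Item:
    """A hypothetical piece of collected information."""
    title: str
    lon: float
    lat: float
    observed: date
    relevance: float  # assumed 0-1 score assigned by the analyst


def in_bbox(item: Item, west: float, south: float, east: float, north: float) -> bool:
    """True when the item falls inside the area of interest."""
    return west <= item.lon <= east and south <= item.lat <= north


def forage(
    items: List[Item],
    bbox: Tuple[float, float, float, float],
    earliest: date,
    min_relevance: float,
) -> Tuple[List[Item], List[Item], List[Item]]:
    """Return the explored, narrowed, and exploited sets, each no larger than the last."""
    explored = list(items)  # explore: start with the broad set
    narrowed = [            # narrow: keep items in the area and time window
        i for i in explored
        if in_bbox(i, *bbox) and i.observed >= earliest
    ]
    exploited = [           # exploit: read only the most relevant items
        i for i in narrowed if i.relevance >= min_relevance
    ]
    return explored, narrowed, exploited
```

Under a deadline, an analyst might tighten `bbox` or raise `min_relevance` to shrink the final set, which is one way to picture the trade-off among the three actions.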

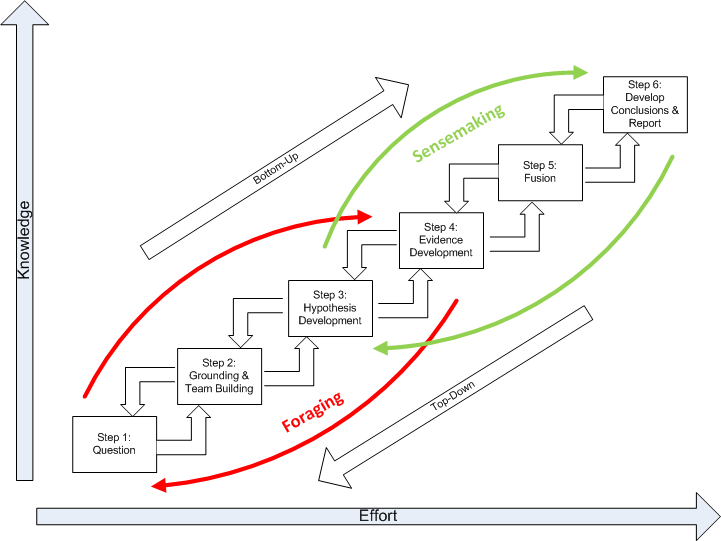

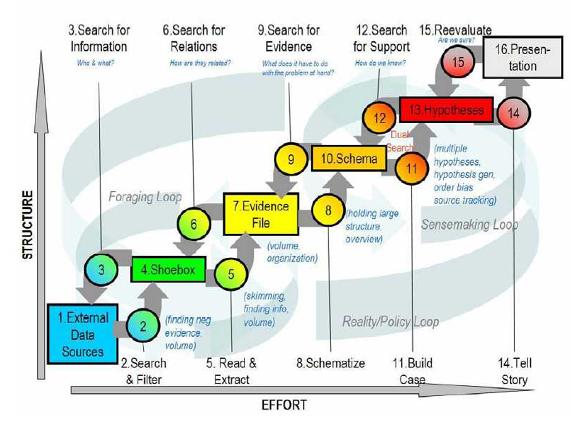

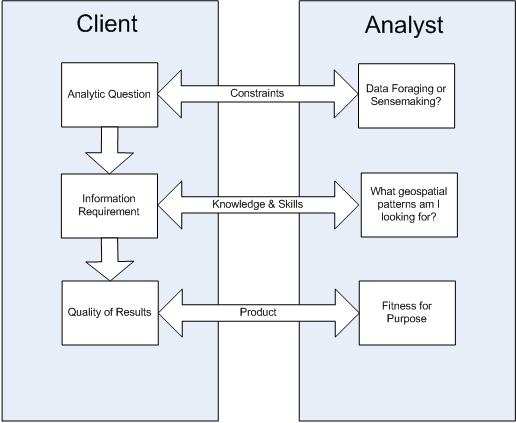

The figure below represents the Structured Geospatial Analytic Process, derived from and incorporating aspects of both Heuer’s ACH and Pirolli and Card's sensemaking process. This is a generalized view of the geospatial analysis process that fits within the larger intelligence process. The rectangular boxes represent analytic activities. The arrows represent the flow from one activity to the next. The activities are arranged by degree of effort and degree of information structure. The overall analytic method has back loops. One set of activities focuses on finding information, and another set of activities focuses on making sense of the information.

The diagram summarizes how an analyst comes up with new information. The data flow shows the transformation of information as it flows from raw information to reportable results through the following steps:

Step 1: Question. Developing the question is a two-way interface between the client requiring information and the geospatial analyst supplying it. Critically, the question defines the broad nature of the spatial and temporal patterns the analyst is seeking to ultimately identify.

Step 2: Grounding and Team Building. Grounding is the raw evidence that reaches the analyst. Grounding is building a potential repertoire of prototypical geospatial and temporal patterns from which a number of hypothetical (possible alternative) patterns will be selected. This step is also where the analytic team is formed.

Step 3: Hypothesis Development. Hypotheses are the tentative representation of conclusions with supporting arguments. This step involves selecting all the reasonably possible geospatial and temporal patterns that might match the pattern envisioned during the development of your question.

Step 4: Evidence Development. Evidence refers to snippets extracted from items discovered in the grounding. Development of the evidence includes developing and applying schemas, which are the representation or organized marshaling of the information so that it can be used more easily to draw conclusions. This includes developing a smaller subset of the data that are relevant for processing, which Pirolli and Card call the “shoebox.” Much of geospatial intelligence work never departs the foraging loop (Steps 1-4) and simply consists of extracting information and repackaging it without much actual analysis. In short, evidence development is the accumulation of the facts that can be used to reject the hypothetical geospatial and temporal patterns determined in Step 3. GIS assists in the development and accumulation of the facts.

Step 5: Fusion. The multimodal (graphical and text) nature of geospatial intelligence data analysis, which is used to reduce the influence of unreliable sources, is essentially a fusion process. Fusion in this step uses the ACH process to combine graphical and textual data to achieve inferences that will be more efficient and potentially more accurate than if they were achieved by means of a single source. Simply put, the fusion process is the comparing of the evidence to each hypothetical geospatial and temporal pattern to determine consistency.

Step 6: Conclusions. The conclusion is a proposition about which hypothetical pattern(s) is (are) most consistent with the evidence and answers the question. Ultimately there is a presentation or other work product.
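The comparison of evidence against each hypothetical pattern can be pictured as a consistency matrix. The Python sketch below is a simplified illustration rather than a full ACH implementation: the function name `rank_hypotheses`, the three rating codes, the example hypotheses and evidence, and the choice to rank by a plain count of inconsistent ratings are all assumptions made for this example.

```python
from typing import Dict, List, Tuple

# Assumed rating codes: "C" = consistent, "I" = inconsistent, "N" = neutral.
Matrix = Dict[str, Dict[str, str]]


def rank_hypotheses(matrix: Matrix) -> List[Tuple[str, int]]:
    """Rank hypotheses from fewest to most inconsistent pieces of evidence."""
    inconsistencies: Dict[str, int] = {}
    for ratings in matrix.values():
        for hypothesis, rating in ratings.items():
            inconsistencies.setdefault(hypothesis, 0)
            if rating == "I":
                inconsistencies[hypothesis] += 1
    # Evidence is used to reject patterns, so fewer inconsistencies ranks higher.
    return sorted(inconsistencies.items(), key=lambda pair: pair[1])


# Hypothetical example: three candidate spatial patterns for a set of incidents.
# H1 = clustered along roads, H2 = clustered near the river, H3 = random.
example_matrix: Matrix = {
    "E1: incidents lie within 500 m of roads": {"H1": "C", "H2": "N", "H3": "I"},
    "E2: no incidents near river crossings": {"H1": "C", "H2": "I", "H3": "N"},
    "E3: point pattern shows clustering": {"H1": "C", "H2": "C", "H3": "I"},
}

ranking = rank_hypotheses(example_matrix)
```

In this made-up example, H1 has no inconsistent evidence, H2 has one inconsistent item, and H3 has two, so H1 would be carried forward to the conclusion as the pattern most consistent with the evidence. A real analysis would also weigh the reliability of each piece of evidence, which this sketch ignores.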

Basically, the data flow represents the converting of raw information into a form where expertise can apply, and then out to another form suited for communication. Information processing can be driven by bottom-up processes (from data to theory) or top-down (from theory to data). The bottom-up process is as described in steps 1 through 6. The top down process is slightly different in that it follows the sequence of:

- Evaluate conclusion. Inquiries from clients or indicators from signposts may generate re-evaluations of the current conclusions developed by an analyst. This may also require the marshaling of additional evidence to support or disconfirm the analysis, or the generation and testing of alternative outcomes.

- Deconstruct the synthesis. Reexamine the table of hypotheses and evidence, beginning with the rankings.

- Examine the evidence. Reexamine collected evidence or search for new evidence. Search for nuggets of information that may suggest new geospatial or temporal patterns that generate hypotheses about plausible relations among entities and events.

- Re-evaluate the hypotheses. Looking for new hypotheses may generate new searches, further data extraction or a search for additional raw data.

- Question your grounding in the problem. New hypotheses may cause analysts to broaden their grounding in prototypical geospatial and temporal patterns.

- Question the question. Revalidate with the client the nature of the geospatial and temporal patterns the analyst is ultimately seeking to identify. Re-examine the process, use of tools, and quality.

Key Definitions and Concepts

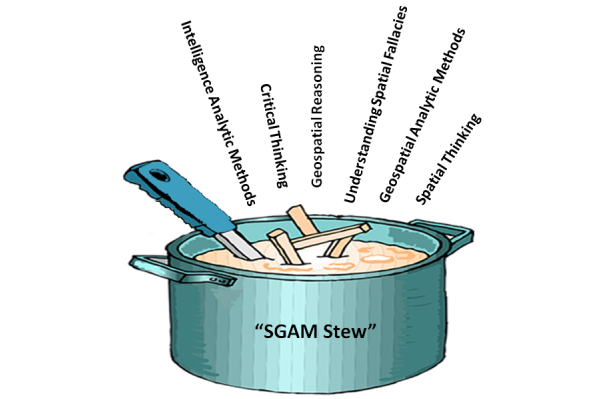

A number of "ingredients" (concepts) have been used in the development of the Structured Geospatial Analysis Method "stew." It is difficult to understand how to apply the method without understanding the ingredients and their associated qualities. The following is a brief discussion of each ingredient for your general reference:

- critical thinking

- spatial thinking

- understanding spatial fallacies

- geospatial reasoning

- analytic methods

- geospatial analytic methods

Recipe for SGAM Stew

Ingredients

- 1 tablespoon of critical thinking

- 1 1/2 pounds intelligence analytic methods

- 1/2 cup geospatial analytic methods

- 1/2 cup sliced understanding spatial fallacies

- 3 cups spatial thinking

- 1 cup geospatial reasoning

- salt and pepper, to taste

Preparation:

In a large saucepan brown the intelligence analytic method; add the geospatial analytic method and sauté for 3 to 5 minutes longer. Add reasoning and spatial thinking; bring to a boil. Reduce heat to low, cover, and simmer for 1 to 1 1/2 hours. Add geospatial reasoning; simmer for about 30 to 40 minutes longer, or until tender. Add drained critical thinking; continue cooking for 5 to 10 minutes.

In a small bowl or cup, combine additional spatial thinking and geospatial reasoning with cold water until smooth. Add the mixture to the simmering broth, a little at a time, until stew is thickened. Taste and add salt and pepper. Serve with hot buttered presentations.

Critical Thinking

There is a great deal of confusion about what critical thinking is and its relationship to an analytical method. Much of the confusion is because there are many definitions of critical thinking. According to Cohen and Salas (Marvin S. Cohen and Eduardo Salas, Critical Thinking: Challenges, Possibilities, and Purpose, March 2002), definitions in the literature suggest that a common core meaning exists, and one might define critical thinking as:

The deliberate evaluation of intellectual products in terms of an appropriate standard of adequacy.

Related to this definition is a theme of early philosophers, such as Descartes, Locke, Berkeley, and Hume: the importance of challenging inherited and customary beliefs. In other words, one should adopt not only a first-person, but also a second-person critical point of view. This imperative of doubting one’s own accepted beliefs is critical thinking. The early philosophers agreed on two things about critical thinking:

- Its purpose is to fulfill an ethical duty to think properly about whether to accept or reject each of our beliefs.

- A constraint on proper thinking about belief acceptance is that it must be based upon good evidence.

Initially evidence was regarded as sufficient only if it guaranteed the truth of a conclusion. Today, theorists acknowledge uncertainty about matters of fact and even about logic. The purpose of critical thinking is, therefore, now seen as to ensure a high probability of truth.

More recently, in 2002, Robert H. Ennis, Retired Director, Illinois Critical Thinking Project, wrote that, "Critical thinking is here assumed to be reasonable reflective thinking focused on deciding what to believe or do. This rough overall definition is, we believe, in accord with the way the term is generally used these days. Under this interpretation, critical thinking is relevant not only to the formation and checking of beliefs, but also to deciding upon and evaluating actions. It involves creative activities such as formulating hypotheses, plans, and counterexamples; planning experiments; and seeing alternatives. Furthermore, critical thinking is reflective -- and reasonable. The negative, harping, complaining characteristic that is sometimes labeled by the word 'critical' is not involved."

In his piece, Super-Streamlined Conception of Critical Thinking, Robert H. Ennis points out that a critical thinker:

- is open-minded and mindful of alternatives;

- tries to be well-informed;

- judges well the credibility of sources;

- identifies conclusions, reasons, and assumptions;

- judges well the quality of an argument, including the acceptability of its reasons, assumptions, and evidence;

- can well develop and defend a reasonable position;

- asks appropriate clarifying questions;

- formulates plausible hypotheses; plans experiments well;

- defines terms in a way appropriate for the context;

- draws conclusions when warranted, but with caution; and

- integrates all items in this list when deciding what to believe or do.

Richard Paul has further defined it as:

Critical thinking is that mode of thinking – about any subject, content or problem – in which the thinker improves the quality of his or her thinking by skillfully taking charge of the structures inherent in thinking and imposing intellectual standards upon them. (Paul, Fisher and Nosich, 1993, p.4)

Alec Fisher, Critical Thinking: An Introduction, Cambridge University Press, points out that, "This definition draws attention to a feature of critical thinking on which teachers and researchers in the field seem to be largely agreed, that the only way to develop one's critical thinking ability is through 'thinking about one's thinking' (often called 'metacognition'), and consciously aiming to improve it by reference to some model of good thinking in that domain."

The essence is that critical thinking in geospatial intelligence is exemplified by asking questions about alternative possibilities in order to achieve some objective analysis rendering a high probability of the selected alternative being true.

Spatial Thinking

To paraphrase William Millwood, creating geospatial analysis requires transformations resulting from an intellectual endeavor that sorts the significant from the insignificant, assesses them severally and jointly, and arrives at a conclusion by the exercise of reasoned judgment. This endeavor, when dealing with geospatial problems, is geospatial reasoning: an operation in which present facts suggest other facts. Geospatial reasoning creates an objective connection between our present geospatial beliefs and the evidence for believing something else.

Spatial thinking includes processes that support exploration and understanding. An expert spatial thinker visualizes relations, imagines transformations from one scale to another, mentally rotates an object to look at its other sides, creates a new viewing angle or perspective, and remembers images in places and spaces. Spatial thinking also allows us to externalize these operations by creating representations such as a map.

Spatial thinking begins with the ability to use space as a framework. An object can be specified relative to the observer, to the environment, to its own intrinsic structure, or to other objects in the environment. Each instance requires the adoption of specific spatial frames of reference or context. The process of interpretation begins with data which is generally context-free numbers, text, or symbols. Information is derived from data by implying some degree of selection, organization, and preparation for a purpose — in other words, the data is placed into a spatial context. For example, the elevation at a specific location is an example of data; however, the elevation only has meaning when placed in context of sea level. The spatial context is critical because it is the space the data is in that ultimately determines its interpretation. There are three spatial contexts within which we can make the data-to-information transition; these include life spaces, physical spaces, and intellectual spaces. In all cases, space provides an interpretive context that gives meaning to the data.

- Life space is the four-dimensional space-time where spatial thinking is a means of coming to grips with the spatial relations between self and objects in the physical environment. This is cognition in space and involves thinking about the world in which we live. It is exemplified by navigation and the actions that we perform in space.

- Physical space is also built on the four-dimensional world of space-time, but focuses on a scientific understanding of the nature, structure and function of phenomena. This is cognition about space and involves thinking about the ways in which the "world" works. An example might be how an earthquake creates a tsunami.

- Intellectual space is in relationship to concepts and objects that are not in and of themselves necessarily spatial, but the nature of the space is defined by the particular problem. This is cognition with space and involves thinking with or through the medium of space in the abstract. An example might be the territorial dispute between two ethnic groups.

Learning to think spatially is to consider objects in terms of their context: to consider the object's location in life space, physical space, or intellectual space; to question why objects are located where they are; and to visualize relationships between and among these objects. The key skills of spatial thinking include the ability to:

- Understand the context. The significance of context was discussed above, but it is important to say that if the data upon which the decision is based are placed into the wrong spatial context, for example, life space rather than intellectual space, it is likely the analysis will be flawed.

- Recognize spatial schemes (patterns and shapes). The successful spatial thinker needs to retain an image of the simple figure in mind, and look for it by suppressing objects irrelevant to a task at hand. This ability allows a geospatial analyst to identify patterns of significance in a map, such as an airfield.

- Recall previously observed objects. The ability to recall an array of objects that was previously seen is called object location memory.

- Integrate observation-based learning. Synthesizing separately made observations into an integrated whole. The expert analyst moves through the data, gathering information from separately observed objects and views, and integrates this information into a coherent mental image of the area.

- Mentally rotate an object and envision scenes from different viewpoints. The ability to imagine and coordinate views from different perspectives has been identified by Piaget and Inhelder (1967) as one of the major instances of projective spatial concepts. Mental-rotation ability or perspective-taking ability could be relevant to those analysis tasks that involve envisioning what an object, such as a building, would look like if seen from another position.

Golledge’s First-Order Primitives constitute a broad list of cognitive schemes for geospatial analysis (R. G. Golledge "Do People Understand Spatial Concepts: The case of First-Order Primitives", Theories and Models of Spatio-Temporal Reasoning in Geographic Space. Pisa: Springer-Verlag, 1992). The schemas are:

- Location. This includes a descriptor with identity, magnitude, location and time. An additional cognitive component might be familiarity. Occurrences are often called environmental cues, nodes, landmarks, or reference points.

- Spatial distributions. Distributions have a pattern, a density, and an internal measure of spatial variance, heterogeneity or dispersion; occurrences in distributions also have characteristics such as proximity, similarity, order, and dominance.

- Regions. Areas of space in which either single or multiple features occur with specified frequency (uniform regions) or over which a single feature dominates.

- Hierarchies. Multiple levels or nested levels of phenomena including features.

- Networks. Linked features having characteristics, connectivity, centrality, diameter, and density. Networks may also include physical links such as transportation systems, or non-visual systems.

- Spatial associations. Associations include spatial autocorrelation, distance decay, and contiguities. Examples of these associations include interaction frequencies or geographic and areal associations. For example, the coincidence of features within specific areas (i.e., squirrels are normally near trees) is a spatial association.

- Surfaces. These are generalizations of discrete phenomena, including densities of occurrence and flows over space and through time (as in the spatial diffusion of information or phenomena).
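One of the spatial associations listed above, spatial autocorrelation, can be expressed as a single number. The following is a minimal sketch of global Moran's I in plain Python. It assumes a hypothetical row of cells in which each cell is a neighbor only of the cells directly beside it; the function names, the neighbor rule, and the sample values are illustrative assumptions and do not come from Golledge's text.

```python
def chain_weights(n):
    """Binary neighbor matrix for n cells in a row (assumed neighbor rule)."""
    weights = [[0] * n for _ in range(n)]
    for i in range(n - 1):
        weights[i][i + 1] = 1
        weights[i + 1][i] = 1
    return weights


def morans_i(values, weights):
    """Global Moran's I: do neighboring cells hold similar values?"""
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    weight_total = sum(sum(row) for row in weights)
    # Cross-products of deviations, counted only for neighboring pairs.
    cross = sum(weights[i][j] * dev[i] * dev[j]
                for i in range(n) for j in range(n))
    variance_term = sum(d * d for d in dev)
    return (n / weight_total) * (cross / variance_term)


w = chain_weights(4)
clustered = morans_i([1, 1, 5, 5], w)    # similar values sit side by side
alternating = morans_i([1, 5, 1, 5], w)  # neighbors always differ
print(clustered, alternating)
```

In this sketch the clustered row gives a positive value and the alternating row gives a negative one. Real analyses would normally use a tested library and a neighbor definition suited to the data.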

Geospatial Reasoning

Reasoning

The three well-known reasoning processes trace the development of analytic beliefs along different paths. Inductive reasoning reveals “that something is probably true,” while deductive reasoning demonstrates “that something is necessarily true.” It is generally accepted within the intelligence community that both are limited: inductive reasoning leads to multiple, equally likely solutions, and deductive reasoning is subject to deception. Therefore, a third aid to judgment, abductive reasoning, showing “that something is plausibly true,” is used to offset the limitations of the others. While analysts who employ all three guides to sound judgment stand to be the most persuasive, fallacious reasoning or mischaracterization of rules, cases, or results in any of the three can affect reasoning using the others.

- Inductive reasoning, moving from the specific case to the general rule, suggests many possible outcomes, or the range of what might happen in the future. However, inductive reasoning lacks a means to distinguish among outcomes. An analyst has no way of knowing whether a solution is correct.

- Deductive reasoning, on the other hand, moves from the general to the specific. Deductive reasoning becomes essential for predictions. Based on past perceptions, certain facts indicate specific outcomes. If, for example, troops are deployed to the border, communications are increased, and leadership is in defensive bunkers, then war is imminent. However, if leadership remains in the public eye, then these preparations indicate that an exercise is imminent.

- Abductive reasoning reveals plausible outcomes. Abductive reasoning is the process of generating the best explanation for a set of observations. When actions defy accurate interpretation through existing paradigms, abductive reasoning generates novel means of explanation. In the case of predictions, an abductive process presents an “assessment of probabilities.” Although abduction provides no guarantee that the analyst has chosen the correct hypothesis, the probative force of the accompanying argument indicates that the most likely hypothesis is known and that actionable intelligence is being developed.
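The deductive example above amounts to a general rule applied to a specific set of observed indicators. A minimal sketch follows, assuming hypothetical indicator names and a hypothetical `assess` function; neither comes from an operational system.

```python
def assess(indicators):
    """Apply the general rule from the text to one specific case.

    `indicators` is a dict of booleans; the key names are illustrative.
    """
    preparations = (indicators.get("troops_at_border", False)
                    and indicators.get("communications_increased", False))
    if not preparations:
        return "rule does not apply"
    if indicators.get("leadership_in_bunkers", False):
        return "war is imminent"
    if indicators.get("leadership_in_public", False):
        return "exercise is imminent"
    return "undetermined"


observed = {
    "troops_at_border": True,
    "communications_increased": True,
    "leadership_in_public": True,
}
print(assess(observed))
```

The rule is only as sound as the observations fed into it. If an adversary stages the indicators, the deduction is still valid but the conclusion is wrong, which is the deception limitation noted above.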

Geospatial Reasoning

It is not too much of a stretch to say that people who are drawn to the discipline of geospatial intelligence have minds accustomed to assembling information into three-dimensional mental schemas. We construct schemas in our mind, rotate them, and view them from many angles. Furthermore, the experienced geospatial professional imagines spatial schemas influenced in the fourth dimension, time. We mentally replay time series of the schema. So easily does the geospatial professional assemble multidimensional models that the expert does it with incomplete data. We mentally fill in gaps, making an intuitive leap toward a working schema with barely enough data to perceive even the most rudimentary spatial patterns. This is a sophisticated form of geospatial reasoning. Expertise increases with experience because as we come across additional schemas, our mind continuously expands to accommodate them. This might be called spatial awareness. As visual-spatial learners, instead of feeling daunted by the abundance and complexity of data, we find pleasure in recognizing the patterns. Are we crazy? No, this is what is called a visual-spatial mind. Some also call these people right brain thinkers.

The concept of right brain and left brain thinking developed from the research of psychobiologist Roger W. Sperry. Sperry discovered that the human brain has two different ways of thinking. The right brain is visual and processes information in an intuitive and simultaneous way, looking first at the whole picture then the details. The left brain is verbal and processes information in an analytical and sequential way, looking first at the pieces then putting them together to get the whole. Some individuals are more whole-brained and equally adept at both modes.

The qualities of the Visual-Spatial [4] person are well documented but not well known. Visual-spatial thinkers are individuals who think in pictures rather than in words. They have a different brain organization than sequential thinkers. They are whole-part thinkers who think in terms of the big picture first before they examine the details. They are non-sequential, which means that they do not think and learn in a step-by-step manner. They arrive at correct solutions without taking steps. They may have difficulty with easy tasks, but show a unique ability with difficult, complex tasks. They are systems thinkers who can orchestrate large amounts of information from different domains, but they often miss the details.

Sarah Andrews [5] likens some contrasting thought processes to a cog railway. Data must be in a set sequence in order to process it through a workflow. In order to answer a given question, the thinker needs information fed to him in order. He will apply a standardized method towards arriving at a pragmatic answer, check his results, and move on to the next question. In order to move comfortably through this routine, he requires that a rigid set of rules be in place. This is compared with the geospatial analyst who grabs information in whatever order, and instead of crunching down a straight-line, formulaic route toward an answer, makes an intuitive, mental leap toward the simultaneous perception of a group of possible answers. The answers may overlap, but none are perfect. In response to this ambiguity, the geospatial analyst develops a risk assessment, chooses the best working answer from this group, and proceeds to improve the estimate by gathering further data. Unlike the engineer, whose formulaic approach requires that the unquestioned authority of the formula exist in order to proceed, the geospatial intelligence professional questions all authority, be it in the form of a human or acquired data.

Analytic Methods in General

Sherman Kent, who has been described as the "father of intelligence analysis", is often acknowledged as the first to propose an analytic method specifically for intelligence. [6] The essence of Kent’s method was understanding the problem, data collection, hypotheses generation, data evaluation, more data collection, followed by hypotheses generation (Kent, S. 1949, Strategic intelligence for American world policy, Princeton University Press, Princeton, NJ.).

Richards Heuer subsequently proposed an ordered eight-step model of “an ideal” analytic process, emphasizing early deliberate generation of hypotheses prior to information acquisition (Heuer, R. 1981, "Strategies for analytical judgment," Studies in Intelligence, Summer, pp. 65-78.):

- Definition of the analytical problem

- Preliminary hypotheses generation

- Selective data acquisition

- Refinement of the hypotheses and additional data collection

- Data interpretation and evaluation

- Hypotheses selection

- Continued monitoring

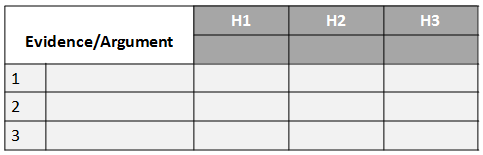

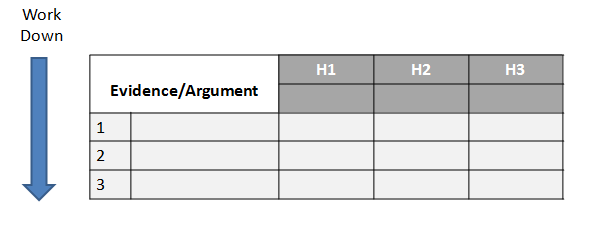

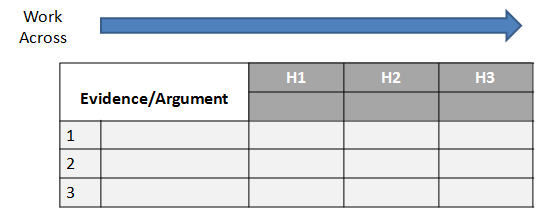

Heuer’s technique has become known as Analysis of Competing Hypotheses (ACH). The technique entails identifying possible hypotheses by brainstorming, listing evidence for and against each, analyzing the evidence and then refining hypotheses, trying to disprove hypotheses, analyzing the sensitivity of critical evidence, reporting conclusions with the relative likelihood of all hypotheses, and identifying milestones that indicate events are taking an unexpected course. Brainstorming is critical because hypothesis generation occurs before additional information acquisition augments the existing knowledge of the problem, so the quality of the hypotheses depends on the existing knowledge and experience of the analysts. ACH is widely cited in the intelligence literature as a means for improving analysis. The primary advantage of ACH is a consistent approach for rejection or validation of many potential conclusions (or hypotheses).
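The bookkeeping behind an ACH matrix can be sketched in a few lines. The example below is illustrative only: the hypotheses (H1 to H3), the evidence items (E1 to E4), and their ratings are invented, and ranking hypotheses by a simple count of inconsistent evidence is an assumed scoring convention rather than something the text above specifies.

```python
# Ratings: "C" = consistent, "I" = inconsistent with the hypothesis.
HYPOTHESES = ["H1", "H2", "H3"]
MATRIX = {
    "E1": {"H1": "C", "H2": "C", "H3": "C"},
    "E2": {"H1": "C", "H2": "I", "H3": "I"},
    "E3": {"H1": "C", "H2": "C", "H3": "I"},
    "E4": {"H1": "I", "H2": "I", "H3": "C"},
}


def inconsistency_scores(matrix):
    """Count the evidence items that argue against each hypothesis."""
    return {h: sum(1 for ratings in matrix.values() if ratings[h] == "I")
            for h in HYPOTHESES}


def least_inconsistent(matrix):
    """Hypotheses with the fewest inconsistencies (ties are all returned)."""
    scores = inconsistency_scores(matrix)
    best = min(scores.values())
    return [h for h in HYPOTHESES if scores[h] == best]


def diagnostic_evidence(matrix):
    """Evidence rated the same for every hypothesis cannot separate them."""
    return [e for e, ratings in matrix.items()
            if len(set(ratings.values())) > 1]


def critical_evidence(matrix):
    """Sensitivity check: which single items, if dropped, change the result?"""
    baseline = least_inconsistent(matrix)
    critical = []
    for item in matrix:
        reduced = {e: r for e, r in matrix.items() if e != item}
        if least_inconsistent(reduced) != baseline:
            critical.append(item)
    return critical


print(inconsistency_scores(MATRIX))
print(least_inconsistent(MATRIX))
print(diagnostic_evidence(MATRIX))
print(critical_evidence(MATRIX))
```

The sketch ranks hypotheses by how much evidence argues against them rather than for them, reflecting the emphasis on trying to disprove hypotheses. The sensitivity check flags the evidence items whose reliability deserves the closest scrutiny.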

Heuer acknowledges how mental models, or mind sets, are essentially the re-representations of how analysts perceive information (Heuer, Richards J. Jr. & Center for the Study of Intelligence 1999, Psychology of intelligence analysis, Center for the Study of Intelligence, Central Intelligence Agency, Washington, DC.). Even though every analyst sees the same piece of information, it is interpreted differently due to a variety of factors (past experience, education, and cultural values to name merely a few). In essence, one's perceptions are morphed by a variety of factors that are completely out of the control of the analyst. Heuer sees mental models as potentially good and bad for the analyst. On the positive side, they tend to simplify information for the sake of comprehension, but they also obscure genuine clarity of interpretation.

ACH has evolved into an eight-step procedure based upon cognitive psychology, decision analysis, and the scientific method. It is believed to be particularly appropriate for establishing an audit trail to show what an analyst considered and how they arrived at their judgment (Heuer, Richards J. Jr. & Center for the Study of Intelligence 1999, Psychology of intelligence analysis, Center for the Study of Intelligence, Central Intelligence Agency, Washington, DC.).

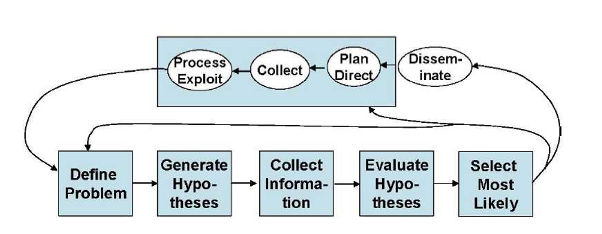

Heuer’s approach is the prevailing view of the analysis process. Figure 1 by the 2002 Joint Military Intelligence College (JMIC) illustrates the integration of the fundamentals of ACH into the intelligence process (Waltz, E. 2003, Toward a MAUI NITE intelligence analytic process model, Veridian Systems, Arlington, VA.).

Figure 1 is particularly significant since it shows the intelligence cycle steps of:

- planning and direction,

- collection,

- processing,

- analysis, and

- dissemination

The analysis step, in turn, incorporates the following analytic process steps:

- define the problem,

- develop hypotheses,

- collect information,

- evaluate hypotheses, and

- select the most likely alternative.

Since Heuer’s development of ACH, another model of the intelligence analysis process was proposed by Pirolli in 2006, derived from the results of a cognitive task analysis of intelligence analysts (Pirolli, P.L. 2006, Assisting people to become independent learners in the analysis of intelligence: final technical report, Palo Alto Research Center, Inc., Palo Alto, CA.). The analytic process is described as “A Notional Model of Analyst Sensemaking,” with the cognitive task analysis indicating that the bottom-up and top-down processes shown in each loop are “…invoked in an opportunistic mix.” (Pirolli, P. & Card, S.K. 2006, The sensemaking process and leverage points for analyst technology identified through cognitive task analysis, Palo Alto Research Center, Inc., Palo Alto, CA.). Figure 2 illustrates this process.

The term “sensemaking” is used to describe the analysis process. Sensemaking is defined “…as the deliberate effort to understand events,” describing the elements of sensemaking using the terms “data” and “frame.” A frame is “…an explanatory structure that defines entities by describing their relationship to other entities” (Klein, G., Phillips, J.K., Rall, E.L. & Peluso, D.A. 2007, "A data-frame theory of sensemaking" in Expertise out of context, ed. R.R. Hoffman, pp. 113-15). The Klein article further explains that “The data identify the relevant frame, and the frame determines which data are noticed. Neither of these comes first. The data elicit and help to construct the frame; the frame defines, connects and filters the data.”

Pirolli and Card contend that many forms of intelligence analysis are sensemaking tasks. As figure 2 illustrates, such sensemaking tasks consist of information gathering, re-representation of the information in a schema that aids analysis, the development of insight through the manipulation of this representation, and the creation of some knowledge based on the insight. The analyst proceeds through the process of:

information → schema → insight → product

They also suggested that the process may be reversed to:

product → insight → schema → information

In other words, in terms of Figure 2, the process can be a mix: top-down and/or bottom-up.

Schemas are the re-representation or organized marshaling of the information so that it can be used more easily to draw conclusions. Pirolli and Card note that the re-representation “may be informally in the analyst’s mind or aided by a paper and pencil or computer-based system” (Pirolli, P. & Card, S.K. 2006, The sensemaking process and leverage points for analyst technology identified through cognitive task analysis, Palo Alto Research Center, Inc., Palo Alto, CA.).

Geospatial Analytic Methods

Geospatial Preparation of the Environment (GPE)

The geospatial intelligence preparation of the environment (GPE) analytic method is based on the intelligence cycle and process. According to the National Geospatial-Intelligence Agency (NGA) [7], the steps are:

- 1. Define the Environment: Gather basic facts needed to outline the exact location of the mission or area of interest. Physical, political, and ethnic boundaries must be determined. The data might include grid coordinates, latitude and longitude, vectors, altitudes, natural boundaries (mountain ranges, rivers, shorelines), etc. This data serves as the foundation for the GEOINT product.

- 2. Describe Influences of the Environment: Provide descriptive information about the area defined in Step 1. Identify existing natural conditions, infrastructure, and cultural factors. Consider all details that may affect a potential operation in the area: weather, vegetation, roads, facilities, population, languages, social, ethnic, religious, and political factors. Layer this information onto the foundation developed in Step 1.

- 3. Assess Threats and Hazards: Add intelligence and threat data, drawn from multiple intelligence disciplines, onto the foundation and descriptive information layers (the environment established in the first two steps). This information includes: order-of-battle; size and strength of enemy or threat; enemy doctrine; nature, strength, capabilities and intent of area insurgent groups; effects of possible chemical/biological threats. Step 3 requires collaboration with national security community counterparts.

- 4. Develop Analytic Conclusions: Integrate all information from Steps 1-3 to develop analytic conclusions. The emphasis is on developing predictive analysis. In Step 4, the analyst may create models to examine and assess the likely next actions of the threat, the impact of those actions, and the feasibility and impact of countermeasures to threat actions.

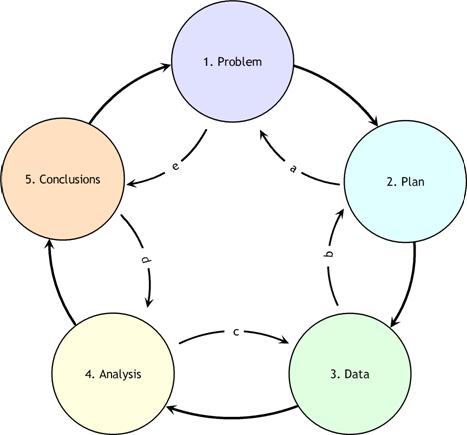

PPDAC: Problem, Plan, Data, Analysis, and Conclusions

De Smith and Goodchild [8] examined the geospatial analysis process in the broader context of analytical methodologies. The process of geospatial analysis typically follows a number of well-defined and iterative stages:

- problem formulation;

- planning;

- data gathering;

- exploratory analysis;

- hypothesis formulation;

- modeling;

- consultation and review; and

- ultimately, final reporting and/or implementation.

On the whole, geospatial analysis can be seen as part of a decision process and support infrastructure. The process from problem specification to outcome is, in reality, an over-simplification, and the analytical process is more complex and iterative than the steps suggest. GIS and related software tools that perform analytical functions only address data gathering, analysis, and modeling. As de Smith and Goodchild point out, a straight flow from start to finish is rarely the case. Not only is the process iterative, but at each stage one often looks back to the previous step and re-evaluates the validity of the decisions made. Mackay and Oldford, in de Smith and Goodchild [9], described a spatial analysis method in terms of a sequence of steps labeled PPDAC: Problem; Plan; Data; Analysis; and Conclusions. The PPDAC approach is shown in the figure below.

As can be seen from the diagram, although the clockwise sequence (1→5) applies as the principal flow, each stage may, and often will, feed back to the previous stage. In addition, it may well be beneficial to examine the process in the reverse direction, starting with Problem definition and then examining expectations as to the format and structure of the Conclusions. This procedure then continues, step-by-step, in an anti-clockwise manner (e→a) determining the implications of these expectations for each stage of the process.

PPDAC develops evidence. Evidence, in the context of this discussion, refers to the information that is gathered by exploratory analysis of spatial and temporal data. These methods include remote sensing and GIS to develop intermediate products. "Exploratory data analysis (EDA) is about detecting and describing patterns, trends, and relations in data, motivated by certain purposes of investigation. As something relevant is detected in data, new questions arise, causing specific parts to be viewed in more detail. So EDA has a significant appeal: it involves hypothesis generation rather than mere hypothesis testing" (Exploratory Analysis of Spatial and Temporal Data, Springer, 2006). Ultimately, what is evidence is defined by the intelligence producer. Ideally, "evidence" in the context of the framework of the problem should include: the context and the scientific and intuitive evidence.

Understanding Spatial Fallacies

Complex issues in spatial analysis lead to bias, distortion and errors. These issues are often interlinked but various attempts have been made to separate out particular issues from each other. Here is a brief list:

Known Length - Lengths in earth measurement depend directly on the scale at which they are measured and experienced. So while we measure the length of a river, street, et cetera, this length only has meaning in the context of the relevance of the measuring technique to the question under study.

Locational Fallacy - The locational fallacy refers to error due to the particular spatial characterization chosen for the elements of study, in particular the choice of placement for the spatial presence of the element. Spatial characterizations may be simplistic or even wrong. Studies of humans often reduce the spatial existence of humans to a single point, for instance their home address. This can easily lead to poor analysis, for example, when considering disease transmission, which can happen at work or at school and therefore far from the home. The spatial characterization may implicitly limit the subject of study. For example, the spatial analysis of crime data has recently become popular, but these studies can only describe the particular kinds of crime which can be described spatially. This leads to many maps of assault but not to any maps of embezzlement, with political consequences in the conceptualization of crime and the design of policies to address the issue.

Atomic Fallacy - This describes errors due to treating elements as separate 'atoms' outside of their spatial context.

Ecological Fallacy - The ecological fallacy describes errors due to performing analyses on aggregate data when trying to reach conclusions on the individual units. It is closely related to the modifiable areal unit problem.
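A small sketch can make the ecological fallacy concrete. The zone names and the (x, y) values below are invented for illustration. Within every zone the two variables move in opposite directions, yet the zone averages move together, so a conclusion drawn from the aggregates alone would be wrong about the individuals.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical individual-level observations (x, y), grouped by zone.
zones = {
    "zone_1": [(1, 3), (2, 2), (3, 1)],
    "zone_2": [(4, 6), (5, 5), (6, 4)],
    "zone_3": [(7, 9), (8, 8), (9, 7)],
}

# Correlation among individuals inside each zone.
within_r = {
    name: pearson([x for x, _ in obs], [y for _, y in obs])
    for name, obs in zones.items()
}

# Correlation among the zone averages (the aggregate data).
mean_x = [sum(x for x, _ in obs) / len(obs) for obs in zones.values()]
mean_y = [sum(y for _, y in obs) / len(obs) for obs in zones.values()]
aggregate_r = pearson(mean_x, mean_y)

print("within zones:", within_r)
print("between zone averages:", aggregate_r)
```

The aggregate relationship is strongly positive while the relationship inside each zone is strongly negative. Inferring individual behavior from the zone averages is exactly the error the ecological fallacy describes.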

Modifiable areal unit problem - The modifiable areal unit problem (MAUP) is an issue in the analysis of spatial data arranged in zones, where the conclusion depends on the particular shape or size of the zones used in the analysis. Spatial analysis and modeling often involve aggregate spatial units such as census tracts or traffic analysis zones. These units may reflect data collection and/or modeling convenience rather than homogeneous, cohesive regions in the real world. The spatial units are therefore arbitrary or modifiable and contain artifacts related to the degree of spatial aggregation or the placement of boundaries. The problem arises because results derived from an analysis of these zones depend directly on the zones being studied. It has been shown that the aggregation of point data into zones of different shapes and sizes can lead to opposite conclusions. More detail is available at the modifiable areal unit problem topic entry.
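The zoning effect can be sketched with a toy example. The 3 x 3 grid of observations and the category labels "A" and "B" are hypothetical; the same nine observations are aggregated once into horizontal zones and once into vertical zones, and the majority category of each zone is reported.

```python
from collections import Counter

# Hypothetical point observations on a 3 x 3 grid; each cell holds one
# observation belonging to category "A" or category "B".
grid = [
    ["B", "A", "A"],
    ["A", "B", "A"],
    ["B", "B", "B"],
]

def majority(cells):
    """Most frequent category in a zone (zones here have 3 cells, so no ties)."""
    return Counter(cells).most_common(1)[0][0]

# Zoning scheme 1: each row of the grid is one zone.
row_zones = [majority(row) for row in grid]

# Zoning scheme 2: each column of the grid is one zone.
col_zones = [majority(list(col)) for col in zip(*grid)]

print("row zones:   ", row_zones)   # "A" is the majority in two of three zones
print("column zones:", col_zones)   # "B" is the majority in two of three zones
```

The underlying observations never change, yet one zoning scheme suggests that "A" dominates the study area and the other suggests that "B" does. Only the placement of the zone boundaries differs.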

Memory Aid

Spatial thinking is the essence of Geospatial Intelligence. Spatial thinking is thinking that finds meaning in the shape, size, orientation, location, direction or trajectory, of objects, processes or phenomena, or the relative positions in space of multiple objects, processes or phenomena. Geospatial thinking is spatial thinking related to the earth. An expert geospatial thinker (NAP, 2006, p. 3):

- Identifies spatial patterns within the context of life, physical, and intellectual space by examining the fundamental spatial aspects, qualities, and relationships

- Recalls similar spatial patterns

- Performs mental transformations to compare the patterns

- Assigns meaning and significance to the patterns with respect to earth phenomena

The following geospatial thinking process is simply offered as a structure to make sure that key concepts are not overlooked. Nothing here is likely new to the skilled geospatial thinker, but it is purely a reminder of the actions that can help the analyst think about geospatial problems.

Action 1: Identify the entity. The entity can be a natural or human phenomenon relevant to the problem. An example is the DC Shooter case.

Action 2: Think about the entity in the space contexts. The definition of the spatial presence of an entity is the prerequisite for spatial thinking. The spatial context is critical because it is the space the entity is in that ultimately determines its interpretation. There are three spatial contexts within which we can make the data-to-information transition. These are:

- life space

- physical space

- intellectual space

In all cases, space provides an interpretive context that gives meaning to the data.

- Life space, or behavioral environment, is the four-dimensional space-time that provides the means of coming to grips with the spatial relations between self and objects in the physical environment. This is cognition in space and involves thinking about the world in which we live. It is exemplified by navigation and the actions that we perform in space. An example is how the DC sniper navigates through a neighborhood.

- Physical space is the four-dimensional space-time that focuses on a scientific understanding of the nature, structure, and function of phenomena. This is cognition about space and involves thinking about the ways in which the "world" works. An example is the scientific understanding of the geometry of a rifle’s projectile and how an intervening building shields a shooter’s target.

- Intellectual space relates to concepts and objects that are not in and of themselves necessarily spatial; the nature of the space is defined by the particular problem. This is cognition with space and involves thinking with or through the medium of space in the abstract. An example is Rossmo’s (1997) “hunters,” those criminals who specifically set out from their residence to look for victims, searching through the areas in their awareness spaces that they believe contain suitable targets.

Action 3: Place the phenomena in the context of the earth. When making sense of the space (Gersmehl and Gersmehl, 2006), the spatial thinker first asks the fundamental spatial questions:

- Where is this place?

- What is at this place?

- How is this place linked to other places?

Examples are:

- The shooting occurred at Y.

- Y is the location of a gas station.

- Y is 5 miles from where event X occurred.
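A statement such as "Y is 5 miles from X" is typically derived from coordinates. The sketch below uses the haversine formula on a spherical earth, which is one common approximation; the mean radius of 3958.8 miles and the two coordinate pairs are assumptions made for illustration and are not locations from the case.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # assumed mean radius of a spherical earth

def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))

# Hypothetical event locations (latitude, longitude in decimal degrees).
event_x = (39.00, -77.00)
event_y = (39.05, -77.06)

distance = haversine_miles(*event_x, *event_y)
print(f"Y is {distance:.1f} miles from X")
```

For short distances a projected coordinate system would serve equally well; the spherical formula is used here only because it needs no projection library.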

Action 4: Examine the qualities of the objects or events. The spatial thinker then proceeds to examine the places by asking the following questions:

- How are places similar or different?

- What effect(s) does a feature have on nearby areas?

- What nearby places are similar to each other and can be grouped together?

- Where does this place fit in a hierarchy of nested areas?

- Is the change between places abrupt, gradual, or irregular?

- What distant places have similar situations and therefore may have similar conditions?

- Are there clusters, strings, rings, waves, or other non-random arrangements of features?

- Do features tend to occur together (have similar spatial patterns)?

For example, the DC shootings occurred at gas stations near entrances to high-speed highways. Note or remember the qualities. Return to Action 2 if you have not explored all of the space contexts.
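The question about clusters and other non-random arrangements can be approached numerically. One widely used measure is the nearest-neighbor ratio (often attributed to Clark and Evans), which compares the observed mean distance to each point's nearest neighbor with the distance expected for a random pattern of the same density. The sketch below is a simplified version that ignores edge effects, and both point sets are hypothetical.

```python
import math

def nearest_neighbor_ratio(points, area):
    """Observed mean nearest-neighbor distance divided by the value expected
    under complete spatial randomness, 0.5 / sqrt(n / area).
    Ratios below 1 suggest clustering; ratios above 1 suggest dispersion."""
    n = len(points)
    observed = sum(
        min(math.dist(p, q) for j, q in enumerate(points) if j != i)
        for i, p in enumerate(points)
    ) / n
    expected = 0.5 / math.sqrt(n / area)
    return observed / expected

STUDY_AREA = 16.0  # hypothetical 4 x 4 study area, in square miles

# Sixteen evenly spaced points covering the study area.
regular = [(i + 0.5, j + 0.5) for i in range(4) for j in range(4)]

# Sixteen points packed into one small corner of the same study area.
clustered = [(2 + 0.1 * i, 2 + 0.1 * j) for i in range(4) for j in range(4)]

print("regular:  ", round(nearest_neighbor_ratio(regular, STUDY_AREA), 2))
print("clustered:", round(nearest_neighbor_ratio(clustered, STUDY_AREA), 2))
```

The ratio depends on the study area chosen, so it is itself subject to the boundary and scale issues discussed under spatial fallacies.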

Action 5: Recalling the results of Action 4, examine the space-time relationship between the objects and/or events. Last, the comparisons are placed into the context of space and time. Spatial thinking goes beyond a simple identification of locations. It involves comparing locations, considering the influence of nearby features, grouping regions and hierarchies, and identifying distant places that have similar conditions. It is also the consideration of change, movement and diffusion through time and place. This is spatiotemporal thinking, which asks the questions:

- How do spatial features change through time?

- How do conditions change at a place over time?

- What is the change in position of something over time?

- What is the change in extent of something over time?

- Where are places that do not seem to follow an observed “rule”?

For example, the DC Sniper pattern of events seems to be spatially random. Note or remember the time-space relationships.
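The question "What is the change in position of something over time?" can be sketched by ordering events in time and measuring the displacement between consecutive events. The event records below (day, x, y, in hypothetical planar coordinates measured in miles) are invented and do not describe the actual case.

```python
import math

def movement_steps(events):
    """For time-ordered events (day, x, y), return a list of
    (elapsed_days, distance_moved) between consecutive events."""
    ordered = sorted(events)  # tuples sort by their first element, the day
    return [
        (t2 - t1, math.dist((x1, y1), (x2, y2)))
        for (t1, x1, y1), (t2, x2, y2) in zip(ordered, ordered[1:])
    ]

# Hypothetical events, deliberately listed out of chronological order.
events = [
    (3, 6.0, 8.0),
    (1, 0.0, 0.0),
    (5, 6.0, 8.0),
    (2, 3.0, 4.0),
]

for elapsed, moved in movement_steps(events):
    print(f"{elapsed} day(s) later, {moved:.1f} miles away")
```

Listing the steps this way makes it easier to spot an event that does not follow the observed "rule," such as the final step above, where the location repeats after a longer gap.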

Action 6: Recall the results of Actions 4 and 5. Think through your past results or the lists you created.

Action 7: Transform the patterns while searching for similar spatial patterns. This is the ability to imagine and reason about objects and their spatial layout. Mental transformations that are important to geospatial intelligence cognition are object-based spatial transformations and egocentric perspective transformations. Object-based transformations are imagined rotations or translations of objects relative to the reference frame of the environment. Egocentric perspective transformations are imagined rotations or translations of one’s point of view relative to that reference frame. For example, to retrieve what is on the other side of the grocery aisle you might imagine the aisle rotating or yourself moving relative to the aisle. Another example is mentally transforming Rossmo’s (1997) “hunters” pattern to match the observed DC Sniper pattern.
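An object-based transformation has a direct computational analogue: rotating and translating a set of coordinates and then checking whether the result lines up with an observed pattern. The template and observed points below are hypothetical, and the comparison assumes that the two patterns list corresponding points in the same order.

```python
import math

def transform(points, angle_deg, dx=0.0, dy=0.0):
    """Rotate points counterclockwise about the origin, then translate them."""
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    return [(x * cos_a - y * sin_a + dx, x * sin_a + y * cos_a + dy)
            for x, y in points]

def patterns_match(first, second, tol=1e-9):
    """True if corresponding points of the two patterns coincide within tol."""
    return len(first) == len(second) and all(
        math.dist(p, q) <= tol for p, q in zip(first, second)
    )

# Hypothetical remembered pattern and a pattern observed elsewhere on the map.
template = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0)]
observed = [(5.0, 5.0), (5.0, 7.0), (4.0, 7.0)]

print(patterns_match(template, observed))                        # before transforming
print(patterns_match(transform(template, 90, 5, 5), observed))   # after rotating and shifting
```

The two patterns look different in raw coordinates but coincide once the template is rotated a quarter turn and shifted, which is the kind of comparison the analyst performs mentally.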

Action 8: Compare qualities and relationships. A primary cognitive function of the geospatial analyst is determining the spatial relationships between features. Recognizing the pattern that represents a prototypical hospital, school, or housing development is an example of comparing spatial relationships. For example, Rossmo’s “hunters” yield a donut-like geospatial pattern, but the pattern exhibited by the DC Sniper is largely random.

Action 9: Assign meaning. Interpreting and understanding a pattern is a complex reasoning process because the human mind functions symbolically, assigning meaning based on experience, consciousness, beliefs, and emotions. This is not direct knowledge, and the symbolism is very fallible. For example, the DC Sniper’s patterns might not conform to the modus operandi of Rossmo’s “hunters,” and other valid meanings could be assigned to the observed patterns.

Chapter 3 - Structured Analytic Techniques

Chapter 3 Overview

Geospatial analysis can be very difficult to do well. Much of the difficulty is cognitive and not related to an individual's ability to use the technical tools, i.e., GIS. It takes far greater mental agility than gathering evidence supporting a single hypothesis that was pre-judged as the most likely answer. To develop and retain multiple spatial schemes in working memory and note how each item of information fits into each hypothesis is beyond the mental capabilities of most analysts. (Note: Working memory tasks include the active monitoring or manipulation of information or behaviors.)

Moreover, truly good geospatial analysis requires monitoring your progress, making changes and adapting the ways you are thinking. It is about self-reflection, self-responsibility, and initiative to achieve the analytic results within the time allotted. This mental agility can be accomplished with the help of a few simple thinking tools discussed here.

Challenging Mindsets

Heuer makes three important points relative to intelligence in his work, the Psychology of Intelligence Analysis [1].

- Human minds are ill equipped ("poorly wired") to cope effectively with both inherent and induced uncertainty.

- Increased knowledge of one's own inherent biases tends to be of little assistance to the analyst.

- Tools and techniques that apply higher levels of critical thinking can substantially improve analysis of complex problems.

He provides the following series of images to illustrate how poorly we are cognitively equipped to accurately interpret the world.

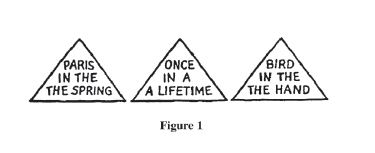

Question #1: What did you see in the figure? The answer is at the bottom of this page.

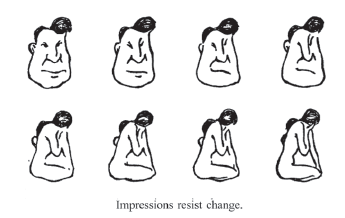

Question #2: Look at the drawing of the man in the upper right. Are the drawings all of men? The answer is at the bottom of this page.

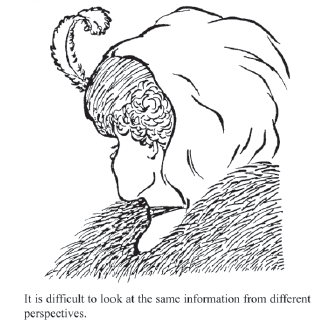

Question #3: What do you see—an old woman or a young woman? The answer is at the bottom of this page.

Now look to see if you can reorganize the drawing to form a different image of a young woman, if your original perception was of an old woman, or of the old woman if you first perceived the young one.

According to Heuer, and as the above figures illustrate, mental models, mindsets, or cognitive patterns are essentially the analogous image by which people perceive information. Even though every analyst sees the same piece of information, it is interpreted differently due to a variety of factors. In essence, one's perceptions are morphed by a variety of factors that are completely out of the control of the analyst. Heuer sees these cognitive patterns as potentially both good and bad for the analyst. On the positive side, they tend to simplify information for the sake of comprehension, but they also bias interpretation. The key risks of mindsets are that:

- analysts perceive what they expect to perceive;

- once formed, they are resistant to change;

- new information is assimilated, sometimes erroneously, into existing mental models; and

- conflicting information is often dismissed or ignored.

Therefore, since all people observe the same information with inherent and different biases, Heuer believes an effective analysis method needs a few safeguards. The analysis method should:

- encourage products that clearly show their assumptions and chains of inferences; and

- emphasize procedures that expose alternative points of view.

What is required of analysts is a process for challenging, refining, and challenging again their own working mental models. This is a key component of his Structured Analytic Techniques (SATs), which include Analysis of Competing Hypotheses.

These problems notwithstanding, cognitive patterns are critical to allowing individuals to process what otherwise would be an incomprehensible volume of information. Yet, they can cause analysts to overlook, reject, or forget important incoming or missing information that is not in accord with their assumptions and expectations. Seasoned analysts may be more susceptible to these mindset problems as a result of their expertise and past success in using time-tested mental models.

Answers

Answer #1: The article is written twice in each of the three phrases. This is commonly overlooked because perception is influenced by our expectations about how these familiar phrases are normally written.

Answer #2: The above figure illustrates that mind-sets tend to be quick to form but resistant to change by showing part of a longer series of progressively modified drawings that change almost imperceptibly from a man into a woman. The right-hand drawing in the top row, when viewed alone, has equal chances of being perceived as a man or a woman.

Answer #3: The old woman’s nose, mouth, and eye are, respectively, the young woman’s chin, necklace, and ear. The old woman is seen in profile looking left. The young woman is also looking left, but we see her mainly from behind so most facial features are not visible. Her eyelash, nose, and the curve of her cheek may be seen just above the old woman’s nose.

The Structured Analytic Techniques "Toolbox"

Structured analytic techniques are simply a "box of tools" to help the analyst mitigate the adverse impact on analysis of one's cognitive limitations and pitfalls. Taken alone, they do not constitute an analytic method for solving geospatial analytic problems. The most distinctive characteristic is that structured techniques help to decompose one's geospatial thinking in a manner that enables it to be reviewed, documented, and critiqued. "A Tradecraft Primer: Structured Analytic Techniques for Improving Intelligence Analysis" [10] (CIA, 2009) highlights a few structured analytic techniques used in the private sector, academia, and the intelligence profession.