2.6 3D and VR Application Building Workflows

Typically, 3D models are created with a concrete final application or product in mind. This could be presentational material for a website, a standalone 3D application like a virtual city tour or a computer game, a VR application intended to run on one of the consumer VR platforms available today, or a spatial analysis performed with the created model to support future decision making, to name just a few examples. In many of these scenarios, a user is supposed to interact with the created 3D model. Interaction options range from passively observing the model (for example, as an image, animation, or movie), through navigating within the model, to actively measuring and manipulating parts of it. The workflows to create the final product usually require many steps and involve several different software tools, including 3D modeling software, 3D application building software like game engines, 3D spatial analysis tools, 3D presentation tools and platforms, and different target platforms and devices.

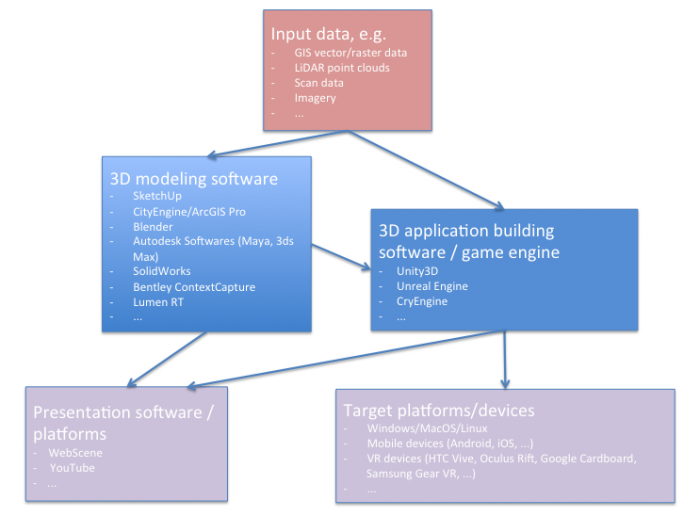

The figure above illustrates what 3D application building workflows can look like on a general level. The workflow may start with measured or otherwise obtained input data that is imported into one or more 3D modeling tools; such input can also simply be sketches or single photos. In the 3D modeling tools, 3D models are created manually, with automatic computational methods, or with a combination of both. The created models can then be used as input for spatial analysis tools, used directly with presentation tools (for example, to embed an animated version of the model on a web page), or exported to 3D application building software, typically a game engine such as Unity3D or the Unreal Engine, to build a 3D application around them. The result of the 3D application building work can again be static output, like a movie to be used as presentation material, or an interactive 3D or VR application built for one of many target platforms or devices: for example, a stand-alone 3D application for Windows, macOS, or Linux, an app for a mobile device (for instance, Android- or iOS-based), or a VR application for one of the many available VR devices.

The figure is an idealization in that real workflows are typically not as linear as the arrows suggest. Often it is necessary to go back one or more steps, for instance from the 3D application building phase to the 3D modeling phase, to modify earlier outputs based on experience gained or problems encountered in later phases. A project can also work on several stages in parallel, such as creating 3D models at multiple levels of detail (LoD) to suit the intended platforms. Moreover, while the figure lists example software for the different areas, these lists are by no means exhaustive, and existing 3D software often covers several of these areas. For instance, 3D modeling software often supports creating animations such as camera flights through a scene, and Esri's CityEngine and ArcGIS Pro allow running different analyses within the constructed model.

A schematic diagram outlines the following information (in summary):

- Input data (in a variety of formats) is imported into either 3D modeling software or 3D application building software such as a game engine.

- In 3D modeling software, 3D models are created either manually or automatically based on computational methods. The resulting models can be either used directly for visualization (e.g., on the web) or imported into 3D application building software such as Unity3D for more elaborate functionality.

- Applications built with game engines (e.g., Unity3D) can be deployed to the web and to a variety of platforms, including VR headsets.

- Depending on the application and the deployment platform, attributes of the models such as the level of detail (LoD) can be adjusted.
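One common way to act on the LoD point above is distance-based switching. In this approach the engine swaps in a coarser version of a model as it moves away from the camera, and a weaker target device switches sooner. The minimal Python sketch below illustrates the idea. The threshold values, the names `select_lod`, `DESKTOP_THRESHOLDS`, and `MOBILE_THRESHOLDS`, and the per-platform split are all hypothetical and are not taken from the campus project.

```python
from bisect import bisect_right

# Hypothetical switch distances (meters). LoD 0 is the most detailed model;
# passing each threshold moves to the next coarser level.
DESKTOP_THRESHOLDS = [50.0, 200.0, 800.0]
# A weaker target (e.g., a phone) switches to coarser models sooner.
MOBILE_THRESHOLDS = [25.0, 100.0, 400.0]


def select_lod(distance: float, thresholds: list[float]) -> int:
    """Return the LoD index for an object at the given camera distance.

    Distances below thresholds[0] get LoD 0; distances at or beyond the
    last threshold get the coarsest level, len(thresholds).
    """
    if distance < 0:
        raise ValueError("distance must be non-negative")
    # bisect_right counts how many thresholds are <= distance
    return bisect_right(thresholds, distance)


# A building 150 m from the camera:
print(select_lod(150.0, DESKTOP_THRESHOLDS))  # 1
print(select_lod(150.0, MOBILE_THRESHOLDS))   # 2
```

Unity's own LOD Group component bases the switch on the object's relative height on screen rather than on raw distance. The principle is the same: pick a cheaper model when the extra detail would not be visible.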

A 3D modeling project can also target several or even many output formats and platforms simultaneously. For instance, for the PSU campus modeling project that we use as an example in this course, the goal could be to produce (a) images and animated models of individual buildings for a web presentation, (b) a 360° movie of a camera flight over the campus that can be shared via YouTube and also watched in 3D with Google Cardboard, (c) a standalone Windows application showing the same camera flight as the 360° video, (d) a similar standalone application for an Android-based smartphone, but one where the user can look around by tilting and panning the phone, and (e) VR applications for the Oculus Rift and HTC Vive in which the user can observe and walk around in the model.

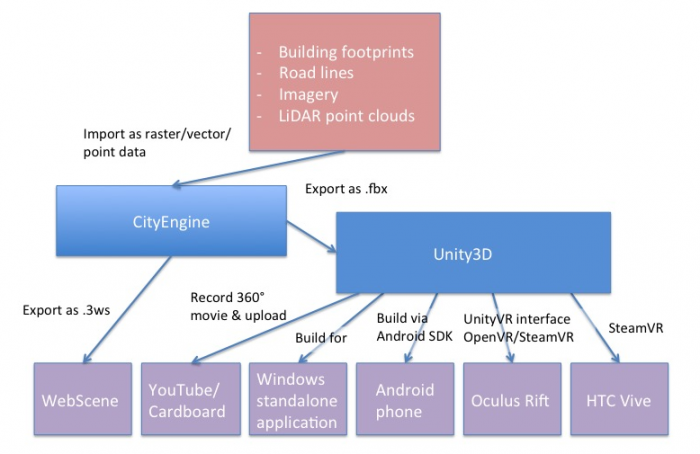

The figure above illustrates the concrete workflow for this project (again in an idealized linear order), showing the actual software tools used and providing more detail on the interfaces between the different stages. The main input data for the campus model is extracted from airborne LiDAR point clouds. From this data, building footprints, building and tree heights, and a Digital Elevation Model (DEM) are derived. Roads (streets and walkways) are 2D shapefiles received from the Office of Physical Plant at PSU. Information on roof shapes and tree types is collected through surveys. The DEM, which is in raster format, and the other data, which are in vector format (polygons, lines, and points), are imported into CityEngine, an engine for generating procedural models. Procedural modeling using CityEngine will be explained in more detail in Lesson 4. The resulting procedural model can be shared on the web as an ArcGIS Web Scene for visualization and presentation. It can also be exported to 3D/VR application building software.
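The Python sketch below shows one plausible form of the height extraction step. It estimates a building's height from the airborne LiDAR returns that fall inside the building's footprint polygon. The sketch makes three assumptions: the points arrive as `(x, y, z)` tuples in the same projected coordinate system as the footprint, a ground elevation for the building (for example, sampled from the DEM) is already known, and the function names are hypothetical. Taking a high percentile of the roof returns rather than the maximum is one common way to suppress stray high points. The 95th percentile used here is an illustrative choice, not necessarily the procedure applied to the PSU campus data.

```python
from statistics import quantiles

# Hypothetical input layout: LiDAR returns as (x, y, z) tuples and the
# footprint as a list of (x, y) vertices, all in one projected CRS (meters).
Point3D = tuple[float, float, float]
Polygon = list[tuple[float, float]]


def point_in_polygon(x: float, y: float, polygon: Polygon) -> bool:
    """Ray-casting test: toggle 'inside' each time a ray toward +x crosses an edge."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def building_height(points: list[Point3D], footprint: Polygon,
                    ground_z: float, pct: int = 95) -> float:
    """Estimate height as the pct-th percentile (1..99) of roof returns minus ground."""
    roof_z = [z for x, y, z in points if point_in_polygon(x, y, footprint)]
    if not roof_z:
        raise ValueError("no LiDAR returns inside the footprint")
    if len(roof_z) == 1:
        top = roof_z[0]
    else:
        # 'inclusive' keeps the percentile within the observed min..max range
        top = quantiles(roof_z, n=100, method="inclusive")[pct - 1]
    return top - ground_z
```

Tree heights could be estimated in the same way, using a small buffer around each surveyed tree location instead of a building footprint.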

A schematic diagram outlines the following information (in summary):

- Extraction of model data from LiDAR point clouds.

- The Digital Elevation Model (DEM) and the vector data are imported into CityEngine to generate procedural models.

- The result can be shared on the web as an ArcGIS Web Scene or exported to 3D/VR application building software such as Unity3D.
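The DEM in this workflow is a raster. The Python sketch below gives a rough idea of how such a raster can be derived from LiDAR returns that are already classified as ground. It bins the points into square cells and stores the mean elevation of each cell, leaving cells without returns empty (`None`). The function name, the `(x, y, z)` input layout, the simple per-cell mean, and the south-to-north row order are all assumptions made for illustration. Production tools typically also interpolate (for example, via a TIN or inverse distance weighting) to fill empty cells.

```python
import math
from collections import defaultdict


def grid_dem(ground_points, origin_x: float, origin_y: float,
             cell_size: float, n_cols: int, n_rows: int):
    """Bin ground returns into a grid and return mean elevations per cell.

    Returns a list of rows; row 0 is the southernmost row (y grows with the
    row index). Cells that received no ground returns are None.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for x, y, z in ground_points:
        col = math.floor((x - origin_x) / cell_size)
        row = math.floor((y - origin_y) / cell_size)
        if 0 <= col < n_cols and 0 <= row < n_rows:  # skip points outside the grid
            sums[(row, col)] += z
            counts[(row, col)] += 1
    return [
        [sums[(r, c)] / counts[(r, c)] if counts[(r, c)] else None
         for c in range(n_cols)]
        for r in range(n_rows)
    ]
```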

The 3D/VR application building software we will use in this course is the Unity3D game engine (Lessons 8 and 9). A game engine is a software system for creating video games, often both 2D and 3D games. The maturity and flexibility of this software, however, make it suitable for implementing a wide range of 3D and, more recently, VR applications. The entertainment industry has always been one of the driving forces of 3D and VR technology, so it is probably not surprising that some of the most advanced tools for building 3D applications come from this area. A game engine typically comprises an engine for rendering high-quality 3D scenes, an engine for animations, and a physics and collision detection engine, as well as additional systems for sound, networking, etc. Hence, it saves the game or VR developer from having to implement these sophisticated algorithmic modules themselves. In addition, game engines usually have a visual editor that allows even non-programmers to produce games (or other 3D applications) via mouse interaction rather than coding. They also offer a scripting interface in which all required game code can be written and integrated with the rest of the engine, or in which tedious scene-building tasks can be automated.
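A main loop is one example of the infrastructure a game engine spares the developer. The Python sketch below shows the widely used fixed-timestep pattern: physics advances in constant-size steps while rendering happens once per frame of varying length. Unity follows the same idea for `FixedUpdate`, with a default fixed timestep of 0.02 s. The toy scene contains a single object that falls under gravity and collides with the ground plane. All names, and the choice of semi-implicit Euler integration, are assumptions for illustration and do not describe how any particular engine is implemented internally.

```python
from dataclasses import dataclass

GRAVITY = -9.81     # m/s^2
PHYSICS_DT = 0.02   # fixed physics step in seconds (50 Hz)


@dataclass
class Body:
    height: float          # meters above the ground plane
    velocity: float = 0.0  # vertical velocity in m/s


def physics_step(body: Body, dt: float) -> None:
    """Semi-implicit Euler integration plus a trivial ground collision."""
    body.velocity += GRAVITY * dt
    body.height += body.velocity * dt
    if body.height <= 0.0:  # collided with the ground plane at height 0
        body.height = 0.0
        body.velocity = 0.0


def run(body: Body, frame_times: list[float]) -> int:
    """Advance physics in fixed steps for a sequence of variable frame durations.

    Returns the total number of physics steps taken.
    """
    accumulator = 0.0
    steps = 0
    for frame_dt in frame_times:
        accumulator += frame_dt
        # Consume the elapsed frame time in constant-size physics steps.
        while accumulator >= PHYSICS_DT:
            physics_step(body, PHYSICS_DT)
            accumulator -= PHYSICS_DT
            steps += 1
        # A real engine would render the scene here, once per frame.
    return steps
```

Decoupling the physics rate from the frame rate keeps the simulation stable and reproducible whether the application runs at 30 frames per second on a phone or at 90 in a VR headset.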

Unity can import 3D models in many formats. A model created with CityEngine can, for instance, be exported in the Autodesk FBX format and then imported into Unity. SketchUp models can be imported as COLLADA (.dae) files. In the context of our PSU campus project, Unity3D then allows us to do things like creating a pre-programmed camera flight over the scene, placing a user-controlled avatar in the scene, and implementing dynamics (e.g., day/night and weather changes, or computer-controlled moving objects like humans or cars). It also lets us add user interactions (e.g., teleporting to different locations, scene changes like entering a building, retrieving additional information such as building details or route directions, and so on).
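At its core, a pre-programmed camera flight moves the camera smoothly along a sequence of waypoints. The Python sketch below samples camera positions from a uniform Catmull-Rom spline. This is one common choice because the curve passes through every waypoint. The waypoint coordinates and function names are hypothetical. In Unity, the equivalent logic would live in a C# script, or the flight could be built with Unity's animation tools instead.

```python
Vec3 = tuple[float, float, float]


def catmull_rom(p0: Vec3, p1: Vec3, p2: Vec3, p3: Vec3, t: float) -> Vec3:
    """Point on the uniform Catmull-Rom segment from p1 (t=0) to p2 (t=1)."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (
            2 * b
            + (-a + c) * t
            + (2 * a - 5 * b + 4 * c - d) * t2
            + (-a + 3 * b - 3 * c + d) * t3
        )
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def camera_path(waypoints: list[Vec3], samples_per_segment: int) -> list[Vec3]:
    """Sample camera positions along a spline through all waypoints.

    The first and last waypoints are duplicated so that the curve starts
    and ends exactly on them.
    """
    if len(waypoints) < 2:
        raise ValueError("need at least two waypoints")
    pts = [waypoints[0], *waypoints, waypoints[-1]]
    path = []
    for i in range(1, len(pts) - 2):  # one segment per consecutive waypoint pair
        for s in range(samples_per_segment):
            t = s / samples_per_segment
            path.append(catmull_rom(pts[i - 1], pts[i], pts[i + 1], pts[i + 2], t))
    path.append(waypoints[-1])
    return path
```

Each sampled position would be assigned to the camera at successive frames (or time steps). A look-at target, or a second spline for the viewing direction, would control where the camera points.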

The extensions and plugins available for Unity allow for recording images and movies of an (animated) scene as well as building final 3D applications for all common operating systems and many VR devices. For instance, you will learn how to record 360° images for each frame of a camera flight over the campus in Unity. These images can be combined into a 360° movie that can be uploaded to YouTube and from there watched in 3D on a smartphone using the Google Cardboard viewer (or on any other VR device able to play 360° movies directly). You will also learn how to build a standalone application from a 3D project that can run on Windows outside the Unity editor. Another way to deploy the campus project is to build a standalone app for an Android-based phone. Unity has built-in functionality to produce Android apps and push them to a connected phone via the Android SDK (e.g., the one that comes with Android Studio). Finally, Unity can interface with VR devices such as the Oculus Rift and HTC Vive to render the scene directly to their head-mounted displays, handle input from their controllers, etc. Several Unity extensions exist for such VR devices, and VR support is continuously improved and streamlined with each new Unity release. SteamVR, OVR, and VRTK for Unity currently play a central role in building Unity applications for the Rift and the Vive.
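360° frames are commonly stored in an equirectangular layout. In this layout, longitude (the horizontal viewing angle) maps linearly to the horizontal pixel axis and latitude (the vertical angle) maps linearly to the vertical axis. The Python sketch below converts a viewing direction into pixel coordinates of such an image. Three things are assumptions for illustration rather than part of any Unity API: the axis convention (y up, +z forward, +x right, as in Unity), the placement of the forward direction at the image center, and the function name.

```python
import math


def direction_to_equirect(x: float, y: float, z: float,
                          width: int, height: int) -> tuple[float, float]:
    """Map a 3D view direction to (u, v) pixel coordinates in an equirectangular image.

    Assumes y is up and +z is forward (mapped to the image center);
    u grows to the right (toward +x) and v grows downward from the top row.
    """
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0:
        raise ValueError("direction must be non-zero")
    lon = math.atan2(x, z)     # -pi..pi; 0 = forward, +pi/2 = right (+x)
    lat = math.asin(y / norm)  # -pi/2..pi/2; positive = up
    u = (lon / (2 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v
```

Converting a frame rendered into a cubemap to equirectangular form essentially runs this mapping in reverse. For each output pixel, it computes the viewing direction and samples the cube face that direction hits.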