3D Applications in Unity

In Lesson 2, we introduced 3D application building software like Unity as part of a general 3D/VR application building workflow. The main role of 3D application building software is to provide the tools to combine different 3D models into a single application, to add dynamics (which makes the model more realistic), and to design ways for a user to interact with the 3D environment. We also learned that 3D application building software typically supports building stand-alone applications for different target platforms, including common desktop operating systems, different mobile platforms, and different VR devices.

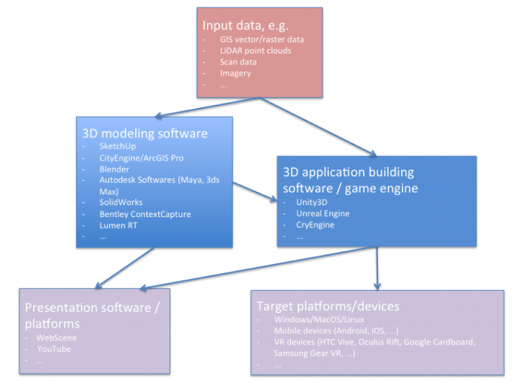

The schematic diagram above summarizes the workflow:

- Input data (in a variety of formats) is imported into either 3D modeling software or 3D application building software such as a game engine.

- In 3D modeling software, 3D models are created either manually or automatically using computational methods.

- The resulting models can either be used directly for visualization (e.g. on the web) or imported into 3D application building software such as Unity for more elaborate functionality.

- Applications built with game engines such as Unity can be deployed to the web and to a variety of other platforms, including VR headsets.

In the previous lesson, you already used some of the tools available in Unity for adding dynamics and interaction possibilities to a 3D scene: giving the user control over the movement of GameObjects, animating GameObjects via scripting, collision detection, a basic graphical user interface, and scripting more advanced behaviors, e.g. collecting coins and awarding points. As you have already experienced, behavior is added to GameObjects either through standard Unity components (e.g. Rigidbody, Collider) or through scripting.

In this lesson, you will gain more practical experience with other tools available in Unity. But first, let's start with a brief overview of the general tools Unity offers for 3D application building. As we go through this first part of the lesson, you will realize that you have already used some of these tools in the previous lesson! We have roughly divided the following list into ways to make a scene dynamic and ways to add interactions. However, this distinction is very rough, and certain aspects or tools contribute to both groups. In particular, scripting can be involved in all of these aspects and can be seen as a general-purpose tool capable of extending Unity in almost unlimited ways (e.g. in the previous lesson we used scripting both to animate our coins by constantly rotating them and to collect them upon collision with the ball).
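As a reminder of what those two coin scripts do, here is a minimal, language-neutral sketch in Python (Unity scripts themselves are written in C#, and this is not Unity's API). It assumes a hypothetical `Coin` class, a rotation speed of 90 degrees per second, and a simple distance check standing in for a trigger collider. The key idea is scaling the rotation by the frame time `dt`, analogous to multiplying by `Time.deltaTime` in a Unity script, so the coin spins at the same speed regardless of frame rate.

```python
from dataclasses import dataclass

@dataclass
class Coin:
    x: float
    z: float
    angle_deg: float = 0.0
    collected: bool = False

ROTATION_SPEED_DEG_PER_S = 90.0  # hypothetical value
PICKUP_RADIUS = 1.0              # hypothetical stand-in for a trigger collider

def update_coin(coin, ball_x, ball_z, dt):
    """Advance one frame of dt seconds; return the points awarded this frame."""
    if coin.collected:
        return 0
    # Scaling by dt keeps the rotation speed independent of the frame rate.
    coin.angle_deg = (coin.angle_deg + ROTATION_SPEED_DEG_PER_S * dt) % 360.0
    # A squared-distance check as a simple stand-in for collision detection.
    dx, dz = coin.x - ball_x, coin.z - ball_z
    if dx * dx + dz * dz <= PICKUP_RADIUS ** 2:
        coin.collected = True
        return 1
    return 0
```

Calling `update_coin` once per frame and summing its return values gives the player's score; once a coin is collected it stops rotating and awards no further points.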

Tools to make a scene dynamic:

- Animations: Animations are one of the main tools for making a scene dynamic. We will talk about animations quite a bit in this lesson, and you will create your own animation clip to realize a camera flight over a scene. Here are a few examples of animations that can make a scene more realistic and dynamic:

    - animations of otherwise static objects to make them appear more realistic, e.g. a plant or flag moving slightly as if affected by wind, or a door opening and closing

    - animations to simulate the time of day: this can largely be accomplished by animating the rotation of a Directional Light object along a pre-programmed path to simulate the movement of the sun

    - animations that move the main camera along a pre-programmed trajectory (see the third walkthrough of this lesson)

    - animations for the movement of dynamic objects populating the scene, e.g. humans or cars moving through the scene in different ways (walking vs. running) and along different trajectories

- State machines: Many objects in a dynamic 3D environment are always in exactly one of several possible states. For instance, a traffic light may be red, yellow, or green; a car may be parked, standing still with the engine running, or driving; and a humanoid avatar in the scene can have many possible states, such as sitting, standing, walking, running, reaching, etc. The transition between two states of a GameObject can be triggered by events. For instance, a human avatar can be programmed to switch from the "sitting" state to the "standing" state when another humanoid avatar enters the same room. State machines are Unity's tool for realizing this idea and are often used to give acting agents some behavior, potentially involving AI methods to make the behavior appear realistic. Often, each state in the state machine of a GameObject is associated with a particular animation.

- Particle systems: While animations add dynamics to solid objects modeled as 3D meshes, some visible effects in a dynamic environment do not lend themselves to being modeled and handled in this way. Examples are smoke, flames, liquids, clouds, rain, and snow. Particle systems are the most common approach to modeling such effects and can be easily created in Unity. A particle system consists of anywhere from a few to a very large number of particles, which are simple images or meshes that are moved and displayed together, for instance, to create the impression of smoke. A particle system has many parameters to control the properties of the particles, their movement and dynamics, their lifetime, etc.

- Physics: Physics is an important part of a truly dynamic scene and is needed to simulate the behavior of objects under gravity, collisions, and other forces. Unity's physics engine is capable of simulating the behavior of solid objects under these forces. You have already used some components of the physics engine in the previous lesson, namely Rigidbody and Collider.

- Sound: Audio is another important channel for making a 3D scene appear more realistic and increasing its immersiveness. Sometimes you may also just want to add some nice music in the background of your application, or add commentary that explains something in the scene or gives instructions to the user. Unity's audio support is based on attaching AudioListener and AudioSource components to GameObjects in the scene. The AudioListener is typically attached to the main camera. An AudioSource attached to an object can be set up so that the played audio track gets louder as the camera approaches that object. Unity supports true 3D spatial sound, which allows the user to tell which direction a sound is coming from. You already used an AudioSource in the previous lesson to play a sound when a coin was collected.
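To make the idea behind an animation clip such as a camera flight more concrete, here is a minimal Python sketch (not Unity's API; Unity animation clips are edited in the Animation window and scripted in C#). It assumes hypothetical keyframes pairing a time in seconds with a camera position and samples the position at any time `t` by linear interpolation between the surrounding keyframes. Unity's animation curves usually interpolate more smoothly than this, so treat it as a simplification.

```python
from bisect import bisect_right

# Hypothetical camera-flight keyframes: (time in seconds, (x, y, z) position)
KEYFRAMES = [
    (0.0, (0.0, 10.0, -20.0)),
    (4.0, (20.0, 15.0, 0.0)),
    (8.0, (0.0, 25.0, 20.0)),
]

def sample_position(keyframes, t):
    """Return the linearly interpolated position at time t, clamped to the clip."""
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)           # index of the next keyframe after t
    (t0, p0), (t1, p1) = keyframes[i - 1], keyframes[i]
    u = (t - t0) / (t1 - t0)             # progress (0..1) between the two keyframes
    return tuple(a + (b - a) * u for a, b in zip(p0, p1))
```

Sampling this function once per frame with the elapsed time and assigning the result to the camera position would produce a simple flight along the keyframed path.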
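The state machine idea described above can be sketched as a transition table that maps a (current state, event) pair to the next state, with each state tied to an animation. The Python below is an illustration of the concept only, not Unity's Animator API; the state names follow the avatar example in the text, while the event names and animation clip names are hypothetical.

```python
# Transition table: (current state, event) -> next state. Event names are hypothetical.
TRANSITIONS = {
    ("sitting", "avatar_entered_room"): "standing",
    ("standing", "avatar_left_room"): "sitting",
    ("standing", "start_walking"): "walking",
    ("walking", "stop"): "standing",
}

# Each state is associated with an animation clip (clip names are hypothetical).
ANIMATIONS = {"sitting": "Sit_Idle", "standing": "Stand_Idle", "walking": "Walk_Loop"}

class AvatarStateMachine:
    def __init__(self, initial="sitting"):
        self.state = initial

    def handle(self, event):
        """Switch state if a transition exists for this event; otherwise stay put.

        Returns the animation clip that should play in the resulting state.
        """
        self.state = TRANSITIONS.get((self.state, event), self.state)
        return ANIMATIONS[self.state]
```

Events without a matching transition (for example, "stop" while sitting) simply leave the avatar in its current state, which mirrors how only the transitions you explicitly define can fire.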
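A particle system boils down to a loop that emits new particles, moves and ages all existing ones, and removes those that exceed their lifetime. The Python sketch below illustrates that loop for a smoke-like effect; it is not Unity's Particle System component, and the emission rate, lifetime, and velocity ranges are hypothetical parameters chosen only to show where such settings come into play.

```python
import random
from dataclasses import dataclass

@dataclass
class Particle:
    pos: list        # [x, y, z]
    vel: list        # [vx, vy, vz]
    age: float = 0.0

class SmokeEmitter:
    """Minimal particle system: emit, move, age, and remove particles."""

    def __init__(self, rate=20.0, lifetime=3.0, seed=0):
        self.rate = rate            # particles emitted per second (hypothetical)
        self.lifetime = lifetime    # seconds before a particle disappears (hypothetical)
        self.particles = []
        self._to_emit = 0.0         # fractional particles carried over between frames
        self._rng = random.Random(seed)

    def update(self, dt):
        # Emit new particles at the origin with a slightly randomized upward drift.
        self._to_emit += self.rate * dt
        while self._to_emit >= 1.0:
            self._to_emit -= 1.0
            vel = [self._rng.uniform(-0.2, 0.2),
                   self._rng.uniform(0.8, 1.2),
                   self._rng.uniform(-0.2, 0.2)]
            self.particles.append(Particle(pos=[0.0, 0.0, 0.0], vel=vel))
        # Move and age every particle, then drop those past their lifetime.
        for p in self.particles:
            p.pos = [c + v * dt for c, v in zip(p.pos, p.vel)]
            p.age += dt
        self.particles = [p for p in self.particles if p.age < self.lifetime]
```

Rendering each particle as a small, semi-transparent image at its position would then give the impression of rising smoke; a real particle system exposes many more parameters (size and color over lifetime, shape of the emitter, and so on).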
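What a physics engine does for a falling Rigidbody can be illustrated with a few lines of numerical integration. The Python sketch below (a concept illustration, not Unity's physics engine) advances a body in fixed time steps using semi-implicit Euler integration and resolves a collision with a ground plane at y = 0 by reflecting the velocity. The gravity value and the 0.02 s step match Unity's defaults for `Physics.gravity` and the fixed timestep; the `restitution` factor is an assumed stand-in for a physics material's bounciness.

```python
GRAVITY = -9.81   # m/s^2, matching the y-component of Unity's default gravity
FIXED_DT = 0.02   # s, matching Unity's default fixed timestep

def step(y, vy, dt=FIXED_DT, restitution=0.5):
    """Advance a falling body by one fixed step, with a ground plane at y = 0."""
    vy += GRAVITY * dt          # gravity changes the velocity
    y += vy * dt                # the velocity changes the position
    if y < 0.0:                 # collision with the ground plane
        y = 0.0
        vy = -vy * restitution  # bounce back, losing some energy
    return y, vy

def simulate(y0, seconds):
    """Drop a body from height y0 (at rest) and return (height, velocity) after `seconds`."""
    y, vy = y0, 0.0
    for _ in range(round(seconds / FIXED_DT)):
        y, vy = step(y, vy)
    return y, vy
```

Dropping a body from 1 m and simulating 10 s shows the bounces shrinking until it effectively rests on the ground, which is the kind of behavior you get for free by adding Rigidbody and Collider components.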
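The behavior of an AudioSource that gets louder as the listener approaches, and of spatial sound that reveals direction, can be approximated with a distance-based volume curve and a left/right pan. The Python sketch below is an assumed simplification, loosely in the spirit of Unity's Linear and Logarithmic volume rolloff settings; Unity's exact curves may differ, and the function names and default distances are hypothetical. Positions are 2D points on the ground (XZ) plane, and `listener_right` is the listener's normalized right-pointing direction.

```python
import math

def linear_rolloff(distance, min_dist=1.0, max_dist=50.0):
    """Full volume inside min_dist, fading linearly to silence at max_dist."""
    if distance <= min_dist:
        return 1.0
    if distance >= max_dist:
        return 0.0
    return 1.0 - (distance - min_dist) / (max_dist - min_dist)

def inverse_rolloff(distance, min_dist=1.0):
    """Inverse-distance falloff: the volume halves each time the distance doubles."""
    return 1.0 if distance <= min_dist else min_dist / distance

def stereo_pan(listener_pos, listener_right, source_pos):
    """Return -1 (fully left) to +1 (fully right) from the direction to the source."""
    dx = source_pos[0] - listener_pos[0]
    dz = source_pos[1] - listener_pos[1]
    d = math.hypot(dx, dz)
    if d == 0.0:
        return 0.0
    # Projection of the normalized direction onto the listener's right vector.
    return (dx * listener_right[0] + dz * listener_right[1]) / d
```

Because the listener usually sits on the main camera, moving the camera toward an object increases the computed volume, while turning the camera changes the pan, which is the cue that lets a user tell where a sound comes from.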

Tools to add interactions:

- User input: We already saw how keyboard input can be used to control the movement of an object in the scene (the ball in the previous lesson) through scripting that manipulates the object's transformation parameters. Similarly, user input can be used to interact with objects in the scene in other ways, such as opening doors, pushing or pulling objects, or pressing buttons. Unity's Input Manager can be used to configure the input devices for a project. We will see the mouse used as an input controller in the next lesson.

- GUI: 3D applications still make use of classical 2D graphical user interface (GUI) elements to display information to the user, provide selection choices, or collect other user input. For instance, a GUI menu could let the user jump immediately to one of several predefined locations in the scene, or a dialog box could let the user enter values for important variables like movement speed. However, Unity's UI system is beyond the scope of this introductory course; the Unity website offers many video tutorials on the different UI elements if you are interested in this aspect. In the previous lesson, we already created a very simple GUI label displaying the points awarded to the player. In addition, the very short video Overview of An Interactive Interface Demo in Unity demonstrates a simple Unity UI for triggering different animation states of a face, connecting back to what we discussed above regarding animations and state machines. The Unity Asset Store contains thousands of assets for creating menus and GUIs in general.

- Complex 3D/VR interactions: Especially with the advent of special VR controllers that complement consumer-level head-mounted displays, new ways to interact with objects in a 3D or VR setting are conceived every day. Modern VR controllers, such as the HTC Vive Controller, combine buttons, trackpads, and triggers that allow for triggering interactions with certain clicks. In addition, because the position and orientation of the controllers in the room are tracked, completely new interactions become possible: touching or pointing at objects in the scene, or performing complex gestures with the controllers, e.g. for moving, rotating, or scaling other objects, teleporting in the scene, or measuring distances or volumes. Template scripts for many of these interactions are already publicly available. Devising and implementing completely new ways to interact with objects in the scene, though, typically requires quite a bit of coding.
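The keyboard-controlled movement described under user input can be sketched as two steps: turning pressed keys into axis values between -1 and 1, then moving the object proportionally to speed and frame time. The Python below illustrates this idea only; the key bindings are hypothetical but modeled on the "Horizontal" and "Vertical" axes that Unity's Input Manager defines by default for the arrow keys and WASD, and it ignores the smoothing Unity can apply to axis values.

```python
import math

# Hypothetical key bindings modeled on Unity's default "Horizontal"/"Vertical" axes.
AXES = {
    "Horizontal": {"d": 1.0, "right": 1.0, "a": -1.0, "left": -1.0},
    "Vertical": {"w": 1.0, "up": 1.0, "s": -1.0, "down": -1.0},
}

def get_axis(name, pressed):
    """Sum the contributions of the pressed keys to an axis, clamped to [-1, 1]."""
    value = sum(AXES[name].get(k, 0.0) for k in pressed)
    return max(-1.0, min(1.0, value))

def move(position, pressed, speed, dt):
    """Move on the XZ plane; normalize so that diagonal movement is not faster."""
    h = get_axis("Horizontal", pressed)
    v = get_axis("Vertical", pressed)
    length = math.hypot(h, v)
    if length > 1.0:
        h, v = h / length, v / length
    x, y, z = position
    return (x + h * speed * dt, y, z + v * speed * dt)
```

Keeping key bindings in a table, rather than hard-coding keys in the movement logic, reflects the purpose of an input configuration: the same movement code keeps working if the bindings change.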
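Pointing at objects with a tracked controller is commonly implemented as a raycast: a ray starts at the controller's position, follows its forward direction, and the nearest object it hits is selected. The Python sketch below illustrates this with a ray-sphere intersection, assuming each object is approximated by a hypothetical bounding sphere and the direction vector is normalized; in Unity, the engine performs raycasts against colliders for you, so this only shows the underlying geometry.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Distance along a normalized ray to the first hit on a sphere, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * e for d, e in zip(direction, oc))
    c = sum(e * e for e in oc) - radius * radius
    disc = b * b - c                      # discriminant of t^2 + 2bt + c = 0
    if disc < 0.0:
        return None                       # the ray misses the sphere
    t = -b - math.sqrt(disc)
    if t < 0.0:
        t = -b + math.sqrt(disc)          # the origin is inside the sphere
    return t if t >= 0.0 else None

def pick_object(origin, direction, objects):
    """Return the name of the closest object the controller points at, or None."""
    best_name, best_t = None, math.inf
    for name, (center, radius) in objects.items():
        t = ray_sphere_hit(origin, direction, center, radius)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    return best_name
```

Choosing the closest hit matters when several objects lie along the ray: pointing at a door with a lamp behind it should select the door.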

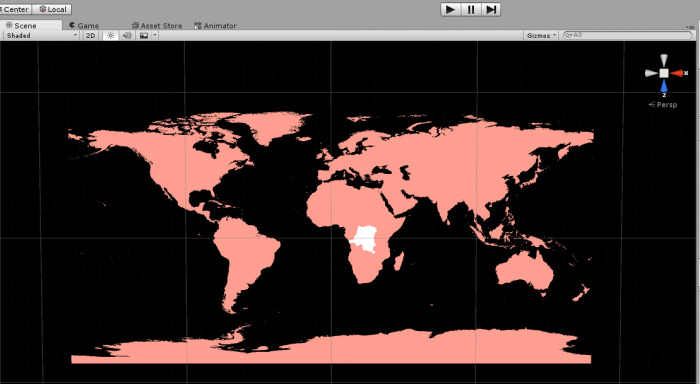

Since this is a Geography/GIS class, we also want to briefly mention that support for importing GIS data into 3D modeling and application building software, for use in 3D or VR applications, is slowly increasing. The image below shows a world map GameObject in the Unity editor, consisting of meshes for all countries. The meshes were created from an ESRI shapefile using BlenderGIS, an add-on for importing and exporting GIS data in Blender. The meshes were then exported as an Autodesk .fbx file and imported into Unity.

Recently, Mapbox announced the Mapbox Unity SDK, providing direct access to Mapbox-hosted GIS data and Mapbox APIs within Unity. We believe that more initiatives like this can be expected in the near future, partially driven by the huge potential that current developments in VR and AR technology offer to GIS software developers.
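One practical step when bringing GIS data into a 3D scene is converting geographic coordinates into scene coordinates. The Python sketch below shows one possible approach, assuming Web Mercator (EPSG:3857), the projection used by most web map tiles including Mapbox's. It projects longitude/latitude to meters and then expresses positions relative to a chosen origin, mapping east to +X and north to +Z because Unity's Y axis points up. The function names and the `scale` parameter are hypothetical, and this is not the Mapbox Unity SDK's API.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 semi-major axis used by Web Mercator (EPSG:3857)

def lonlat_to_web_mercator(lon_deg, lat_deg):
    """Project longitude/latitude in degrees to Web Mercator meters (x, y)."""
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4 + math.radians(lat_deg) / 2))
    return x, y

def to_scene(lon_deg, lat_deg, origin_lon, origin_lat, scale=1.0):
    """Scene (x, y, z) with Y up: east -> +X, north -> +Z, relative to an origin."""
    ox, oy = lonlat_to_web_mercator(origin_lon, origin_lat)
    mx, my = lonlat_to_web_mercator(lon_deg, lat_deg)
    return ((mx - ox) * scale, 0.0, (my - oy) * scale)
```

Working relative to a local origin keeps coordinate values small, which helps because Unity stores positions as 32-bit floats and loses precision with very large numbers such as raw projected coordinates.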