Extended Reality

Related to 3D visualization is a new set of technologies that has quickly gained attention in recent years: extended reality. Extended reality is an umbrella term that encompasses several related technologies, including virtual reality and augmented reality. You have likely seen these technologies used in sports and gaming: Pokémon GO is an augmented reality mobile game, and the Oculus Rift is a virtual reality headset.

Student Reflection

Even the yellow first down line you see on the football field during NFL games is an example of augmented reality. Watch the video about it: How the NFL's magic yellow line works. Can you think of an environmental or emergency management scenario in which similar technology might be useful?

MacEachren and colleagues (1999), building on the work of Michael Heim (1998), categorized extended reality technologies by “the four I’s”: immersion, interactivity, information intensity, and intelligence of objects. It may be helpful to keep these factors in mind as we discuss extended reality technologies. The most common way to categorize these tools, however, is along a continuum of immersion, from augmented reality (i.e., the overlay of objects onto the real world) to virtual reality (i.e., full immersion in an imagined space).

As it is the most commonly used term and a sufficient label for this technology, we will use the term virtual reality when discussing immersive computer-modeled environments. Note, however, that some scholars have used the term virtual environments instead. A virtual environment is a defined three-dimensional computer-simulated environment that enables user navigation and interaction (Slocum et al. 2009). This term is occasionally preferred over virtual reality because virtual environments often depict imagined things. One example is a visualization of Earth’s ozone layer, which is not actually visible to the human eye, and thus not a part of reality (Slocum et al. 2009).

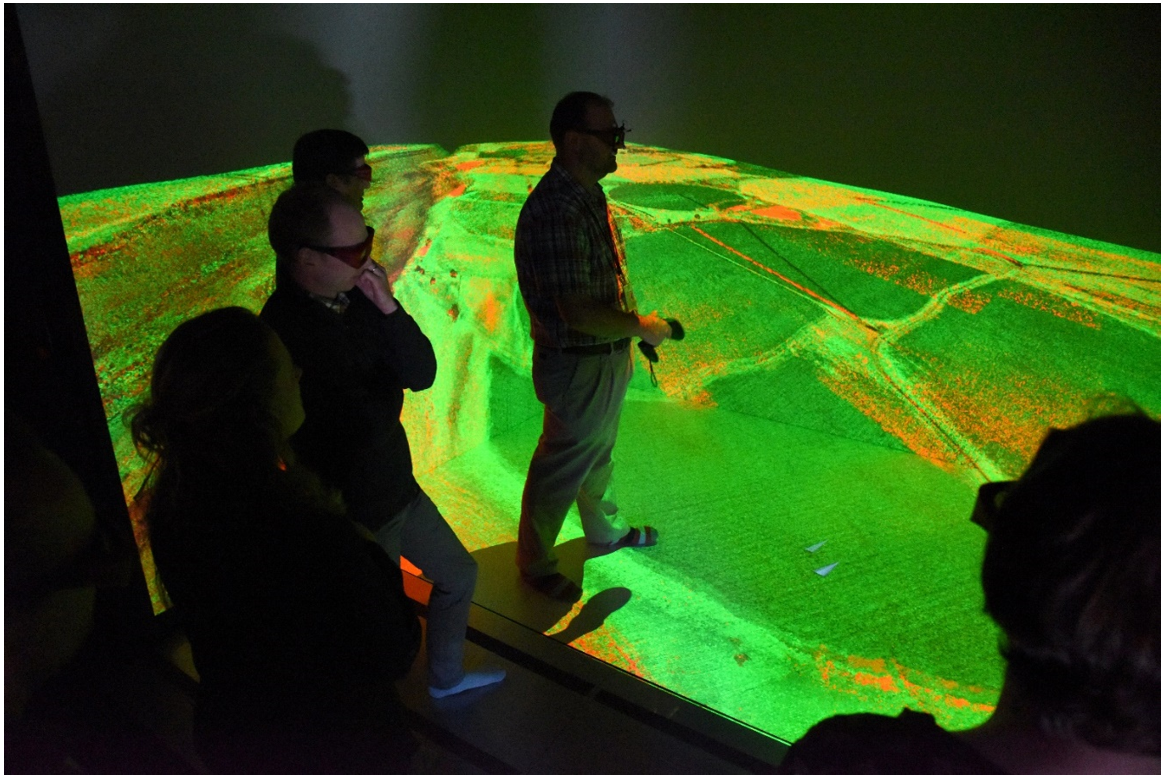

Applications of extended reality in geography include creating virtual cities, virtual field trips, digital globes, and more. Shown in Figure 8.8.2 is a Computer-Assisted Virtual Environment (CAVE) from the Idaho National Laboratory.

Similar projects have been developed here at Penn State. In the broadest terms, virtual reality is being used to permit travel to locations otherwise inaccessible to users. Immersive Technologies for Archaeology, for example, is a project that brings users to the Mayan ruins at Cahal Pech in Belize. Future plans for the project include completing a historical model of the Mayan city, permitting users to view not only a place but also a time that they would otherwise be unable to inhabit. Other projects, such as Visualizing Forest Futures (VIFF; Figure 8.8.3), extend in the opposite direction, giving users a view of the projected future of forests based on possible future climate scenarios.

Student Reflection

Think back to the first topic introduced in this lesson: cartographic generalization. How do we resolve the differences between the premise of generalization and the goals of VR?

As mentioned previously, not all extended reality is fully immersive; in fact, the fastest-growing type of extended reality is augmented reality (AR). Social media applications such as Snapchat use augmented reality for entertainment, but AR can also be used in education and research applications. Figure 8.8.4 below shows the application Obelisk AR, developed at Penn State. Users can use the app to interact with a real-world object (the Obelisk) in a mobile environment. Tapping on a stone on the Obelisk, for example, brings up a pop-up on the user’s smartphone screen that explains the type and origin of the selected stone.

Augmented reality has also shown considerable potential for navigational and wayfinding applications. Earlier in this lesson, we discussed personal navigational devices in the context of passive interactivity. In some cases, augmented reality has extended what such navigational devices can do. Pilots, for example, are often assisted via heads-up displays (Figure 8.8.5). These displays show crucial information overlaid across the environment, providing a better decision-making tool than a separate digital display.

Heads-up displays are also used for automobile navigation; such displays are offered by some luxury vehicle navigational systems, such as the one in Figure 8.8.6. Unlike AR pilot navigation systems, automobile heads-up navigation displays are not in widespread use. As these technologies become more accessible and affordable, however, they may become much more common.

Watch this video of a car using a heads-up navigation display as it drives through intersections and accelerates onto a highway (2:02).